On February 27, 2016, longstanding boundary 2 board member Arif Dirlik gave his final lecture at the University of British Columbia. The talk, The Rise of China and the End of the World As We Know It, is available in full on the UBC Library’s website.

Author: boundary2

-

Martin Woessner — The Sociologists and the Squirrel — Review of “Georg Simmel and the Disciplinary Imaginary”

by Martin Woessner

Review of Elizabeth S. Goodstein, Georg Simmel and the Disciplinary Imaginary (Palo Alto: Stanford UP, 2017).

Georg Simmel only began to be recognized as one of the founding figures of modern sociology shortly before his death in 1918. The recognition came too late and generally amounted to the backhanded compliment in which scholars specialize: Simmel was brilliant, but. As an academic discipline in continental Europe and North America, sociology was still in the process of finding its methodological and institutional footing at the time. It had neither the heritage nor the prestige of philosophy, but modernity was on its side. It was the discipline of the future. Sociologists were rigorous, scientific, and systematic—everything that Simmel supposedly was not. Especially in comparison to Durkheim and Weber, Simmel’s work seemed dilettantish, more subjective and speculative than objective or empirical; more like poetry, in other words, than sociology. It was a strange complaint to make of somebody who wrote a tome like The Philosophy of Money, which was hundreds of pages long and chock full of concrete examples. But it stuck.

In the early decades of the twentieth century, as sociology became ever more scientific, Simmel’s fame became that of the negative example. Neither his methodological preoccupations, which were wide-ranging, nor his intellectual style, which shunned footnotes and bibliographies, fit within the narrowing confines of academic sociology. He thus had to be written into and out of the discipline simultaneously. In a 1936 survey of social thought across the Rhine, Raymond Aron conceded that “the development of sociology as an autonomous discipline can, in fact, scarcely be explained without taking his work into account,” but then proceeded to dispatch Simmel in just a few short pages, as if he were some kind of embarrassing distant relative who had to be acknowledged, but not necessarily celebrated.[1] Another, perhaps more poetic but no less dismissive portrait came from José Ortega y Gasset, who likened Simmel to a “philosophical squirrel,” more content to leap from branch to branch, indeed from tree to tree, than to harvest the insights of any one particular area of inquiry.[2]

Simmel may have ended up a squirrel by necessity rather than by choice. Unable to secure a fully funded academic post until very late in his career, and then only in out-of-the-way Strasbourg—rather than, say, Heidelberg, where, with the help of Weber, he had hoped to obtain an appointment, or Berlin, where he lived and studied and taught as an unsalaried lecturer for most of his life—Simmel never enjoyed the academic security that might have lent itself to less squirrelish, more scientific pursuits. His Berlin lectures were fabled performances—attended by everyone from Rainer Maria Rilke to George Santayana, who praised them to his Harvard colleague William James—but he nevertheless “remained,” as Elizabeth Goodstein argues in her new book, Georg Simmel and the Disciplinary Imaginary, “at the margins of the academic establishment.”[3]

Goodstein revisits Simmel’s marginality because she thinks it is the key to understanding not just his career, which was simultaneously storied and tenuous, but also his curious absence from academic debates today. Something essential about Simmel has been lost, she argues, in the narrative that transformed Simmel into a sociological ancestor, in the “decoupling” of his more sociological work from its philosophical foundations.[4] Indeed, as David Frisby pointed out some time ago, Simmel never really thought of himself as a sociologist anyhow.[5] There was a reason he didn’t call it The Sociology of Money. Writing to a French colleague as early as 1899, Simmel confessed that “it is altogether rather painful for me that abroad I am only known as a sociologist—whereas I am a philosopher, see my life’s vocation in philosophy, and only pursue sociology as a sideline.”[6]

Heeding this remark, Goodstein urges us to see Simmel more as he saw himself: a marginalized figure, caught between ascendant “social science” on the one hand and “a kind of philosophy that was passing away” on the other.[7] If we do so, we might begin to appreciate how very relevant Simmel’s work is to contemporary debates not just in sociology, but also across the humanities and social sciences more generally. In the vicissitudes of Simmel’s career and legacy, in other words, Goodstein sees a parable or two for the current intellectual epoch, in which academic disciplines seem to be in the process of reforming themselves along new and sometimes competing lines of inquiry.

Instead of presenting us with Simmel as squirrel, then, Goodstein offers us a portrait of Simmel as conflicted interdisciplinarian. It is reassuring, I suppose, to think that what our academic colleagues dismiss as our most evident weaknesses might one day be viewed as our greatest strengths, that what seems scatterbrained now may be heralded as innovative in the future. For those of us who work in the amorphous field of interdisciplinary studies, Goodstein’s book might serve as both legitimation and justification, perhaps even as a defense of our squirreliness to our colleagues in the harder sciences. Still, it is difficult to shake the idea that interdisciplinarity is, like disciplinarity was a century ago, just another fad, another way to demonstrate to society that what we academics do behind closed doors is valuable and worthy of recognition, if not also funding.

As Louis Menand and others have argued, talk of interdisciplinarity is, at root, an expression of anxiety.[8] In the academy today there is certainly plenty to be anxious about, but, like Menand, I’m not sure that the discourse of interdisciplinarity adequately addresses any of it. Interdisciplinarity does not address budget crises, crumbling infrastructure, or the increasingly contingent nature of academic labor. In fact, it may even exacerbate these problems, insofar as it questions the rationale for having distinct disciplinary departments in the first place: why not collapse two or three different programs in the humanities into one, cut half their staff, and run a leaner, cheaper interdisciplinary program instead? If we are all doing “theory” anyway, what difference does it make if we are attached to a literature department, a philosophy department, or a sociology department?

That sounds paranoid, I know. Interdisciplinarity is not an evil conspiracy concocted by greedy administrators. It is simply the academic buzzword of our times. But like all buzzwords, it says a lot without saying anything of substance, really. It repackages what we already do and sells it back to us. Like any fashion or fad, it is unique enough to seem innovative, but not so unique as to be truly independent. Well over a century ago Simmel suggested that fashion trends were reflections of our competing desires for both “imitation” and “differentiation.”[9] Interdisciplinarity’s fashionable status in the contemporary academy suggests that these desires have found a home in higher education. In an effort to differentiate ourselves from our colleagues, we try to imitate the innovators. We buy into the trend. Interdisciplinary programs, built around interdisciplinary pedagogy, now produce and promote interdisciplinary research and scholarship, the end results of which are interdisciplinary curricula, conferences, journals, and textbooks. All of them come at a price. None of them, it seems to me, are worth it.

When viewed from this perspective at least, Goodstein’s book isn’t about Simmel at all. It is about what has been done to Simmel by the changing tides of academic fashion. The reception of his work becomes, in Goodstein’s hands, a cautionary tale about the plight of disciplinary thinking in the twentieth and twenty-first centuries. The first section of the book, which investigates the way in which Simmel became a “(mostly) forgotten founding father” of modern sociology, shows how “Simmel’s oeuvre came to be understood as simultaneously foundational for and marginal to the modern social sciences.”[10] Insofar as he made social types (including “the stranger” and “the adventurer”) and forms of social interaction (such as “exchange” and “conflict,” but also including “sociability” itself) topics worthy of academic scrutiny, Simmel proved indispensable; insofar as he did so in an impressionistic as opposed to empirical or quantitative style, he was expendable. He was both imitated and ignored. Simmel helped make the discipline of sociology possible, but he would remain forever a stranger to it—“a philosophical Monet,” as his student György Lukács described him, surrounded by conventional realists.[11]

Goodstein uses the Simmel case to warn against the dangers of what now gets called, in those overpriced textbooks, “disciplinary reductionism.” She doesn’t use that term, but she is not immune to similar sounding jargon, which is part and parcel of interdisciplinary branding. “In exploring the history of Simmel’s representation as (proto)sociologist,” she writes, “I render more visible the highly tendentious background narratives on which the plausibility of that metadisciplinary (imagined, lived) order as a whole depends—and call into question the (largely tacit) equation of the differentiation and specialization knowledge practices with intellectual progress.”[12] An explanatory footnote tacked on to this sentence doesn’t clarify things all that much: “My purpose is not to argue against the value disciplines or to discount the modes of knowing they embody and perpetuate, but to emphasize that meta-, inter-, pre-, trans-, and even anti-disciplinary approaches are not just supplements or correctives to disciplinary knowledge practices but are themselves valuable constitutive features of a vibrant intellectual culture.”[13] Sounds squirrely to me, and not necessarily in a good way.

If Simmel’s reception in academic sociology serves as a cautionary tale about the limits of disciplinary knowledge for Goodstein, his writings represent something else entirely: a light of inspiration at the end of the disciplinary tunnel. They offer “an alternative vision of inquiry into human cultural or social life as a whole,” one that rejects the narrow tunnel-vision of specialized, compartmentalized, disciplinary frameworks.[14] It is a vision that might also help us to think critically about interdisciplinarity, for as Goodstein points out later in the book, in a more critical voice, “the contemporary turn to interdisciplinarity remains situated in a discursive space shaped and reinforced by disciplinary divisions.”[15]

The middle section of Goodstein’s book is devoted to a close reading of The Philosophy of Money. Its three chapters argue, each from a slightly different angle, that Simmel’s magnum opus substantiates just such an “alternative vision.” Here Simmel is presented not as the academic sociologists came to portray him, but as what he so desperately wanted to be seen as: a philosopher. Goodstein argues that Simmel should be understood as a “modernist philosopher,” a kind of missing link, as it were, between Nietzsche on the one side and Husserl and Heidegger on the other. Simmel takes from Nietzsche the importance of post-Cartesian perspectivism, and, in applying it to social and cultural life, anticipates not just the phenomenology of Husserl and the existential philosophy of Heidegger, but also the critical theory of Lukács, and, later, the Frankfurt School. This is the theory you have been waiting for, the one that brings it all together.

In Goodstein’s view, The Philosophy of Money attempts nothing less than an inquiry into all social and cultural life through the subject of money relations. As such, it is not, she insists, “inter- or transdisciplinary.” “It is,” she writes, “metadisciplinary.”[16] It operates at a level all its own. It uses the phenomena associated with money—abstraction, valuation, and signification, for example—to explore larger questions associated with epistemology, ethics, and even metaphysics more generally. It shuttles back and forth between the most concrete and immediate observations and the most far-reaching speculations. It helps us understand how calculation, objectivity, and relativity, for example, become the defining features of modernity. It shows us how seemingly objective social and cultural forms—from artistic styles to legal and political norms—emerge out of intimate, subjective experience. But it also shows how these forms come to reify the forms of life out of which they initially sprang.[17]

In Simmel’s hands, money becomes a synecdoche—the “synecdoche of synecdoche,” as Goodstein repeats one too many times—for social and cultural life as a whole.[18] What Hegel’s Phenomenology of Spirit did for history, The Philosophy of Money does for cold, hard cash. In this regard, at least, Goodstein’s efforts to re-categorize Simmel as a “modernist philosopher”—to put the philosophy back into the book, as it were—are insightful. Still, as I read Georg Simmel and the Disciplinary Imaginary, I couldn’t help but wonder if it might not be more valuable these days to put some of the money back into it instead. Given all the ways in which interdisciplinarity has been sold to us, and given the neoliberal reforms that are sweeping through the academy, now might be the time to focus on money as money, and not merely as synecdoche.

The problems we face today, both within and beyond the academy, are tremendous. We live in an age, as Goodstein puts it, of “accelerating ecological, economic, and sociopolitical crises.”[19] No matter what its promotional materials suggest, interdisciplinarity will not rescue us from any of them. Goodstein eventually admits as much: “the proliferation of increasingly differentiated inter-, trans-, and post-disciplinary practices reinforces rather than challenges the philosophical—ethical, but also metaphysical—insufficiencies of the modern disciplinary imaginary.”[20] In the final section of her book she emphasizes not so much the disciplining of Simmel’s work by those narrow-minded sociologists as the liberating theoretical potential of his “practices of thought,” which “even today do not comfortably fit into existing institutional frameworks.”[21] After depicting Simmel as a victim of academic rationalization, Goodstein now presents him as a potential savior—a way out of the mess of disciplinarity altogether.

Attractive as that sounds, I’m not sure that Simmel’s “modernist philosophy” will rescue us, either. In fact, I’m not sure that any philosophical or theoretical framework will, by itself, give us what we need to confront the challenges we face. Worrying about finding the right intellectual perspective may not be as important as worrying about where, in our society, the money comes from and where—and to whom—it goes at the end of the day. We need some advocacy to go along with our philosophy, and fretting over the merits of inter-, trans-, post-, meta-, anti-disciplinarity may just get in the way of it.

Simmel predicted that he would “die without spiritual heirs,” which was, in his opinion, “a good thing.” In a revealing quotation that serves as the guiding leitmotif of Goodstein’s book, he likened his intellectual legacy to “cold cash divided among many heirs, and each converts his portion into an enterprise of some sort that corresponds to his nature; whose provenance in that inheritance is not visible.”[22] Georg Simmel and the Disciplinary Imaginary goes a long way towards reestablishing that provenance. Maybe it’s about time we start calling for an inheritance tax to be imposed upon the current practitioners and proponents of interdisciplinarity, who have turned that cold cash into gold.

Martin Woessner is Associate Professor of History & Society at The City College of New York’s Center for Worker Education. He is the author of Heidegger in America (Cambridge UP, 2011).

Notes

[1] Raymond Aron, German Sociology, trans. Mary and Thomas Bottomore (New York: Free Press of Glencoe, 1964), 5 n.1. Aron’s text was first published in French in 1936.

[2] Quoted in Lewis Coser, Masters of Sociological Thought: Ideas in Historical and Social Context, Second Edition (Long Grove, Illinois: Waveland Press, 1997), 199.

[3] Goodstein, Georg Simmel, 15.

[4] Ibid., 112.

[5] David Frisby, Fragments of Modernity: Theories of Modernity in the Work of Simmel, Kracauer and Benjamin (Cambridge, Massachusetts: MIT Press, 1986), 64.

[6] Goodstein, Georg Simmel, 41.

[7] Ibid., 29.

[8] Louis Menand, The Marketplace of Ideas: Reform and Resistance in the American University (New York: Norton, 2010), 97.

[9] Georg Simmel, “Fashion,” in On Individuality and Social Forms, edited and with an introduction by Donald N. Levine (Chicago: University of Chicago Press, 1971), 296.

[10] Goodstein, Georg Simmel, 106.

[11] “Introduction to the Translation,” in Simmel, The Philosophy of Money, trans. Tom Bottomore and David Frisby, from a first draft by Kaethe Mengelberg (London: Routledge & Kegan Paul, 1978), 29.

[12] Goodstein, Georg Simmel, 33.

[13] Ibid., note 43.

[14] Ibid., 67.

[15] Ibid., 131.

[16] Ibid., 155.

[17] This point is emphasized in Simmel’s final work, The View of Life: Four Metaphysical Essays with Journal Aphorisms, trans. John A.Y. Andrews and Donald N. Levine, with an introduction by Donald N. Levine and Daniel Silver, and an appendix, “Journal Aphorisms, with an Introduction” edited, translated, and with an introduction by John A.Y. Andrews (Chicago: University of Chicago Press, 2010), 351-352.

[18] Goodstein, Georg Simmel, 171.

[19] Ibid., 329.

[20] Ibid., 258.

[21] Ibid., 254.

[22] Ibid., 1.

-

David Thomas – On No-Platforming

by David Thomas

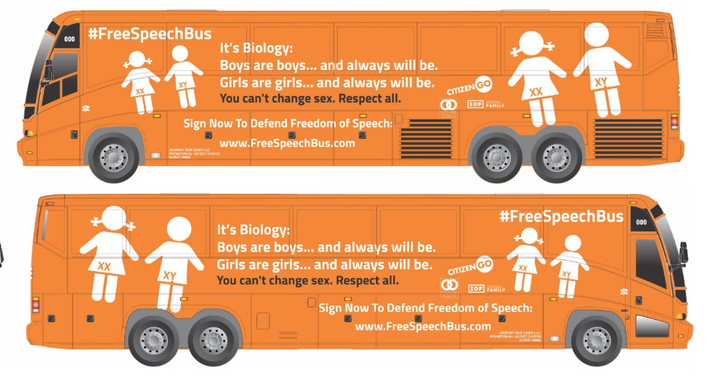

No-platforming has recently emerged as a vital tactical response to the growing mainstream presence of the self-styled alt-right. Described by proponents as a form of cordon sanitaire, and vilified by opponents as the work of coddled ideologues, no-platforming entails the struggle to prevent political opponents from accessing institutional means of amplifying their views. The tactic has drawn criticism from across the political spectrum. Former US President Barack Obama was himself so disturbed by the phenomenon that late in his presidency he was moved to remark:

I’ve heard some college campuses where they don’t want to have a guest speaker who is too conservative or they don’t want to read a book if it has language that is offensive to African-Americans or somehow sends a demeaning signal towards women. …I gotta tell you I don’t agree with that either. I don’t agree that you, when you become students at colleges, have to be coddled and protected from different points of view…Sometimes I realized maybe I’ve been too narrow-minded, maybe I didn’t take this into account, maybe I should see this person’s perspective. …That’s what college, in part, is all about…You shouldn’t silence them by saying, “You can’t come because I’m too sensitive to hear what you have to say” … That’s not the way we learn either. (qtd. in Kingkade 2017 [2015])

Obama’s words here nicely crystallize one traditional understanding of the social utility of free speech. In classical liberal thought, free speech is positioned as the cornerstone of a utilitarian account of political and technological progress, one that views the combat of intellectually dexterous elites as the crucible of social progress. The free expression of informed elite opinion is imagined as an indispensable catalyst to modernity’s ever-accelerating development of new knowledge. The clash of unfettered intellects is said to serve as the engine of history.

For John Stuart Mill, one of the first to formulate this particular approach to the virtues of free expression, the collision of contrary views was necessary to establish any truth. Mill explicitly derived his concept of the truth-producing “free market of ideas” from Adam Smith’s understanding of how markets work. In both cases, moderns were counselled to entrust themselves to the discretion of a judicious social order, one that was said to emerge spontaneously as rational individuals exerted their vying bids for self-expression and self-actualization. These laissez faire arguments insisted that an optimal ordering of ends and means would ultimately be produced out of the mass of autonomous individual initiatives, one that would have been impossible to orchestrate from the vantage point of any one individual or group. In both cases – free speech and free markets – it was said that if we committed to the lawful exercise of individual freedoms we could be sure that the invisible hand would take care of the rest, sorting the wheat from the chaff, sifting and organizing initiatives according to the outcomes that best befit the social whole, securing our steady collective progress toward the best of all possible worlds. No surprise, then, that so much worried commentary on the rise of the alt-right has cautioned us to abide by the established rules, insisting that exposure to the free speech collider chamber will wear the “rough edges” off the worst ideas, allowing their latent kernels of rational truth to be developed and revealed, whilst permitting what is noxious and unsupportable to be displayed and refuted.

A key point, then, about no-platforming is that its practice cuts against the grain of this vision of history and against the theory of knowledge on which it is founded. For in contrast to proponents of Mill’s proceduralist epistemology, student practitioners of no-platforming have appropriated to themselves the power to directly intervene in the knowledge factories where they live and work, “affirmatively sabotaging” (Spivak 2014) the alt-right’s strategic attempts to build out its political legitimacy. And it is this use of direct action, and the site-specific rejection of Mill’s model of rational debate that it has entailed, that has brought student activists to the attention of university administrators, state leaders, and law enforcement.

We should be clear that these students have been made the object of ire precisely because of their performative unruliness, because of their unwillingness to defer to the state’s authority to decide what constitutes acceptable speech. One thing often left unnoticed in celebrations of the freedoms afforded by liberal democracies is the role that the state plays in conditioning the specific kinds of autonomy that individuals are permitted to exercise. In other words, our autonomy to express opposition as we see fit is already much more intensively circumscribed than recent “free speech” advocates care to admit.

Representations of no-platforming in the media bring us to the heart of the matter here. Time and again, in critical commentary on the practice, the figure of the wild mob resurfaces, often counter-posed to the disciplined, individuated dignity of the accomplished orator:

[Person X] believes that he has an obligation to listen to the views of the students, to reflect upon them, and to either respond that he is persuaded or to articulate why he has a different view. Put another way, he believes that one respects students by engaging them in earnest dialogue. But many of the students believe that his responsibility is to hear their demands for an apology and to issue it. They see anything short of a confession of wrongdoing as unacceptable. In their view, one respects students by validating their subjective feelings. Notice that the student position allows no room for civil disagreement. Given this set of assumptions, perhaps it is no surprise that the students behave like bullies even as they see themselves as victims. (Friedersdorf 2015)

These remarks are exemplary of a certain elective affinity for a particular model citizen – a purportedly non-bullying parliamentarian agent or eloquent spokesperson who is able to establish an argument’s legitimacy with calm rationality. These lofty incarnations of “rational discourse” are routinely positioned as the preferred road to legitimate political influence. Although some concessions are made to the idea of “peaceful protest,” in the present climate even minimal appeals to the politics of collective resistance find themselves under administrative review (RT 2017). Meanwhile, champions of free speech quietly endorse specific kinds of expression. Some tones of voice, some placard messages, some placements of words and bodies are celebrated; others are reviled. In practice, the promotion of ostensibly “free” speech often just serves to idealize and define the parameters of acceptable public conduct.

No-platforming pushes back against these regulatory mechanisms. In keeping with longstanding tactics of subaltern struggle, its practice demonstrates that politics can be waged through a diversity of means, showing that alongside the individual and discursive propagation of one’s political views, communities can also act as collective agents, using their bodies and their capacity for self-organization to thwart the rise of political entities that threaten their wellbeing and survival. Those conversant with the history of workers’ movements will of course recognize the salience of such tactics. For they lie at the heart of emancipatory class politics, in the core realization that in standing together in defiance of state violence and centralized authority, disenfranchised communities can find ways to intervene in the unfolding of their fates, as they draw together in the unsanctioned shaping and shielding of their worlds.

It is telling that so much media reportage seems unable to identify with this history, greeting the renewed rise of collective student resistance with a combination of bafflement and recoil. The undercurrent of pearl-clutching disquiet that runs through such commentary might also be said to perform a subtle kind of rhetorical work, perhaps even priming readers to anticipate and accept the moment when police violence will be deployed to restore “order,” to break up the “mob,” and force individuals back onto the tracks that the state has ordained.

Yet this is not to say there is nothing new about this new wave of free speech struggles. Instead, they supply further evidence that longstanding strategies of collective resistance are being displaced out of the factory systems – where we still tend to look for them – and into what Joshua Clover refers to, following Marx, as the sphere of circulation, into the marketplaces and the public squares where commodities and opinions circulate in search of valorization and validation. Disenfranchised communities are adjusting to the debilitating political legacies of deindustrialization. As waves of automation have rendered workers unable to express their resistance through the slowdown or sabotage of the means of production, the obstinacy of the strike has been stripped down to its core. And as collective resistance to the centralized administration of social conduct now plays out beyond the factory’s walls, it increasingly takes on the character of public confrontation with the state. Iterations of this phenomenon play out in flashpoints as remote and diverse as Berkeley, Ferguson, and Standing Rock. And as new confrontations fall harder on the heels of the old, they make a spectacle of the deteriorating condition of the social contract.

If it seems odd to compare the actions of students at elite US universities and workers in the industrial factory systems of old, consider the extent to which students have themselves become increasingly subject to proletarianization and precarity – to indebtedness, to credit wages, and to job prospects that are at best uncertain. This transformation of the university system – from bastion of civil society and inculcator of elite modes of conduct, to frenetic producer of indebted precarious workers – helps to account for the apparent inversion of campus radicalism’s orientation to the institution of free speech.

Longtime observers will recall that the same West Coast campuses that have been key flashpoints in this wave of free speech controversies were once among the most ardent champions of the institution. Strange, then, that in today’s context the heirs to Mario Savio’s calls to anti-racist civil disobedience seem more prone to obstruct than to promote free speech events. Asked about Savio’s likely response to this trend, social scientist and biographer Robert Cohen finds that “Savio would almost certainly have disagreed with the faculty and students who urged the administration to ban Milo Yiannopoulos from speaking on campus, and been heartened by the chancellor’s refusal to ban a speaker” (Cohen 2017). The alt-right has delighted in trolling student radicals over this apparent break with tradition:

Milo Inc.’s first event will be a return to the town that erupted in riots when he was invited to speak earlier this year. In fact, Yiannopoulos said that he is planning a “week-long celebration of free speech” near U.C. Berkeley, where a speech by his fellow campus agitator, Ann Coulter, was recently canceled after threats of violence. It will culminate in his bestowing something called the Mario Savio Award for Free Speech. (The son of Savio, one of the leaders of Berkeley’s Free Speech movement during the mid-1960s, called the award “some kind of sick joke”.) (Nguyen 2017)

Yet had Milo named his free speech prize after Savio’s would-be mentor John Searle, then the logic of current events might have appeared a little more legible. For as Lisa Hofmann-Kuroda and Beezer de Martelly have recently reminded us, in the period between 1965 and 1967 when the Free Speech Movement (FSM) was emerging as the home of more militant forms of student resistance, the US government commissioned Searle to research the movement. The resulting publication would eventually come to serve “as a manual for university administrators on how to most efficiently dismantle radical student protests” (Hofmann-Kuroda and de Martelly 2017). One of the keys to Searle’s method was that it “encouraged students to focus on their own … abstract rights to free speech,” a move that was to “shift campus momentum away from Black labor struggles and toward forming a coalition between conservatives and liberals on the shared topic of free speech rights” (Hofmann-Kuroda and de Martelly 2017). Summing up the legacies of this history from today’s vantage, Hofmann-Kuroda and de Martelly remark:

In hindsight, it becomes clear that the “alt-right”’s current use of the free speech framework as a cover for the spread of genocidal politics is actually a logical extension of the FSM — not, as some leftists would have it, a co-optation of its originally “radical” intentions. In addition to the increasingly violent “free speech rallies” organized in what “alt-right” members have dubbed “The Battle for Berkeley,” the use of free speech as a legitimating platform for white supremacist politics has begun to spread throughout the country. (Hofmann-Kuroda and de Martelly 2017)

It is in relation to this institutional history that we might best interpret the alt-right’s use of free speech and the responses of the student left. For as Hofmann-Kuroda and de Martelly suggest, the alt-right’s key avatars such as Milo and Richard Spencer have now succeeded in building out the reach of Searle’s tactics. Their ambitions have extended beyond defusing social antagonisms and shoring up the prevailing status quo; indeed, in an eerie echo of Savio’s hopes for free speech, the alt-right now sees the institution as a site where dramatic social transformations can be triggered.

But why then is the alt-right apt to see opportunities in this foundational liberal democratic institution, while the student left is proving more prone to sabotage its smooth functioning? It certainly appears that Searle’s efforts to decouple free speech discourse and anti-racist struggle have been successful. Yet to grasp the overall stakes of these struggles it can be helpful to pull back from the abstract debates that Searle proved so adept in promoting, to make a broader assessment of prevailing socio-economic and climatic conditions.

For in mapping how the terrain has changed since the time of Savio and Searle, we might take account of the extent to which the universal summons to upward mobility, and the global promise of endless material and technological enfranchisement that defined the social experience of postwar modernization, have lately begun to ring rather hollow. Indeed, as we close in on the third decade of the new millennium, there seems to be no end to the world system’s economic woes in sight, and no beginning to its substantive reckoning with the problem of anthropogenic climate change.

In response, people are changing the way they orient themselves toward the centrist state. In another instance of his welcome and ongoing leftward drift, Bruno Latour argues that global politics are now defined by the blowback of a catastrophically failed modernization project:

The thing we share with these migrating peoples is that we are all deprived of land. We, the old Europeans, are deprived because there is no planet for globalization and we must now change the entire way we live; they, the future Europeans, are deprived because they have had to leave their old, devastated lands and will need to learn to change the entire way they live.

This is the new universe. The only alternative is to pretend that nothing has changed, to withdraw behind a wall, and to continue to promote, with eyes wide open, the dream of the “American way of life,” all the while knowing that billions of human beings will never benefit from it. (Latour 2017)

Apprehending the full ramifications of the failure of modernization will require us to undertake what the Club of Rome once referred to as a “Copernican revolution of the mind” (Club of Rome 1972: 196). And in many respects the alt-right has been quicker to begin this revolution than the technocratic guardians of the globalist order. In fact, it seems evident that the ethnonationalists look onto the same prospects as Latour, while prescribing precisely the opposite remedies. Meanwhile, guardians of the “center” remain all too content to repeat platitudinous echoes of Mill’s proceduralism, assuring us all that, evidence to the contrary notwithstanding, the market has the situation in invisible hand.

This larger historical frame is key to understanding campus radicalism’s turn to no-platforming, which seems to register – on the level of praxis – that the far right has capitalized far more rapidly on emergent conditions than the center or the left. In understanding why this has occurred, it is worth considering the relationship between the goals of the FSM and the socioeconomic conditions that prevailed in the late 1960s and early 1970s when the movement was at its peak.

For Savio and his anti-racist allies at the FSM, free speech afforded radicals both a platform from which to protest US imperialism with relative impunity, and an institutional lodestar by which to steer a course that veered away from the purges and paranoia of the Stalinist culture of command. It seemed that the institution itself served as a harbinger of a radicalized and “socialized” state, one that was capable of executing modernization initiatives that would benefit everyone.

The postwar program of universal uplift then seemed apt to roll out over the entire planet, transforming the earth’s surface into a patchwork of independent modern nation states all locked into the same experience of ongoing social and technological enfranchisement. In such a context Savio and other contemporary advocates of free speech saw the institution as a foreshadowing of the modern civil society into which all would eventually be welcomed as enfranchised bearers of rights. Student activism’s commitment to free speech thus typified the kind of statist radicalism that prevailed in the age of decolonization, a historical period when the postcolonial state seemed poised to socialize wealth, and when the prospect of postcolonial self-determination was apt to be all but synonymous with national modernization programs.

Yet in contrast to this expansive and incorporative modernizing ethos, the alt-right savior state is instead being modeled around avowedly expulsive and exclusionary initiatives. This is the state reimagined as a gated community writ large, one braced – with its walls, border camps, and guards – to resist the incursion of “alien” others, all fleeing the catastrophic effects of a failed postwar modernization project. While siphoning off natural wealth to the benefit of the enwalled few, this project has unleashed the ravages of climate change and the impassive violence of the border on the exposed many. The alt-right response to this situation is surprisingly consonant with the Pentagon’s current assessment, wherein the US military is marketed as a SWAT team serving at the dispensation of an urban super elite.

Given the lines along which military and official state policy now trend, it is probably a mistake to characterize far-right policy proposals as a wholesale departure from prevailing norms. Indeed, it seems quite evident that – as Latour remarks – the “enlightened elite” have known for some time that the advent of climate change has given the lie to the longstanding promises of the postwar reconstruction:

The enlightened elites soon started to pile up evidence suggesting that this state of affairs wasn’t going to last. But even once elites understood that the warning was accurate, they did not deduce from this undeniable truth that they would have to pay dearly.

Instead they drew two conclusions, both of which have now led to the election of a lord of misrule to the White House: Yes, this catastrophe needs to be paid for at a high price, but it’s the others who will pay, not us; we will continue to deny this undeniable truth. (Latour 2017)

From such vantages it can be hard to determine to what extent centrist policies actually diverge from those of the alt-right. For while they doggedly police the exercise of free expression, representatives of centrist orthodoxy often seem markedly less concerned with securing vulnerable peoples against exposure to the worst effects of climate change and de-development. In fact, it seems all too evident that the centrist establishment will more readily defend people’s right to describe the catastrophe in language of their own choosing than work to provide them with viable escape routes and lifelines.

Contemporary free speech struggles are ultimately conflicts over policy rather than ironic contests over theories of truth. For it has been in the guise of free speech advocacy that the alt-right has made the bulk of its initial gains, promoting its genocidal vision through ironic positional play, a “do it for the lolz” mode of summons that marshals the troops with a nod and a wink. It seems that in extending the logic of Searle’s work at Berkeley, the alt-right has thus managed to “hack” the institution of free speech, navigating it with such a deft touch that defenses of the institution are becoming increasingly synonymous with the mainstream legitimation of its political project.

Is it then so surprising that factions of the radical left are returning full circle to the foundationally anti-statist modes of collective resistance that defined radical politics at its inception? Here, Walter Benjamin’s concept of “the emergency brake” suggests itself, though we can adjust the metaphor a little to better grasp current conditions (Benjamin 2003: 401). For it is almost as if the student left has responded to a sense that the wheel of history had taken a sickening lurch rightward by shaking free of paralysis, by grabbing hold of the spokes and pushing back, greeting the overawing complexities of our geopolitical moment with local acts of defiance. It is in this defiant spirit that we might approach the free speech debates, arguing not for the implementation of draconian censorship mechanisms (if there must be a state, better that it is at least nominally committed to freedom of expression than not) but against docile submission to a violent social order, an order with which adherence to the doctrine of free speech is perfectly compatible. The central lesson that we might thus draw from the activities of Berkeley’s unruly students is that the time for compliant faith in the wisdom of our “guardians” is behind us (Stengers 2015: 30).

David Thomas is a Joseph-Armand Bombardier Canada Graduate Scholar in the Department of English at Carleton University. His thesis explores narrative culture in post-workerist Britain, and unfolds around the twin foci of class and climate change.

Bibliography

Benjamin, Walter. 2003. Selected Writings Volume 4: 1938 – 1940. Cambridge: Harvard University Press.

Clover, Joshua. 2016. Riot. Strike. Riot. London: Verso.

Cohen, Robert. 2017. “What Might Mario Savio Have Said About the Milo Protest at Berkeley?” Nation, February 7. www.thenation.com/article/what-might-mario-savio-have-said-about-the-milo-protest-at-berkeley/

Friedersdorf, Conor. 2015. “The New Intolerance of Student Activism.” Atlantic, November 9. www.theatlantic.com/politics/archive/2015/11/the-new-intolerance-of-student-activism-at-yale/414810/

Hofmann-Kuroda, Lisa, and Beezer de Martelly. 2017. “The Home of Free Speech™: A Critical Perspective on UC Berkeley’s Coalition With the Far-Right.” Truthout, May 17. www.truth-out.org/news/item/40608-the-home-of-free-speech-a-critical-perspective-on-uc-berkeley-s-coalition-with-the-far-right

Kingkade, Tyler. 2015. “Obama Thinks Students Should Stop Stifling Debate On Campus.” Huffington Post, September 9. [Updated February 2, 2017]: www.huffingtonpost.com/entry/obama-college-political-correctness_us_55f8431ee4b00e2cd5e80198

Latour, Bruno. 2017. “The New Climate.” Harper’s, May. harpers.org/archive/2017/05/the-new-climate/

“Right to Protest?: GOP State Lawmakers Push Back Against Public Dissent.” 2017. RT, February 4. www.rt.com/usa/376268-republicans-seek-outlaw-protest/

Nguyen, Tina. 2017. “Milo Yiannopoulos Is Starting a New, Ugly, For-Profit Troll Circus.” Vanity Fair, April 28. www.vanityfair.com/news/2017/04/milo-yiannopoulos-new-media-venture

Spivak, Gayatri. 2014. “Herald Exclusive: In conversation with Gayatri Spivak,” by Nazish Brohiup. Dawn, December 23. www.dawn.com/news/1152482

Stengers, Isabelle. 2015. In Catastrophic Times: Resisting the Coming Barbarism. Open Humanities Press. openhumanitiespress.org/books/download/Stengers 2015 In Catastrophic-Times.pdf

-

Arne De Boever — Realist Horror — Review of “Dead Pledges: Debt, Crisis, and Twenty-First-Century Culture”

by Arne De Boever

Review of Annie McClanahan, Dead Pledges: Debt, Crisis, and Twenty-First-Century Culture (Stanford: Stanford University Press, 2017)

This essay has been peer-reviewed by the boundary 2 editorial collective.

The Financial Turn

The financial crisis of 2007-8 has led to a veritable boom of finance novels, that subgenre of the novel that deals with “the economy”.[i] I am thinking of novels such as Jess Walter’s The Financial Lives of the Poets (2009), Jonathan Dee’s The Privileges (2010), Adam Haslett’s Union Atlantic (2010), Teddy Wayne’s Kapitoil (2010), Cristina Alger’s The Darlings (2012), John Lanchester’s Capital (2012), David Foster Wallace’s The Pale King (2012),[ii] Mohsin Hamid’s How To Get Filthy Rich in Rising Asia (2013), Nathaniel Rich’s Odds Against Tomorrow (2013), Meg Wolitzer’s The Interestings (2013), and those are only a few.

Literary criticism has followed suit. Annie McClanahan’s Dead Pledges: Debt, Crisis, and Twenty-First-Century Culture (published in the post-45 series edited by Kate Marshall and Loren Glass) studies some of those novels. It follows on the heels of Leigh Claire La Berge’s Scandals and Abstraction: Financial Fiction of the Long 1980s (2015) and Anna Kornbluh’s Realizing Capital: Financial and Psychic Economies in Victorian Form (2014), both of which deal with earlier instances of financial fiction. By 2014, McClanahan had already edited (with Hamilton Carroll) a “Fictions of Speculation” special issue of the Journal of American Studies. At the time of my writing, Alison Shonkwiler’s The Financial Imaginary: Economic Mystification and the Limits of Realist Fiction has just appeared, and no doubt, many more will follow. In the Coda to her book, La Berge mentions that scholars are beginning to talk about the “critical studies of finance” as a way of bringing these developments together into a thriving field.

Importantly, Dead Pledges looks not only at novels but also at poetry, conceptual art, photography, and film. Indeed, the “financial turn” involves more than fiction: J.C. Chandor’s Margin Call (2011), Costa-Gavras’ Capital (2012), Martin Scorsese’s The Wolf of Wall Street (2013), and Adam McKay’s The Big Short (2015) were all released in the aftermath of the 2007-8 crisis. American Psycho, the musical, premiered in London in 2013 and moved on to New York in 2016.

All of this contemporary work builds on and explicitly references earlier instances of thinking and writing about the economy, so it is not as if this interest in the economy is anything new. However, given the finance novel’s particular name, one could argue that while the genre of the finance novel (understood more broadly as any novel about the economy) precedes the present, it is only during the financial era, which began in the early 1970s, and especially since the financial crisis of 2007-8, that it has truly come into its own. For the specific challenge that is now set before the finance novel is precisely to render the historic formation of “finance” into fiction. Critics have noted that such a rendering cannot be taken for granted. While capitalism has traditionally been associated with the realist novel (as La Berge and Shonkwiler point out at the outset of their edited collection Reading Capitalist Realism[iii]), literary scholars consider that capitalism’s intensification into financial or finance capitalism, or finance tout court, also intensifies the challenge to realism that some had already associated with global capitalism.[iv] Abstract and complex, finance exceeds what Julia Breitbach has observed to be some of the key characteristics of realism: “narration”, associated with “readable plots and recognizable characters”; “communication”, allowing “the reader to create meaning and closure”; “reference”, or “language that can refer to external realities, that is, to ‘the world out there’”; and “ethics”, “a return to commitment and empathy”.[v]

In the late 1980s, and just before the October 19th, 1987 “Black Monday” stock market crash, Tom Wolfe may still have thought that to represent finance, one merely had to flex one’s epistemological muscle: all novelists had to do, Wolfe wrote, was report, bringing “the billion-footed beast of reality” to terms.[vi] However, by the time Bret Easton Ellis’s American Psycho comes around, that novel presents itself as an explicit response to Wolfe,[vii] proposing a financial surrealism or what could perhaps be called a “psychotic realism” (Antonio Scurati) to capture the lives that finance produces. If (as per a famous analysis) late capitalism’s aesthetic was not so much realist as postmodernist, late late capitalism or just-in-time capitalism has only intensified those developments, leading some to propose post-postmodernism as the next phase in this contemporary history.[viii]

At the same time, realism seems to have largely survived the postmodernist and post-postmodernist onslaughts: in fact, it too has been experiencing a revival,[ix] and one that is visible in, and in some cases dramatized in, the contemporary finance novel (which thereby exceeds the kind of financial realism that Wolfe proposes). Indeed, one reason for this revival could be that in the aftermath of the financial crisis, novelists have precisely sought to render abstract and complex finance legible, and comprehensible, through literature—to bring a realism to the abstract and complex world of finance.

Given realism’s close association with capitalism, and its post- and post-postmodern crisis under late capitalism and finance, none of this should come as a surprise. Rather, it means that critics can consider the finance novel in its various historical articulations as a privileged site to test realism’s limits and limitations.

Finance, Credit, Mortgage

If Karl Marx’s celebrated formula of capital—M-C-M’, with money leading to money that is worth more via the intermediary of the commodity—is quasi-identified with the realist novel, the formula’s shortened, financial variation—M-M’, money leading to money that is worth more without the intermediary of the commodity[x]—has come to mark its challenges. Perhaps in part reflecting this narrative (though this is not explicitly stated in the book), Dead Pledges’ study of the cultural representations of finance starts with a discussion of the realist novel but quickly moves away from it in order to look elsewhere in search of representations of finance.

McClanahan’s case-studies concern the early twenty-first century, specifically the aftermath of the 2007-8 crisis. However, the historical-theoretical framework of Dead Pledges focuses on credit and debt. It extends some 40 years before that, to the early 1970s and the transformations of the economy that were set in motion then. Dead Pledges thus takes up the history of financialization, which is usually dated back to that time. Neoliberalism, which is sometimes confused with finance and shares some of its history, comes up a few times in the book’s pages but is not a key term in the analysis.

One could bring in various reasons for the periodization that McClanahan adopts, including, though with some important caveats, the Nixon administration’s unilateral decision in 1971 to abolish the gold standard, thus ultimately ending the Bretton Woods international exchange agreements that had been in place since World War Two and propelling the international markets into the so-called “Nixon shock.” However, in his key text “Culture and Finance Capital” Fredric Jameson already warned against the false suggestion of solidity and tangibility that such a reference to the gold standard (which was really “an artificial and contradictory system in its own right”, as Jameson points out[xi]) might bring. Certainly for McClanahan, who focuses on credit and debt and is not that interested in money, it would make sense to abandon so-called commodity theories of money and fiat theories of money (which have proposed that the origins of money lie in the exchange of goods or a sovereign fiat) for the credit or debt theory of money, whose revisionist proponents, for example David Graeber and Felix Martin,[xii] have exposed those other theories’ limitations. Indeed, McClanahan’s book explicitly mentions Graeber and other contemporary theorists of credit and debt (Richard Dienst, Maurizio Lazzarato, Angela Mitropoulos, Fred Moten and Stefano Harney, Miranda Joseph, Andrew Ross) as companion thinkers, even if none of those writers is engaged in any detail in the book.

Since the 1970s, consumer debt has exploded in the United States, and Dead Pledges ultimately zooms in on a particular form of credit and debt, namely the home mortgage. McClanahan inherits this focus from the collapse of the home mortgage market, which triggered the 2007-8 crisis. McClanahan rehearses the history, and the complicated technical history, of this collapse at various moments throughout the book. Although this history is likely more or less familiar to readers, the repetition of its technical detail (from various angles, depending on the focus of each of McClanahan’s chapters) is welcome. As McClanahan points out, home mortgages used to be “deposit-financed” (6). While there was always a certain amount of what Marx in Capital: Vol. 3 called “fictitious capital”[xiii] (“fiktives Kapital”) in play, since banks can write out more mortgages than they actually have money for on the strength of creditworthy borrowers’ promises to repay with interest, the amount of fictitious capital has increased exponentially since the 1970s. More and more frequently, mortgages are being funded not through deposits but “through the sale of speculative financial instruments” (6), that is, through the sale of a borrower’s promise to repay. This development is enabled by the practice of securitization: many mortgages are pooled together, and the pool is divided into slices, called tranches, which are then sold as financial instruments such as mortgage-backed securities (MBS) or collateralized debt obligations (CDOs). These kinds of instruments, so-called derivatives, are the hallmark of what in Giovanni Arrighi’s terms we can understand as the phase of capitalism’s financial expansion (see 14). This refers to an economic cycle during which value is produced not so much through the making of commodities as through value’s “extraction” (as Saskia Sassen puts it[xiv]) beyond what can be realized in the commodity: in this particular case, through the creation and especially the circulation of bundles of mortgages.

As McClanahan explains, securitization is about “creating a secondary market” (6) for the sale of debt. The value of those kinds of debt-backed “commodities” (if we can still call them that) does not so much come from what they are worth as products; indeed, their value is dubious since, for example, the already mentioned tranches will include both triple-A-rated mortgages (mortgages with a low risk of default) and subprime mortgages (like the infamous NINJA mortgages that were granted to people with No Income, No Jobs, No Assets). Nevertheless, those MBSs or CDOs often still received a high rating, based on the flawed idea that the risk of value-loss was lessened by mixing the low-risk mortgages with the high-risk mortgages. What seems to have mattered most was not so much the value of an MBS or CDO as a product but its circulation, which is the mode of value-generation that Jasper Bernes, among others, has deemed to be central to the financial era. Ultimately, while these instruments brought the global financial system to the edge of collapse, they also generated extreme value for those who shorted them. And shorted them big, as Adam McKay’s The Big Short would have it (Paramount, 2015; based on Michael Lewis’ 2010 book of the same title). By betting against them, the protagonists of Lewis’ and McKay’s story made an immense profit while everyone else suffered catastrophic losses.

“Dematerialization” alone and cognate understandings of finance as “performative” and “linguistic”[xv]—in other words, this story as it could be told using the abolition of the gold standard as the central point of reference—cannot tell the whole truth here, especially not since credit and debt can actually be found at the origin of money. However, through those historico-economic developments of credit and debt there emerges a transformed role of credit and debt in our societies, from a “form of exchange that reinforces social cohesion” (185) to “a regime of securitization and exploitable risk, of expropriation and eviction” (182). Dematerialization—or perhaps better, various rematerializations: for example from gold or real estate to securitized mortgage debt—is important but without the material specifics of the history that McClanahan recounts, it does not tell us all that much.

Echoing David Harvey’s description of the need for “new markets for [capital’s] goods and less expensive labor to produce them” as a “spatial fix” (Harvey qtd. 12), McClanahan reads the history summarized above as a “temporal fix” because “it allows capital to treat an anticipated realization of value as if it had already happened” (13). In 2007-8, of course, that fix turned out to be an epic fuck-up. McClanahan recalls Arrighi’s periodization (after Fernand Braudel) of capitalism as alternating “between epochs of material expansion (investment in production and manufacturing) and phases of financial expansion (investment in stock and capital markets)” (14) and notes that the 2007-8 crisis seems to have marked the end of the phase of financial expansion.

In Arrighi’s view, that would mean the time has come for the emergence of a new superpower, one that will step in for the U.S. as the global hegemon. A return of American (U.S.) greatness through a return to an era of material expansion (as the current U.S. President Donald J. Trump is proposing) appears unlikely within this framework: at best, it will have some short-lived, anachronistic success before the new hegemon arrives. However, will that new hegemon arrive? According to some, and McClanahan appears to align herself with those, the current crisis of the system “will not lead to the emergence of a new regime of capitalist accumulation under a different imperial superpower” (15). “Instead, it heralds something akin to a ‘terminal crisis’ in which no renewal of capital profitability is possible” (15). Does this then lead to an eternal winter, as Joshua Clover already asked?[xvi] Alternatively, are we finally done with those phases, and ready for something new?

The Novel: Scale and Character

If all of this has been theoretical so far, Dead Pledges’ four chapters stand out first as nuanced readings of works of contemporary culture. As McClanahan sees it, culture is the best site to understand debt as a “ubiquitous yet elusive social form” (2). By that, she does not mean we should forget about economic textbooks; but to understand debt as a “social form”, culture is the go-to place. McClanahan’s inquiry starts out traditionally, with a chapter about the contemporary realist novel. In it, she takes on behavioral economics, a subfield of microeconomics. Unlike macroeconomics, microeconomics focuses on individual human decisions. Whereas microeconomic models tend to assume rational agents, behavioralism does not: non-rational human decisions might cause or result from a market crisis.

What caused the 2007-8 crisis? There are multiple answers, and McClanahan shows that they are in tension with one another. One answer, the macroeconomic one, is that the crisis was the result of an abstract and complex financial system that caved in on itself. Such an explanation tends to avoid individual responsibility. On the other hand, microeconomics, and behavioralism in particular, blames the crisis on the bad decisions of a few individuals, which exculpates institutions. This seemed to be the dominant mode of explanation. In this explanation too, however, the buck seemed to stop nowhere: how many bankers went to jail for the catastrophic market losses they caused? This leads to a larger question: how should one negotiate, in economics, between the macro and the micro, between the individual and the system? How should one assign blame, enforce accountability? How should one regulate? How should one even think, and represent, the connections between systems and individuals?

One cultural form that has been particularly good at this negotiation is the novel, which tends to tell a macro-story through its representation of the micro, and so seeks “to capture the reality of a structural, even impersonal, economic and social whole” (24) while also considering “individual investors’ ‘personal impulses’” (31). This is what McClanahan finds in Martha McPhee’s Dear Money (2010), Adam Haslett’s Union Atlantic, and Jonathan Dee’s The Privileges. These novels marry the macro- and the micro-economical; they accomplish what McClanahan presents as a scalar negotiation. However, one should note that in doing so, they keep the behavioralist model intact, for they suggest that individual bad decisions lie at the origin of macroeconomic events. McClanahan shows, however, that Dear Money, Union Atlantic, and The Privileges, as novels, take on that behavioralist remainder, namely the novel’s characteristic “focus on subjective experience and the meaningfulness of being a subject” (33), through their awareness of their place in the genealogy of the novel. McClanahan’s readings ultimately reveal that the novels she looks at cannot save the individual from what she terms “a kind of ontological attenuation or even annulment” (33) that comes with their account of the 2007-8 crisis. Out go the full characters of the realist novel. The crisis demands it.

What is left? The chapter culminates in a reading of Dee’s novel in which McClanahan cleverly suggests that the novel explores “the formal limits of sympathetic identification” and tells “money’s” story rather than the story of Adam and Cynthia “Morey” (51), who are the novel’s main characters. Thus, the novel is not so much about behavioralist psychology but about money itself. Capital is remade in the novel, McClanahan argues, “in the image of the human” (52), creating the uncanny effect of human beings who are merely stand-ins for money. Adam Morey/Money “has no agency, and he is all automaton, no autonomy. He has no interiority” (53). McClanahan does not note that this description places Adam in line with American Psycho’s “automated teller”[xvii] Patrick Bateman, who in a famous passage observes that while

there is an idea of a Patrick Bateman, some kind of abstraction, … there is no real me, only an entity, something illusory, and though I can hide my cold gaze and you can shake my hand and feel flesh gripping yours and maybe you can even sense our lifestyles are probably comparable: I simply am not there.[xviii]

Like Bateman’s narrative voice, which echoes the abstraction of finance, The Privileges’ voice is that of “investment itself” (52), which swallows human beings up whole.

If the neoliberal novel, as per Walter Benn Michaels’ analysis (from which McClanahan quotes; 53) reduces society to individuals (and possibly their families, following Maggie Thatcher’s claim), The Privileges as a finance novel goes beyond that and “liquidat[es]” (53) individuals themselves. We are encountering here the terminal crisis of character that writes, in the guise of the realist novel, our financial present. Rich characterization is out. The poor character is the mark of financial fiction.

Yet, such depersonalization does not capture the full dynamic of financialization either. In Chapter 2, McClanahan draws this out through a discussion of the credit score and its relation to contemporary literature. Although one’s credit score is supposed to be objective, the fact that one can receive different credit scores from different agencies demonstrates that an instability haunts it—and resubjectifies, if not repersonalizes, it. McClanahan starts out with a reading of an ad campaign for a website selling credit reports that quite literally personalizes the scores one can receive. It probably comes as no surprise that one’s ideal score is personalized as a white, tall, and fit young man; the bad score is represented by a short balding guy with a paunch. He also wears a threatening hockey mask.

McClanahan suggests that what structures the difference here between the objective and the subjective, the impersonal and the personalized, is the difference between neutral credit and morally shameful debt. The former is objective and impersonal; the latter is subjective and personalized. The problem with this distinction, however, is not only that the supposedly objective credit easily lets the subjective slip back in (as is evident from the ad campaign McClanahan discusses); discussions of subjective debt also often lack quantitative and material evidence (when they ignore, for example, “the return in debt collection to material coercion rather than moral persuasion”; 57). Since debt always operates on both sides, McClanahan does not show how the personal can become “a corrective for credit’s impersonality” or how “objectivity [can become] a solution to the problem of debt’s personalization” (57); instead, she considers how contemporary literature and conceptual art have turned those issues into “a compelling set of questions to be pursued” (57).

If in the finance novel rich characterization is out, a question arises: what alternatives emerge for characterization at the crossroads of “credit, debt, and personhood” (57)? As McClanahan points out, this question has a history, since “the practice of credit evaluation borrowed the realist novel’s ways of describing fictional persons as well as the formal habits of reading and interpretation the novel demanded” (59). The relation went both ways: “the realist novel drew on the credit economy’s models of typification … to produce socially legible characters” (59). Because “quantitative or systematized instruments for evaluating the fiscal soundness” of borrowers were absent, creditors used to rely “on subjective evaluations of personal character” to assess “a borrower’s economic riskiness” (59). Such evaluations used to take a narrative form; in other words, the credit report used to be a story. It provided a level of detail in characterization that readers of literature would know how to interpret. The novel—the information it provided, the interpretation it required—was the model for the credit report.

Enter the quantitative revolution: in the early 1970s the credit report becomes a credit score, the result of “an empirical technique that uses statistical methodology to predict the probability of repayment by credit applicants” (63). Narrative and character go out the window; the algorithmically generated score is all that counts. It is the end of the person in credit. As McClanahan is quick to point out, however, the credit score nevertheless cannot quite leave the person behind, as the “creditworthiness” that the credit score sums up ultimately “remains a quality of individuals rather than of data” (65). Therefore, the person inevitably slips back in, leading for example to the behavioralist models that McClanahan discusses in Chapter 1. Persons become numbers, but only to inevitably return as persons. McClanahan’s reading of the credit score negotiates this interchange.

One can find some of this in Gary Shteyngart’s Super Sad True Love Story (2010). If critics have faulted the novel for its caricatures and stereotypes, which “[decline] the conventions of characterization associated with the realist novel” (68), McClanahan argues that Shteyngart’s characters are in fact “emblematic of the contemporary regime of credit scoring” (68). Shteyngart’s use of caricature “captures the creation of excessively particular data-persons”; his “use of stereotype registers the paradox by which a contemporary credit economy also reifies generalized social categories” (71). While the credit score supposedly does not “discriminate by race, gender, age, or class” (71), in fact it does. McClanahan relies in part on Frank Pasquale’s important work in The Black Box Society to note that credit scoring systematizes bias “in hidden ways” (Pasquale qtd. 72), hidden because black-boxed. This leads McClanahan back to the ad campaign with which she opened her chapter, now noting “its racialization” (72). The chapter closes with a discussion of how conceptual art and conceptual writing about credit and debt have negotiated the issue of personalization (and impersonalization). If “the personal” in Chapter 1 was associated first and foremost with microeconomics and behavioralism (which McClanahan criticizes), McClanahan shows that it can also do “radical work” (77) in response to credit’s impersonalization as “a simultaneously expanded and denaturalized category … representing social relations and collective subjects as if they were speaking persons and thus setting into motion a complex dialectic between the personal and the impersonal” (77). She does this through a discussion of the work of conceptual artist Cassie Thornton and the photographs of the “We are the 99%” Tumblr. Mathew Timmons’ work of conceptual writing CREDIT, on the other hand, plays with the impersonal to “provide an account of what constitutes the personal in the contemporary credit economy” (89).

Although McClanahan does not explicitly state this, I read the arc of her Chapters 1 and 2 as a recuperation of the personal from its negative role in behavioralism (as well as its naturalized, racist role in the credit scoring that is discussed in Chapter 2), and more broadly from microeconomics. Following Thornton in particular (whose art also features on the cover of Dead Pledges), McClanahan opens up the personal onto the macro of the social and the collective. In Dead Pledges, the novel and especially the realist novel turn out to be productive sites to pursue such a project due to the scalar negotiation and rich characterization that are typical of the genre, both of which come under pressure in the credit-crisis novel. If the novel gradually disappears from Dead Pledges to give way to photography and film in Chapters 3 and 4, the concern with realism remains. Indeed, McClanahan’s book ultimately seems to want to tease out a realism of the credit-crisis era, and it is that project to which I now turn.

Foreclosure Photography and Horror Films

In Chapters 3 and 4, once the novel is out of the way, McClanahan’s brilliance as a cultural studies scholar finally shines. Dead Pledges’ third chapter looks at post-crisis photography and “foreclosure photography” in particular. The term refers to photography of foreclosed homes but also evokes the practice of photography itself, which depends on a shutter mechanism that closes (or rather opens very quickly) in order to capture a reality. This signals a complicity between foreclosure and photography that McClanahan’s chapter explores, for example in a discussion of photographs of forced eviction by John Moore and Anthony Suau, which allow McClanahan to draw out the complicities between photography and the police—but not just the police. She notes, for example, that “[t]he photographer’s presence on the scene is underwritten by the capacity of both the state and the bank to violate individual privacy” (114). Dead Pledges ties that violation of individual privacy to a broader cultural development towards what McClanahan provocatively calls “unhousing” (115), evident for example in how various TV shows allow the camera to enter the private sanctuary of the home to show how people live. Here, “the sanctity of domestic space [is defended] precisely by violating it” (115). In parallel, “sacred” real estate, once prized for the financial security of domestic property, has been transformed—violated—by the camera seeking to record foreclosure. The home now represents precarity. This transformation was driven by the creation of mortgage-backed securities, which turned real estate into liquidity and the home into an uncanny abode.

The chapter begins with a comparative discussion of photographs in which the home is “rendered ‘feral’—overrun by nature” (103). McClanahan considers the narratives that such photography evokes: one is that of the disintegration of civilization into a lawless zone of barbarism, the story of the home gone wild. Looking at the mobilization of this narrative in representations of Detroit, she discusses its biopolitical, racial dimensions. Often the economic hardship that the photographs document is presented as something that happens to other people. But debt today is everywhere; in other words, the “othering” of the harm it produces (its location “elsewhere”) has become impossible. So even though the photographs McClanahan discusses “represent the feral houses of the crisis as the signs of racial or economic Otherness, these photographs ultimately reveal that indebtedness is a condition more widely shared than ever before, a condition that can no longer be banished to the margins of either national space or of collective consciousness” (113). It is us, all of us.

The last two sections of the chapter deal with the uncanny aspects of foreclosure photography: with the foreclosed home as the haunted home, and with the uncanny architectural landscape as the flipside of the financial phase that was supposed to “surmount” (135) the crisis of industrial production but actually merely provided a temporal fix for it. Ghost cities in China (cities without subjects, cities whose assets have never been realized, marking the failed anticipation of credit itself) represent the terminal crisis of capital. The uncanny, in fact, becomes a key theoretical focus of this chapter and sets up the discussion of horror films in the next: real estate (in other words, the familiar and secure) becomes the site where the foreign and unstable emerges, and as such the uncanny becomes a perfect concept for McClanahan to discuss the home mortgage crisis.

Far from being real estate, the house, and in particular the mortgaged home, is haunted by debt; so-called “homeowners” are haunted by the fact that the bank actually “owns” their home. Property is thus rendered unstable and comes to be perceived as a real from which we have become alienated. In McClanahan’s vision, it even becomes a hostile entity (see 127). At stake here is ultimately not just the notion of property, but a criticism of property and “the inhospitable forms of domestic life produced by it” (105), an undermining of property (and with it a certain kind of “family”) as the cornerstone of liberalism. If McClanahan is critical of our era’s sanctification of the private through a culture of unhousing, her response is not to make the case for housing but rather to use unhousing to expose the fundamental uncanniness of property. With that comes the profanation (as opposed to the sanctification) of the private (as a criticism of inhospitable forms of domestic life). The domestic is not sacred. Property is not secure. Time to get out of the fortress of the house and the violence it produces. If the housing crisis has produced the precarization of the house, let us use it to reinvent domestic life.

Given the horror film’s long-standing relationship with real estate (think of the haunted house), it was only a matter of time before the 2007–8 crisis appeared in contemporary horror films. And indeed, in the films that McClanahan looks at, it does appear, as “explicit content” (151). One has to appreciate here McClanahan’s “vulgar” approach: she is interested in the ways in which the horror films she studies “speak explicitly to the relationship between speculation, gentrification, and the ‘opportunities’ presented to investors by foreclosure” (151). Unlike, for example, American Psycho, which borrows a thing or two from the horror aesthetic, McClanahan’s horror flicks do not shy away from the nuts and bolts of finance; instead, they “almost [obsessively include] figures and terminology of the speculative economy in real estate” (151). This leads McClanahan to suggest that as horror films, they have “all the power of reportage”: they offer “a systematic account rendered with all the explicit mimetic detail one would expect of a realist novel” (151). At the same time, they do not do the kind of reporting Tom Wolfe once advocated: indeed, “they draw on the particular, uncanny capacity of the horror genre to defamiliarize, to turn ideological comfort into embodied fear” (151). McClanahan emphasizes, with a nod to Jameson (and his appropriation of Lévi-Strauss’ account of myth[xix]), that this is not just a performance of the “social contradictions” that always haunt narrative’s “imaginary solutions” (151). Instead, the films “oscillate between the imagined and the real or between ‘true stories’ and ‘crazy’ nightmares” (151). There are contradictions here at the level of both form and content (both representational and material, McClanahan writes), and they remain without resolution. The credit-crisis era requires this sort of realism.

Darren Lynn Bousman’s Mother’s Day (Anchor Bay, 2010), for example, a remake of Charles Kaufman’s 1980 film, oscillates between competing understandings of property: “as labor and sentimental attachment”; “as nontransferable value and the site of hospitality”; “as temporal and personal”; “as primarily a matter of contingent need” (157). If those all contradict each other, McClanahan points out that what they have in common is that “they are all incompatible with the contemporary treatment of the house as fungible property and liquid investment” (157). Upkeep, sentimental investment, and use all become meaningless when a hedge fund buys up large quantities of foreclosed homes to profit from renting them out. Such a development marks the end of “ownership society ideology in the wake of the crisis” (158). Like Crawlspace (Karz/Vuguru, 2013), another film McClanahan discusses, Mother’s Day reveals a strong interest in the home as fixed asset, and in the changes that this asset has undergone due to securitization. Indeed, the two other films that McClanahan looks at, Drag Me to Hell (Universal, 2009) and Dream Home (Edko, 2010), are “more specifically interested in real estate as a speculative asset and in the transformation of uncertainty into risk” (161-2).

By the time Dream Home ends with an explicit reference, from its Hong Kong setting, to “America’s subprime mortgage crisis” (170), it is hard not to be entirely convinced that with the horror film, McClanahan has uncovered the perfect genre and medium for the study of the representation of the home mortgage crisis. It is here that realism undergoes its most effective transformation into a kind of horrific realism or what I propose to call realist horror, an aesthetic that, like so much else when it comes to finance, cannot be easily located but instead oscillates between different realms. Indeed, if Dream Home provides key insights into the home mortgage crisis in the U.S., it is worth noting that it does so from its Chinese setting, which McClanahan takes to indicate that many of the changes that happened as part of financialization from the 1970s to the present in the U.S. in fact “occurred first in Asia” (174). This opens up the American (U.S.) focus of McClanahan’s book onto the rest of the world, raising some questions about the scope of the situation that Dead Pledges analyzes: how global is the gloomy, even horrific picture that McClanahan’s book paints? This seems particularly important when it comes to imagining, as McClanahan does in the final part of her book, political responses to debt.

Debt and the Revolution