
This talk was delivered at Virginia Commonwealth University today as part of a seminar co-sponsored by the Departments of English and Sociology and the Media, Art, and Text PhD Program. The slides are also available here.

Thank you very much for inviting me here to speak today. I’m particularly pleased to be speaking to those from Sociology and those from English and those from the Media, Art, and Text departments, and I hope my talk can walk the line between and among disciplines and methods – or piss everyone off in equal measure. Either way.

This is the last public talk I’ll deliver in 2016, and I confess I am relieved (I am exhausted!) as well as honored to be here. But when I finish this talk, my work for the year isn’t done. No rest for the wicked – ever, but particularly in the freelance economy.

As I have done for the past six years, I will spend the rest of November and December publishing my review of what I deem the “Top Ed-Tech Trends” of the year. It’s an intense research project that usually tops out at about 75,000 words, written over the course of four to six weeks. I pick ten trends and themes in order to look closely at the recent past, the near-term history of education technology. Because of the amount of information that is published about ed-tech – the amount of information, its irrelevance, its incoherence, its lack of context – it can be quite challenging to keep up with what is really happening in ed-tech. And just as importantly, what is not happening.

So that’s what I try to do. And I’ll boast right here – no shame in that – no one else does as in-depth or thorough a job as I do, certainly no one who is entirely independent from venture capital, corporate or institutional backing, or philanthropic funding. (Of course, if you look for those education technology writers who are independent from venture capital, corporate or institutional backing, or philanthropic funding, there is pretty much only me.)

The stories that I write about the “Top Ed-Tech Trends” are the antithesis of most articles you’ll see about education technology that invoke “top” and “trends.” For me, still framing my work that way – “top trends” – is a purposeful rhetorical move to shed light, to subvert, to offer a sly commentary of sorts on the shallowness of what passes as journalism, criticism, analysis. I’m not interested in making quickly thrown-together lists and bullet points. I’m not interested in publishing clickbait. I am interested nevertheless in the stories – shallow or sweeping – that we tell and spread about technology and education technology, about the future of education technology, about our technological future.

Let me be clear, I am not a futurist – even though I’m often described as “ed-tech’s Cassandra.” The tagline of my website is “the history of the future of education,” and I’m much more interested in chronicling the predictions that others make, have made about the future of education than I am in writing predictions of my own.

One of my favorites: “Books will soon be obsolete in schools,” Thomas Edison said in 1913. Any day now. Any day now.

Here are a couple of more recent predictions:

“In fifty years, there will be only ten institutions in the world delivering higher education and Udacity has a shot at being one of them.” – that’s Sebastian Thrun, best known perhaps for his work at Google on the self-driving car and as a co-founder of the MOOC (massive open online course) startup Udacity. The quotation is from 2012.

And from 2013, by Harvard Business School professor, author of the book The Innovator’s Dilemma, and popularizer of the phrase “disruptive innovation,” Clayton Christensen: “In fifteen years from now, half of US universities may be in bankruptcy. In the end I’m excited to see that happen. So pray for Harvard Business School if you wouldn’t mind.”

Pray for Harvard Business School. No. I don’t think so.

Both of these predictions are fantasy. Nightmarish, yes. But fantasy. Fantasy about a future of education. It’s a powerful story, but not a prediction made based on data or modeling or quantitative research into the growing (or shrinking) higher education sector. Indeed, according to the latest statistics from the Department of Education – now granted, this is from the 2012–2013 academic year – there are 4726 degree-granting postsecondary institutions in the United States. A 46% increase since 1980. There are, according to another source (non-governmental and less reliable, I think), over 25,000 universities in the world. This number is increasing year-over-year as well. So to predict that the vast vast majority of these schools (save Harvard, of course) will go away in the next decade or so or that they’ll be bankrupt or replaced by Silicon Valley’s version of online training is simply wishful thinking – dangerous, wishful thinking from two prominent figures who will benefit greatly if this particular fantasy comes true (and not just because they’ll get to claim that they predicted this future).

Here’s my “take home” point: if you repeat this fantasy, these predictions often enough, if you repeat it in front of powerful investors, university administrators, politicians, journalists, then the fantasy becomes factualized. (Not factual. Not true. But “truthy,” to borrow from Stephen Colbert’s notion of “truthiness.”) So you repeat the fantasy in order to direct and to control the future. Because this is key: the fantasy then becomes the basis for decision-making.

Fantasy. Fortune-telling. Or as capitalism prefers to call it “market research.”

“Market research” involves fantastic stories of future markets. These predictions are often accompanied by a press release touting the size that this or that market will soon grow to – how many billions of dollars schools will spend on computers by 2020, how many billions of dollars of virtual reality gear schools will buy by 2025, how many billions of dollars schools will spend on robot tutors by 2030, how many billions of dollars companies will spend on online training by 2035, how big the coding bootcamp market will be by 2040, and so on. The markets, according to the press releases, are always growing. Fantasy.

In 2011, the analyst firm Gartner predicted that annual tablet shipments would exceed 300 million units by 2015. Half of those, the firm said, would be iPads. IDC estimates that the total number of shipments in 2015 was actually around 207 million units. Apple sold just 50 million iPads. That’s not even the best worst Gartner prediction. In October of 2006, Gartner said that Apple’s “best bet for long-term success is to quit the hardware business and license the Mac to Dell.” Less than three months later, Apple introduced the iPhone. The very next day, Apple shares hit $97.80, an all-time high for the company. By 2012 – yes, thanks to its hardware business – Apple’s stock had risen to the point that the company was worth a record-breaking $624 billion.
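To put a rough number on how far off that tablet forecast was, here is a minimal sketch of the arithmetic. The figures are the ones quoted above; the function name `overshoot_pct` is only an illustrative label. Gartner said shipments would "exceed" 300 million, so treating 300 million as the forecast understates the miss, if anything.

```python
def overshoot_pct(forecast, actual):
    """Percentage by which a forecast exceeded the actual figure."""
    return (forecast - actual) / actual * 100


# All figures are in millions of units, as quoted in the talk.
tablets_forecast = 300                   # Gartner's 2011 floor for 2015 shipments
tablets_actual = 207                     # IDC's estimate for 2015
ipads_forecast = tablets_forecast / 2    # "half of those" would be iPads
ipads_actual = 50                        # iPads actually sold

print(f"Tablets: forecast overshot by {overshoot_pct(tablets_forecast, tablets_actual):.0f}%")
print(f"iPads:   forecast overshot by {overshoot_pct(ipads_forecast, ipads_actual):.0f}%")
```

On these numbers the tablet forecast ran roughly 45% too high, and the iPad forecast was three times what Apple actually sold.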

But somehow, folks – including many, many in education and education technology – still pay attention to Gartner. They still pay Gartner a lot of money for consulting and forecasting services.

People find comfort in these predictions, in these fantasies. Why?

Gartner is perhaps best known for its “Hype Cycle,” a proprietary graphic presentation that claims to show how emerging technologies will be adopted.

According to Gartner, technologies go through five stages: first, there is a “technology trigger.” As the new technology emerges, a lot of attention is paid to it in the press. Eventually it reaches the second stage: the “peak of inflated expectations.” So many promises have been made about this technological breakthrough. Then, the third stage: the “trough of disillusionment.” Interest wanes. Experiments fail. Promises are broken. As the technology matures, the hype picks up again, more slowly – this is the “slope of enlightenment.” Eventually the new technology becomes mainstream – the “plateau of productivity.”

It’s not that hard to identify significant problems with the Hype Cycle, the least of which is that it’s not a cycle. It’s a curve. It’s not a particularly scientific model. It demands that technologies always move forward along it.

Gartner says its methodology is proprietary – which is code for “hidden from scrutiny.” Gartner says, rather vaguely, that it relies on scenarios and surveys and pattern recognition to place technologies on the line. But most of the time when Gartner uses the word “methodology,” it is trying to signify “science,” and what it really means is “expensive reports you should buy to help you make better business decisions.”

Can it really help you make better business decisions? It’s just a curve with some technologies plotted along it. The Hype Cycle doesn’t help explain why technologies move from one stage to another. It doesn’t account for technological precursors – new technologies rarely appear out of nowhere – or political or social changes that might prompt or preclude adoption. And in the end it is simply too optimistic, unreasonably so, I’d argue. No matter how dumb or useless a new technology is, according to the Hype Cycle at least, it will eventually become widely adopted. Where would you plot the Segway, for example? (In 2008, ever hopeful, Gartner insisted that “This thing certainly isn’t dead and maybe it will yet blossom.” Maybe it will, Gartner. Maybe it will.)

And maybe this gets to the heart of why I’m not a futurist. I don’t share this belief in an increasingly technological future; I don’t believe that more technology means the world gets “more better.” I don’t believe that more technology means that education gets “more better.”

Every year since 2004, the New Media Consortium, a non-profit organization that advocates for new media and new technologies in education, has issued its own forecasting report, the Horizon Report, naming a handful of technologies that, as the name suggests, it contends are “on the horizon.”

Unlike Gartner, the New Media Consortium is fairly transparent about how this process works. The organization invites various “experts” to participate in the advisory board that, throughout the course of each year, works on assembling its list of emerging technologies. The process relies on the Delphi method, whittling down a long list of trends and technologies by a process of ranking and voting until six key trends, six emerging technologies remain.

Disclosure/disclaimer: I am a folklorist by training. The last time I took a class on “methods” was, like, 1998. And admittedly I never learned about the Delphi method – what the New Media Consortium uses for this research project – until I became a scholar of education technology looking into the Horizon Report. As a folklorist, of course, I did catch the reference to the Oracle of Delphi.

Like so much of computer technology, the roots of the Delphi method are in the military, developed during the Cold War to forecast technological developments that the military might use and that the military might have to respond to. The military wanted better predictive capabilities. But – and here’s the catch – it wanted to identify technology trends without being caught up in theory. It wanted to identify technology trends without developing models. How do you do that? You gather experts. You get those experts to consensus.

So here is the consensus from the past twelve years of the Horizon Report for higher education. These are the technologies it has identified that are between one and five years from mainstream adoption:

It’s pretty easy, as with the Gartner Hype Cycle, to look at these predictions and note that they are almost all wrong in some way or another.

Some are wrong because, say, the timeline is a bit off. The Horizon Report said in 2010 that “open content” was less than a year away from widespread adoption. I think we’re still inching towards that goal – admittedly “open textbooks” have seen a big push at the federal and at some state levels in the last year or so.

Some of these predictions are just plain wrong. Virtual worlds in 2007, for example.

And some are wrong because, to borrow a phrase from the theoretical physicist Wolfgang Pauli, they’re “not even wrong.” Take “collaborative learning,” for example, which this year’s K–12 report posits as a mid-term trend. Like, how would you argue against “collaborative learning” as occurring – now or some day – in classrooms? As a prediction about the future, it is not even wrong.

But wrong or right – that’s not really the problem. Or rather, it’s not the only problem even if it is the easiest critique to make. I’m not terribly concerned about the accuracy of the predictions about the future of education technology that the Horizon Report has made over the last decade. But I do wonder how these stories influence decision-making across campuses.

What might these predictions – this history of the future – tell us about the wishful thinking surrounding education technology and about the direction that the people the New Media Consortium views as “experts” want the future to take? What can we learn about the future by looking at the history of our imagining about education’s future? What role does powerful ed-tech storytelling (also known as marketing) play in shaping that future? Because remember: to predict the future is to control it – to attempt to control the story, to attempt to control what comes to pass.

It’s both convenient and troubling, then, that these forward-looking reports act as though they have no history of their own; they purposefully minimize or erase their own past. Each year – and I think this is what irks me most – the NMC fails to look back at what it had predicted just the year before. It never revisits older predictions. It never mentions that they even exist. Gartner too removes technologies from the Hype Cycle each year with no explanation for what happened, no explanation as to why trends suddenly appear and disappear and reappear. These reports only look forward, with no history to ground their direction in.

I understand why these sorts of reports exist, I do. I recognize that they are rhetorically useful to certain people in certain positions making certain claims about “what to do” in the future. You can write in a proposal that, “According to Gartner… blah blah blah.” Or “The Horizon Report indicates that this is one of the most important trends in coming years, and that is why we need to commit significant resources – money and staff – to this initiative.” But then, let’s be honest, these reports aren’t about forecasting a future. They’re about justifying expenditures.

“The best way to predict the future is to invent it,” computer scientist Alan Kay once famously said. I’d wager that the easiest way is just to make stuff up and issue a press release. I mean, really. You don’t even need the pretense of a methodology. Nobody is going to remember what you predicted. Nobody is going to remember if your prediction was right or wrong. Nobody – certainly not the technology press, which is often painfully unaware of any history, near-term or long ago – is going to call you to task. This is particularly true if you make your prediction vague – like “within our lifetime” – or set your target date just far enough in the future – “In fifty years, there will be only ten institutions in the world delivering higher education and Udacity has a shot at being one of them.”

Let’s consider: is there something about the field of computer science in particular – and its ideological underpinnings – that makes it more prone to encourage, embrace, espouse these sorts of predictions? Is there something about Americans’ faith in science and technology, about our belief in technological progress as a signal of socio-economic or political progress, that makes us more susceptible to take these predictions at face value? Is there something about our fears and uncertainties – and not just now, days before this Presidential Election where we are obsessed with polls, refreshing Nate Silver’s website obsessively – that makes us prone to seek comfort, reassurance, certainty from those who can claim that they know what the future will hold?

“Software is eating the world,” investor Marc Andreessen pronounced in a Wall Street Journal op-ed in 2011. “Over the next 10 years,” he wrote, “I expect many more industries to be disrupted by software, with new world-beating Silicon Valley companies doing the disruption in more cases than not.” Buy stock in technology companies was really the underlying message of Andreessen’s op-ed; this isn’t another tech bubble, he wanted to reassure investors. But many in Silicon Valley have interpreted this pronouncement – “software is eating the world” – as an affirmation and an inevitability. I hear it repeated all the time – “software is eating the world” – as though, once again, repeating things makes them true or makes them profound.

If we believe that, indeed, “software is eating the world,” that we are living in a moment of extraordinary technological change, that we must – according to Gartner or the Horizon Report – be ever-vigilant about emerging technologies, that these technologies are contributing to uncertainty, to disruption, then it seems likely that we will demand a change in turn to our educational institutions (to lots of institutions, but let’s just focus on education). This is why this sort of forecasting is so important for us to scrutinize – to do so quantitatively and qualitatively, to look at methods and at theory, to ask who’s telling the story and who’s spreading the story, to listen for counter-narratives.

This technological change, according to some of the most popular stories, is happening faster than ever before. It is creating an unprecedented explosion in the production of information. New information technologies, so we’re told, must therefore change how we learn – change what we need to know, how we know, how we create and share knowledge. Because of the pace of change and the scale of change and the locus of change (that is, “Silicon Valley” not “The Ivory Tower”) – again, so we’re told – our institutions, our public institutions can no longer keep up. These institutions will soon be outmoded, irrelevant. Again – “In fifty years, there will be only ten institutions in the world delivering higher education and Udacity has a shot at being one of them.”

These forecasting reports, these predictions about the future make themselves necessary through this powerful refrain, insisting that technological change is creating so much uncertainty that decision-makers need to be ever vigilant, ever attentive to new products.

As Neil Postman and others have cautioned us, technologies tend to become mythic – unassailable, God-given, natural, irrefutable, absolute. So it is predicted. So it is written. Techno-scripture, to which we hand over a certain level of control – to the technologies themselves, sure, but just as importantly to the industries and the ideologies behind them. Take, for example, the founding editor of the technology trade magazine Wired, Kevin Kelly. His 2010 book was called What Technology Wants, as though technology is a living being with desires and drives; the title of his 2016 book, The Inevitable. We humans, in this framework, have no choice. The future – a certain flavor of technological future – is pre-ordained. Inevitable.

I’ll repeat: I am not a futurist. I don’t make predictions. But I can look at the past and at the present in order to dissect stories about the future.

So is the pace of technological change accelerating? Is society adopting technologies faster than it’s ever done before? Perhaps it feels like it. It certainly makes for a good headline, a good stump speech, a good keynote, a good marketing claim, a good myth. But the claim starts to fall apart under scrutiny.

This graph comes from an article in the online publication Vox that includes a couple of those darling made-to-go-viral videos of young children using “old” technologies like rotary phones and portable cassette players – highly clickable, highly sharable stuff. The visual argument in the graph: the number of years it takes for one quarter of the US population to adopt a new technology has been shrinking with each new innovation.

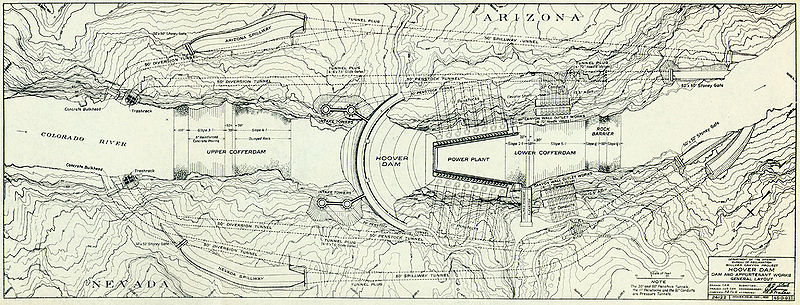

But the data is flawed. Some of the dates given for these inventions are questionable at best, if not outright inaccurate. If nothing else, it’s not so easy to pinpoint the exact moment, the exact year when a new technology came into being. There often are competing claims as to who invented a technology and when, for example, and there are early prototypes that may or may not “count.” James Clerk Maxwell did publish A Treatise on Electricity and Magnetism in 1873. Alexander Graham Bell made his famous telephone call to his assistant in 1876. Guglielmo Marconi did file his patent for radio in 1897. John Logie Baird demonstrated a working television system in 1926. The MITS Altair 8800, an early personal computer that came as a kit you had to assemble, was released in 1975. But Martin Cooper, a Motorola exec, made the first mobile telephone call in 1973, not 1983. And the Internet? The first ARPANET link was established between UCLA and the Stanford Research Institute in 1969. The Internet was not invented in 1991.

So we can reorganize the bar graph. But it’s still got problems.

The Internet did become more privatized, more commercialized around that date – 1991 – and thanks to companies like AOL, a version of it became more accessible to more people. But if you’re looking at when technologies became accessible to people, you can’t use 1873 as your date for electricity, you can’t use 1876 as your year for the telephone, and you can’t use 1926 as your year for the television. It took years for the infrastructure of electricity and telephony to be built, for access to become widespread; and subsequent technologies, let’s remember, have simply piggy-backed on these existing networks. Our Internet service providers today are likely telephone and TV companies; our houses are already wired for new WiFi-enabled products and predictions.

Economic historians who are interested in these sorts of comparisons of technologies and their effects typically set the threshold at 50% – that is, how long does it take after a technology is commercialized (not simply “invented”) for half the population to adopt it. This way, you’re not only looking at the economic behaviors of the wealthy, the early-adopters, the city-dwellers, and so on (but to be clear, you are still looking at a particular demographic – the privileged half).
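How much the choice of threshold matters can be seen in a small sketch. The function `years_to_threshold` and the adoption series below are hypothetical, made up purely for illustration; they are not the data behind either graph.

```python
def years_to_threshold(commercialized, adoption_by_year, threshold=0.5):
    """Years from commercialization until adoption first reaches the threshold.

    adoption_by_year maps a year to the share of households (0.0 to 1.0).
    Returns None if the threshold is never reached in the data.
    """
    for year in sorted(adoption_by_year):
        if year >= commercialized and adoption_by_year[year] >= threshold:
            return year - commercialized
    return None


# Entirely made-up series for a hypothetical gadget; NOT real adoption data.
gadget = {2000: 0.05, 2004: 0.26, 2010: 0.51, 2014: 0.70}

print(years_to_threshold(2000, gadget, threshold=0.25))  # one-quarter cutoff
print(years_to_threshold(2000, gadget, threshold=0.50))  # one-half cutoff
```

With this invented series, the same gadget takes 4 years to reach a quarter of households but 10 years to reach half. Moving the starting year from commercialization back to invention would stretch both numbers again, which is why the dates and the cutoff have to be chosen consistently before any two technologies can be compared.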

And that changes the graph again:

How many years do you think it’ll be before half of US households have a smart watch? A drone? A 3D printer? Virtual reality goggles? A self-driving car? Will they? Will it be fewer years than 9? I mean, it would have to be if, indeed, “technology” is speeding up and we are adopting new technologies faster than ever before.

Some of us might adopt technology products quickly, to be sure. Some of us might eagerly buy every new Apple gadget that’s released. But we can’t claim that the pace of technological change is speeding up just because we personally go out and buy a new iPhone every time Apple tells us the old model is obsolete. Removing the headphone jack from the latest iPhone does not mean “technology changing faster than ever,” nor does showing how headphones have changed since the 1970s. None of this is really a reflection of the pace of change; it’s a reflection of our disposable income and an ideology of obsolescence.

Some economic historians like Robert J. Gordon actually contend that we’re not in a period of great technological innovation at all; instead, we find ourselves in a period of technological stagnation. The changes brought about by the development of information technologies in the last 40 years or so pale in comparison, Gordon argues (and this is from his recent book The Rise and Fall of American Growth: The US Standard of Living Since the Civil War), to those “great inventions” that powered massive economic growth and tremendous social change in the period from 1870 to 1970 – namely electricity, sanitation, chemicals and pharmaceuticals, the internal combustion engine, and mass communication. But that doesn’t jibe with “software is eating the world,” does it?

Let’s return briefly to those Horizon Report predictions again. They certainly reflect this belief that technology must be speeding up. Every year, there’s something new. There has to be. That’s the purpose of the report. The horizon is always “out there,” off in the distance.

But if you squint, you can see each year’s report also reflects a decided lack of technological change. Every year, something is repeated – perhaps rephrased. And look at the predictions about mobile computing:

- 2006 – the phones in their pockets

- 2007 – the phones in their pockets

- 2008 – oh crap, we don’t have enough bandwidth for the phones in their pockets

- 2009 – the phones in their pockets

- 2010 – the phones in their pockets

- 2011 – the phones in their pockets

- 2012 – the phones too big for their pockets

- 2013 – the apps on the phones too big for their pockets

- 2015 – the phones in their pockets

- 2016 – the phones in their pockets

This hardly makes the case for technological speeding up, for technology changing faster than it’s ever changed before. But that’s the story that people tell nevertheless. Why?

I pay attention to this story, as someone who studies education and education technology, because I think these sorts of predictions, these assessments about the present and the future, frequently serve to define, disrupt, destabilize our institutions. This is particularly pertinent to our schools, which are already caught between a boundedness to the past – replicating scholarship, cultural capital, for example – and the demand that they bend to the future – preparing students for civic, economic, social relations yet to be determined.

But I also pay attention to these sorts of stories because there’s that part of me that is horrified at the stuff – predictions – that people pass off as true or as inevitable.

“65% of today’s students will be employed in jobs that don’t exist yet.” I hear this statistic cited all the time. And it’s important, rhetorically, that it’s a statistic – that gives the appearance of being scientific. Why 65%? Why not 72% or 53%? How could we even know such a thing? Some people cite this as a figure from the Department of Labor. It is not. I can’t find its origin – but it must be true: a futurist said it in a keynote, and the video was posted to the Internet.

The statistic is particularly amusing when quoted alongside one of the many predictions we’ve been inundated with lately about the coming automation of work. In 2014, The Economist asserted that “nearly half of American jobs could be automated in a decade or two.” “Before the end of this century,” Wired Magazine’s Kevin Kelly announced earlier this year, “70 percent of today’s occupations will be replaced by automation.”

Therefore the task for schools – and I hope you can start to see where these different predictions start to converge – is to prepare students for a highly technological future, a future that has been almost entirely severed from the systems and processes and practices and institutions of the past. And if schools cannot conform to this particular future, then “In fifty years, there will be only ten institutions in the world delivering higher education and Udacity has a shot at being one of them.”

Now, I don’t believe that there’s anything inevitable about the future. I don’t believe that Moore’s Law – that the number of transistors on an integrated circuit doubles every two years and therefore computers are always exponentially smaller and faster – is actually a law. I don’t believe that robots will take, let alone need take, all our jobs. I don’t believe that YouTube has rendered school irrevocably out-of-date. I don’t believe that technologies are changing so quickly that we should hand over our institutions to entrepreneurs, privatize our public sphere for techno-plutocrats.

I don’t believe that we should cheer Elon Musk’s plans to abandon this planet and colonize Mars – he’s predicted he’ll do so by 2026. I believe we stay and we fight. I believe we need to recognize this as an ego-driven escapist evangelism.

I believe we need to recognize that predicting the future is a form of evangelism as well. Sure, it gets couched in terms of science; it is underwritten by global capitalism. But it’s a story – a story that then takes on these mythic proportions, insisting that it is unassailable, unverifiable, but true.

The best way to invent the future is to issue a press release. The best way to resist this future is to recognize that, once you poke at the methodology and the ideology that underpins it, a press release is all that it is.

A special thanks to Tressie McMillan Cottom and David Golumbia for organizing this talk. And to Mike Caulfield for always helping me hash out these ideas.

_____

Audrey Watters is a writer who focuses on education technology – the relationship between politics, pedagogy, business, culture, and ed-tech. She has worked in the education field for over 15 years: teaching, researching, organizing, and project-managing. Although she was two chapters into her dissertation (on a topic completely unrelated to ed-tech), she decided to abandon academia, and she now happily fulfills the one job recommended to her by a junior high aptitude test: freelance writer. Her stories have appeared on NPR/KQED’s education technology blog MindShift, in the data section of O’Reilly Radar, on Inside Higher Ed, in The School Library Journal, in The Atlantic, on ReadWriteWeb, and Edutopia. She is the author of the recent book The Monsters of Education Technology (Smashwords, 2014) and working on a book called Teaching Machines. She maintains the widely-read Hack Education blog, and writes frequently for The b2 Review Digital Studies magazine on digital technology and education.