Christian Jacob, in The Sovereign Map, describes maps as enablers of fantasy: “Maps and globes allow us to live a voyage reduced to the gaze, stripped of the ups and downs and chance occurrences, a voyage without the narrative, without pitfalls, without even the departure” (2005). Consumers and theorists of maps, more than cartographers themselves, are especially well placed to enjoy the “voyage reduced to the gaze” that cartographic artifacts (including texts) are able to provide. An outside view, distant from the production of the artifact, activates its epistemological potential in a way that producing the same artifact cannot.

This dynamic also operates at the conceptual level, in how cartography is interpreted as a discipline. J.B. Harley, in his famous essay “Deconstructing the Map,” writes that:

a major roadblock to our understanding is that we still accept uncritically the broad consensus, with relatively few dissenting voices, of what cartographers tell us maps are supposed to be. In particular, we often tend to work from the premise that mappers engage in an unquestionably “scientific” or “objective” form of knowledge creation…It is better for us to begin from the premise that cartography is seldom what cartographers say it is (Harley 1989, 57).

Harley urges an interpretation of maps outside the purview and authority of the map’s creator, just as a literary scholar would insist that critics can understand a text beyond what its authors say about it. There can be, in other words, power in distance from the act of making: a clarity available to the thinker who stands outside the process of creation.

The goal of this essay is to push back against the valorization of “tools” and “making” in the digital turn, particularly its manifestation in digital humanities (DH), by reflecting on illustrative examples of the cartographic turn, which, from its roots in the sixteenth century through J.B. Harley’s explosive provocation in 1989 (and beyond), has labored to understand the relationship between the practice of making maps and the experience of looking at and using them. By considering the stubborn and defining spiritual roots of cartographic research, and the way fantasies of empiricism helped to hide the more nefarious and oppressive applications of mapmakers’ work, I hope to hold up a mirror to the state of the digital humanities, a field always under attack, always defining and defending itself, and always fluid in its goals and motions.

Cartography in the sixteenth century, even as its tools and representational techniques were becoming more and more sophisticated, could never quite abandon the religious legacies of its past, nor did it want to. Roger Bacon in the thirteenth century had claimed that only with a thorough understanding of geography could one understand the Bible. Pauline Moffitt Watts, in her essay “The European Religious Worldview and Its Influence on Mapping,” concludes that many maps, including those by Eskrich and Ortelius, preserved a sense of providential and divine meaning even as they sought to narrate smaller, local areas:

Although the messages these maps present are inescapably bound, their ultimate source—God—transcends and eclipses history. His eternity and omnipresence is signed but not constrained in the figurae, places, people, and events that ornament them. They offer fantastic, sometimes absurd vignettes and pastiches that nonetheless integrate the ephemera into a vision of providential history that maintained its power to make meaning well into the early modern era. (2007, 400)

The way maps make meaning lies not just in the technical expertise with which they are constructed but in the visual experiences they provide that “make meaning” for the viewer. By overemphasizing how maps are made, or the geometric innovations that make their creation possible, the cartographic historian and theorist would miss the full effect of the work.

Yet the spiritual dimensions of mapmaking were not opposed to technological expertise; in many cases the two went hand in hand. In his book Radical Arts, the Anglo-Dutch scholar Jan van Dorsten describes the spiritual motivations of sixteenth-century cosmographers disappointed by academic theology’s failure to ease the trauma of the European Reformation: “Theology…as the traditional science of revelation had failed visibly to unite mankind in one indisputably ‘true’ perception of God’s plan and the properties of His creature. The new science of cosmography, its students seem to argue, will eventually achieve precisely that, thanks to its non-disputative method” (1970, 56-7). Some sixteenth-century mapmakers in England, the Netherlands, and elsewhere, Ortelius among them, imagined that the science and art of describing the created world, a text rivaling scripture in both revelatory potential and divine authorship, would create unity out of the disputation-prone culture of academic theology. Unlike theology, in which thinkers map an invisible world held in biblical scripture and apostolic tradition (as well as a millennium’s worth of commentary and exegesis), the liber naturae, the book of nature, is available more directly to the eye, and so seemed less prone to disputation.

Cartographers were attempting to create an accurate imago mundi; surely that was a more tangible and grounded goal than trying to map divinity. Yet, as Patrick Gautier Dalché notes in his essay “The Reception of Ptolemy’s Geography (End of the Fourteenth to Beginning of the Sixteenth Century),” the modernizing techniques of cartography after the “rediscovery” of Ptolemy’s work did not exactly follow a straight line of empirical progress:

The modernization of the imago mundi and the work on modes of representation that developed during the early years of the sixteenth century should not be seen as either more or less successful attempts to integrate new information into existing geographic pictures. Nor should they be seen as steps toward a more “correct” representation, that is, toward conforming to our own notion of correct representation. They were exploratory games played with reality that took people in different directions…Ptolemy was not so much the source of a correct cartography as a stimulus to detailed consideration of an essential fact of cartographic representation: a map is a depiction based on a problematic, arbitrary, and malleable convention. (2007, 360).

So even as the maps of this period may appear more “correct” to us, they are still engaged in experimentation to a degree that undermines any sense of the map as simply an empirical graphic representation of the earth. The “problematic, arbitrary, and malleable” conventions, used by the cartographer but observed and understood by the cartographic theorist and historian, reveal the kind of synergistic relationship between maker and observer, practitioner and theorist, that allows an artifact to come into greater focus.

Yet for much of its history cartography turned away from seeing its work as culturally or even politically embedded. David Stoddart, in his history of geography, identifies Cook’s voyage to the Pacific in 1769 as the point at which cartography began its transformation into an empirical science.[1] Stoddart places geography, from that point onward, within the realm of the natural sciences, based on, as Derek Gregory observes, “three features of decisive significance for the formation of geography as a distinctly modern, avowedly ‘objective’ science: a concern for realism in description, for systematic classification in collection, and for the comparative method in explanation” (Gregory 1994, 19). What disappears, then, in this march toward empiricism is any sense of culturally embedded codes within the map. The map, like a lab report of scientific findings, is meant to represent what is “actually” there. This term “actually” will come back to haunt us when we turn to the digital humanities.

Still, in the long history of mapping, before and after this supposed empirical fulcrum, maps remain slippery and malleable objects that are used for a diverse range of purposes and that reflect the cultural imagination of their makers and observers. As maps took on the appearance of the empirical and began to sublimate the devotional and fantastical aspects they had once shown proudly, they were no less imprinted with cultural knowledge and biases. If anything, the veil of empiricism allowed the cultural, political, and imperial goals of mapmaking to be hidden.

In his groundbreaking “Inventing America: The Culture of the Map,” William Boelhower argued precisely that maps had not simply graphically represented America, but rather that America was invented by maps. “Accustomed to the success of scientific discourse and imbued with the Cartesian tradition,” he writes, “the sons of Columbus naturally presumed that their version of reality was the version” (1988, 212). While Europeans believed they were simply mapping what they saw according to empirical principles, they did not realize they were actually inventing America in their own discursive image. He elaborates: “The Map is America’s precognition; at its center is not geography in se but the eye of the cartographer. The fact requires new respect for the in-forming relation between the history of modern cartography and the history of the Euro-American’s being-in-the-new-world” (213). Empiricism, then, was empire. “Empirical” maps were making the eye of the cartographer into the ideal “objective” viewer, producing a fictional way of seeing that reflected state power. Boelhower refers to the scale map as a kind of “panopticon” because of the “line’s achievement of an absolute and closed system no longer dependent on the local perspectivism of the image. With map in hand, the physical subject is theoretically everywhere and nowhere, truly a global operator” (222). What appears, then, to be simply the gathering, studying, and representation of data is, in fact, a system of discursive domination in which the cartographer asserts their worldview onto a site. As Boelhower puts it: “Never before had a nation-state sprung so rationally from a cartographic fiction, the Euclidean map imposing concrete form on a territory and a people” (223). America was a cartographic invention, presented as though the land were empirically identical to the cartographers’ depiction of it.

To turn again to J.B. Harley’s 1989 bombshell, maps are always evidence of cultural norms and perspectives, even when they try their best to appear sparse and scientific. Referring to “plain scientific maps,” Harley claims that “such maps contain a dimension of ‘symbolic realism’ which is no less a statement of political authority or control than a coat-of-arms or a portrait of a queen placed at the head of an earlier decorative map.” Even “accuracy and austerity of design are now the new talismans of authority culminating in our own age with computer mapping” (60). To represent the world “is to appropriate it” and to “discipline” and “normalize” it (61). The more we move away from cultural markers for the mythical comfort of “empirical” data, the more we find we are creating dominating fictions. There is no representation of data that does not exist within the hierarchies of cultural codes and expectations.

What this rather eclectic history of cartography reveals is that even when maps and mapmaking attempt to hide or move beyond their cultural and devotional roots, cultural, ethical, and political markers inevitably embed themselves in the map’s role as a broker of power. Maps sort data, but in so doing they create worldviews with real-world consequences. Though some early modern mapmakers, such as the Familists (members of the Family of Love) who counted several cartographers among their membership, might have thought their cartographic work provided a more universal and less disputation-prone discursive focus than, say, philosophy or theology, they were producing power through their maps, appropriating and taming the world around them in ways fully accessible only to the reader, the historian, the viewer. Harley invites us to push back against a definition of cartographic studies that follows what cartographers themselves believe cartography must be. One can now, like the author of this essay, be a theorist and historian of cartographic culture without ever having made a map. Having one’s work exist outside the power-formation networks of cartographic technology provides a unique view into how maps make meaning and power out in the world. The main goal of this essay, as I turn to the digital humanities, is to encourage those interested in the digital turn to make room for those who study, observe, and critique, but do not make.[2]

Though the digital turn in the humanities is often celebrated for its wider scope and its ability to let scholars interpret, or at least observe, data trends across many more books than one human could read in the research period of an academic project, I would argue that its central fantasy can be understood through its preoccupation with access and its view of its own labor as fundamentally different from the labor of traditional academic discourse. A radical hybridity is celebrated. Rather than just read books and argue about the contents, the digital humanist is able to draw from a wide variety of sources and expanded data. Michael Witmore, in a recent essay published in New Literary History, celebrates this age of hybridity: “If we speak of hybridization as the point where constraints cease to be either intellectual or physical, where changes in the earth’s mean temperature follow just as inevitably from the ‘political choices of human beings’ as they do from the ‘laws of nature,’ we get a sense of how rich and productive the modernist divide has been. Hybrids have proliferated. Indeed, they seem inexhaustible” (355). Witmore sees digital humanities as existing within this hybridity: “The Latourian theory of hybrids provides a useful starting point for thinking about a field of inquiry in which interpretive claims are supported by evidence obtained via the exhaustive, enumerative resources of computing” (355). The emphasis on the “exhaustive” and “enumerative” resources of computing implies, even if Witmore did not intend it, that computing opens a depth of evidence not available to the non-hybrid, non-digitally enabled humanist.

Indeed, in certain corners of DH, one often finds a suspicious eye cast on traditional exegetical practices carried out without any digital engagement. In The Digital Humanist: A Critical Inquiry by Teresa Numerico, Domenico Fiormonte, and Francesco Tomasi, “the authors call on humanists to acquire the skills to become digital humanists,” elaborating: “Humanists must complete a paso doble, a double step: to rediscover the roots of their own discipline and to consider the changes necessary for its renewal. The start of this process is the realization that humanists have indeed played a role in the history of informatics” (2015, x). Numerico, Fiormonte, and Tomasi offer a vision of the humanities as in need of “renewal” rather than under attack from external forces. The suggestion is that the humanities need to rediscover their roots while at the same time taking on the “changes necessary for [their] renewal,” changes tied to their “role in the history of informatics” and computing. The humanities are then shown to be caught in a double bind: they have forgotten their roots, and they are unable to innovate without turning to the digital.

To offer a political aside: while Numerico, Fiormonte, and Tomasi offer a compelling and necessary history of the humanistic roots of computing, their argument is well in line with right-leaning attacks on the humanities. In their view, the humanities have fallen away from their first purpose, their roots. While the authors of the volume see these roots as connected to the early years of modern computer science, they could just as easily, especially given what early computational humanities looked like, be urging a return to philology and to the world of concordances and indexing that were so important to early and mid-twentieth-century literary studies. They might also gesture instead at the deep history of political and philosophical thought out of which the modern university was born, and which was considered fundamental to the very project of university education until only very recently. Barring a return to these roots, the least the humanities can do to survive is to renew themselves through a connection to the digital and to the site of modern work: the computer terminal.

Of course, what scholarly work is done outside the computer terminal? Journals and, increasingly, whole university press catalogs are being digitized and sold to university libraries on a subscription basis. Scholars read these materials, type their own words into word processing programs on machines (even if, like the recent Freewrite Machine released by Astrohaus, the machine attempts to look as little like a computer as possible), and then, in almost all cases, email their work to editors, who edit it digitally and publish it either in digitally enabled print or directly online. So why aren’t humanists of all sorts already considered connected to the digital?

The answer is complicated and, like so many things in DH, depends on which particular theorist or practitioner you ask. Matthew Kirschenbaum writes about how one knows one is a digital humanist:

You are a digital humanist if you are listened to by those who are already listened to as digital humanists, and they themselves got to be digital humanists by being listened to by others. Jobs, grant funding, fellowships, publishing contracts, speaking invitations—these things do not make one a digital humanist, though they clearly have a material impact on the circumstances of the work one does to get listened to. Put more plainly, if my university hires me as a digital humanist and if I receive a federal grant (say) to do such a thing that is described as digital humanities and if I am then rewarded by my department with promotion for having done it (not least because outside evaluators whom my department is enlisting to listen to as digital humanists have attested to its value to the digital humanities), then, well, yes, I am a digital humanist. Can you be a digital humanist without doing those things? Yes, if you want to be, though you may find yourself being listened to less unless and until you do some thing that is sufficiently noteworthy that reasonable people who themselves do similar things must account for your work, your thing, as a part of the progression of a shared field of interest. (2014, 55)

Kirschenbaum defines the digital humanist as, mostly, someone who does something that earns the recognition of other digital humanists. He argues that this is not particularly different from the traditional humanities, in which publications, grants, jobs, and the like are the standard measures of who is or is not a scholar. Yet one wonders, especially in the age of the complete collapse of the humanities job market, whether such institutional distinctions are either ethical or accurate. What would we call someone with a Ph.D. (or even without one) who spends their days reading books, reading scholarly articles, and writing in their own room about the Victorian verse monologue or the early Tudor dramatic interludes? If no one reads a scholar, are they still a scholar? For the creative arts, we seem to have answered this question. We believe that the work of a poet, artist, or philosopher matters much more than their institutional appreciation or memberships during the era of the work’s production. Also, the need to be “listened to” is particularly vexed and reflects some of the political critiques that are often launched at DH. Who is most listened to in our society? White, cisgendered, heterosexual men. In the age of Trump, we are especially attuned to the fact that whom we choose to listen to is not always the most deserving or talented voice, but the one reflecting existing narratives of racial and economic distribution.

Beyond this, the combined requirement of institutional recognition and economic investment (a salary from a university, a prestigious grant paid out) ties the work of the humanist to institutional rewards. One can be a poet, scholar, or thinker in one’s own house, but one cannot be an investment banker or a lawyer or a police officer by self-declaration. The fluid nature of who can be a philosopher, thinker, poet, or scholar has always meant that what matters is the work of a writer or maker, not their institutional affiliation. Though DH is a diverse body of practitioners doing all sorts of work, it is often framed, sometimes only implicitly, as a return to “work” over “theory.” Kirschenbaum, for instance, defending DH against accusations that it opposes the traditional work of the humanities, writes: “Digital humanists don’t want to extinguish reading and theory and interpretation and cultural criticism. Digital humanists want to do their work… they want professional recognition and stability, whether as contingent labor, ladder faculty, graduate students, or in ‘alt-ac’ settings” (56). They essentially want the same things any other scholar does. Yet while digital humanists are, on the one hand, defined by their ability to be listened to and to have professional recognition and stability, they are, on the other, still in search of recognition and stability and eager to reshape humanistic work toward a more technological model.

This leads to a question that is not always explored closely enough in discussions of the digital humanities in higher education. Though scholars are rightly building bridges between STEM and the humanities (and pushing for STEAM over STEM), there are major institutional differences between how the humanities and the sciences have traditionally functioned. Scientific research largely happens because of institutional investment of some kind, whether from governmental, NGO, or corporate grants. This is why the funding sources of any given study are particularly important to follow. In the humanities, of course, grants also exist, and they are a marker of career prestige. No one could doubt the benefit of time spent in a far-away archive, or at home writing instead of teaching thanks to a dissertation-completion grant. Grants, in other words, boost careers, but they are not necessary.[3] Very successful humanists have produced influential work with nothing more than library resources. In many cases, access to a library, a computer, and a desk is all one needs, and the digitization of many archives (a phenomenon not free from political and ethical complications) has expanded access to archival materials once available only to students at wealthy institutions with deep special collections budgets, or to those with grants allowing them to travel and lodge far away for their research.

All this is to say that a particular valorization of the sciences is risky business for the humanities. Kirschenbaum recommends that since “digital humanities…is sometimes said to suffer from Physics envy,” the field should embrace this label and turn to “a singularly powerful intellectual precedent for examining in close (yes, microscopic) detail the material conditions of knowledge production in scientific settings or configurations. Let us read citation networks and publication venues. Let us examine the usage patterns around particular tools. Let us treat the recensio of data sets” (60). Longing for the humanities to resemble the sciences is nothing new. Longing for data sets instead of individual texts, or for “particular tools” rather than a philosophical problem or trend, can sometimes be a helpful corrective to more Platonic searches for the “spirit” of a work or movement. And yet there are risks to this approach, not least because the works themselves, that is, the objects of inquiry, are treated in such general terms that they become essentially invisible. One can miss the tree for the forest and know more about the number of citations of Dante’s Commedia than about the original text, or about the spirit in which those citations are made. Surely there is room for both, except when, as hiring shrinks, there isn’t.

In fact, the economic politics of the digital humanities have long been a source of at times fiery debate. Daniel Allington, Sarah Brouillette, and David Golumbia, in “Neoliberal Tools (and Archives): A Political History of Digital Humanities,” argue that the digital humanities have long been defined by a preference for lab- and project-based sources of knowledge over traditional humanistic inquiry:

What Digital Humanities is not about, despite its explicit claims, is the use of digital or quantitative methodologies to answer research questions in the humanities. It is, instead, about the promotion of project-based learning and lab-based research over reading and writing, the rebranding of insecure campus employment as an empowering “alt-ac” career choice, and the redefinition of technical expertise as a form (indeed, the superior form) of humanistic knowledge. (Allington, Brouillette and Golumbia 2016)

This last point, the valorization of “technical expertise,” is, I would argue, profoundly difficult to perform in a way that doesn’t implicitly devalue the classic toolbox of humanistic inquiry. The motto “More hack, less yack”—a favorite of the THATCamps, collaborative “un-conferences”—encapsulates this idea. Too much unfettered talking could lead to discord, to ambiguity, and to strife. To hack, on the other hand, is understood as something tangible and something implicitly more worthwhile than the production of discourse outside of particular projects and digital labs. Yet Natalia Cecire has noted, “You show up at a THATCamp and suddenly folks are talking about separating content and form as if that were, like, a real thing you could do. It makes the head spin” (Cecire 2011). Context, with all its ambiguities, once the bedrock of humanistic inquiry, is being sidestepped for massive data analysis that, by the very nature of distant reading, cannot account for context to a degree that would satisfy, say, the many Renaissance scholars who trained me. Cecire’s argument is a valuable one. In her post, she does not argue that we should necessarily follow a strategy of “no hack,” only that “we should probably get over the aversion to ‘yack.’” As she notes, “[yack] doesn’t have to replace ‘hack’; the two are not antithetical.”

As DH continues to define itself, one can detect a sense that digital humanists’ focus on individual pieces or series of data, as well as their work in coding, embeds them in more empirical conversations that do not rise to the level of speculation so emblematic of what used to be called high theory. This is, for many DH practitioners, a source of great pride. Kirschenbaum ends his essay with the following observation: “there is one thing that digital humanities ineluctably is: digital humanities is work, somebody’s work, somewhere, some thing, always. We know how to talk about work. So let’s talk about this work, in action, this actually existing work” (61). The author’s insistence on “some thing” and “this actually existing work” implies that there is work that is not centered on a thing or that does not actually exist, and that the move to more concrete objects of inquiry, toward more empirical subjects, is a defining characteristic of digital humanities.

This, among other issues, has led many to respond to the digital humanities as if its practitioners were cooperating with and participating in the corporatized ideologies of Silicon Valley “tech culture.” Whitney Trettien, in an insightful blog post, claims, “Humanities scholars who engage with technology in non-trivial ways have done a poor job responding to such criticism” and accuses those who criticize digital humanities of “continuing to reify a diverse set of practices as a homogeneous whole.” Let me be clear: I am not claiming that Kirschenbaum or Trettien or any other scholar writing in a theoretical mode about digital humanities is representative of an entire field. But their writing is part of the discursive community, and when those of us whose work is enabled by digital resources, but who do not build digital tools, see our work described as a “trivial” engagement with the digital and see it contrasted, implicitly but still clearly, with “this actually existing work,” it is hard not to feel that the humanist working on texts with digital tools (but not about digital tools or about data derived from digital modeling) is being slighted.

For instance, in a short essay by Tom Scheinfeldt, “Why Digital Humanities is ‘Nice,’” the author claims: “One of the things that people often notice when they enter the field of digital humanities is how nice everybody is. This can be in stark contrast to other (unnamed) disciplines where suspicion, envy, and territoriality sometimes seem to rule. By contrast, our most commonly used bywords are ‘collegiality,’ ‘openness,’ and ‘collaboration’” (2012, 1). I have to admit I have not noticed what Scheinfeldt claims people often notice (perhaps I have spent too much time on Twitter watching digital humanities debates unfurl in less than “nice” ways), but the claim, even as a discursive and defining fiction around DH, helps us understand one thread of the digital humanities’ project of self-definition: we are kind because what we work on is verifiable fact, not complicated and speculative philosophy or theory. Scheinfeldt says as much as he concludes his essay:

Digital humanities is nice because, as I have described in earlier posts, we’re often more concerned with method than we are with theory. Why should a focus on method make us nice? Because methodological debates are often more easily resolved than theoretical ones. Critics approaching an issue with sharply opposed theories may argue endlessly over evidence and interpretation. Practitioners facing a methodological problem may likewise argue over which tool or method to use. Yet at some point in most methodological debates one of two things happens: either one method or another wins out empirically, or the practical needs of our projects require us simply to pick one and move on. Moreover, as Sean Takats, my colleague at the Roy Rosenzweig Center for History and New Media (CHNM), pointed out to me today, the methodological focus makes it easy for us to “call bullshit.” If anyone takes an argument too far afield, the community of practitioners can always put the argument to rest by asking to see some working code, a useable standard, or some other tangible result. In each case, the focus on method means that arguments are short, and digital humanities stays nice. (2)

The most obvious question one is left with is: but what is the code doing? Where are the humanities in this vision of the digital? What truly discursive and interpretive work could produce fundamental disagreements that could be resolved simply by verifying the code in a community setting? Also, the celebration of how enforceable community norms are if an argument goes “too far afield” presents a troubling vision of discursive community, one in which the appearance of agreement, enforceable through “empirical” testing, matters more than freedom of debate. In our current political climate, one wonders whether such empirically minded groupthink adequately makes room for more vulnerable, and not quite as loud, voices. When the goal is a functioning website or program, Scheinfeldt may be quite right, but in discursive work in the humanities, citing a text, for instance, rarely quells disagreement; it only makes clearer where the battle lines are drawn. This is particularly ironic given that the digital humanities, understood as a giant, discursive, never-quite-adequate term for the field, has been defining itself for decades, with essay after essay on just what DH is.

I am echoing here some of the arguments offered by Adeline Koh in her essay “Niceness, Building, and Opening the Genealogy of the Digital Humanities: Beyond the Social Contract of Humanities Computing.” In this important intervention, Koh argues that DH is centered on two linked characteristics: niceness and technological expertise. Though one might think these requirements are unrelated, Koh reveals how they are linked in the formation of a DH social contract:

In my reading of this discursive structure, each rule reinforces the other. An emphasis on method as it applies to a project—which requires technical knowledge—requires resolution, which in turn leads to niceness and collegiality. To move away from technical knowledge—which appears to happen in [prominent DH scholar Stephen] Ramsay’s formulation of DH 2—is to move away from niceness and toward a darker side of the digital humanities. Proponents of technical knowledge appear to be arguing that to reject an emphasis on method is to reject an emphasis on civility. In other words, these two rules form the basis of an attempt to enforce a digital humanities social contract: necessary conditions (technical knowledge) that impose civic responsibilities (civility and niceness). (100)

Koh believes that what is needed to weaken the link between the DH social contract and the tenets of liberalism is an expanded genealogy of the digital humanities. Koh urges DH to consider its roots beyond humanities computing.[4]

To demand that one work with technical expertise on “this actually existing work” (whatever that work may end up being) is to state rather clearly that there are guidelines fencing in the digital humanities. As in the history of cartographic studies, the opinions of the makers, those who work hands-on with data sets, have been allowed to determine what the digital humanities are (or what DH is). Just as J.B. Harley challenged historians and theorists of cartography to ignore what the cartographers say and to explore maps and mapmaking apart from the tools needed to make a map, perhaps DH is ready to enter a new phase, one in which it begins its own renewal by no longer valorizing tools, code, and technology, and by letting the observers, the consumers, the fantasists, and the historians of power and oppression in (without their laptops). Indeed, what DH can learn from the history of cartography is that DH, in all its many forms, is seldom (just) what digital humanists say it is.

_____

Tim Duffy is a scholar of Renaissance literature, poetics, and spatial philosophy.

_____

Notes

[1] See David Stoddart, “Geography—a European Science” in On Geography and Its History, pp. 28-40. For a discussion of Stoddart’s thinking, see Derek Gregory, Geographic Imaginations, pp. 16-21.

[2] Obviously, critics and writers make things too, but their critique exists outside of the production of the artifact that they study. Cartographic theorists, as this article argues, need not be cartographers themselves, any more than a critic or theorist of the digital need be a programmer or creator of digital objects.

[3] For more on the political problems of dependence on grants, see Waltzer (2012): “One of those conditions is the dependence of the digital humanities upon grants. While the increase in funding available to digital humanities projects is welcome and has led to many innovative projects, an overdependence on grants can shape a field in a particular way. Grants in the humanities last a short period of time, which make them unlikely to fund the long-term positions that are needed to mount any kind of sustained challenge to current employment practices in the humanities. They are competitive, which can lead to skewed reporting on process and results, and reward polish, which often favors the experienced over the novice. They are external, which can force the orientation of the organizations that compete for them outward rather than toward the structure of the local institution and creates the pressure to always be producing” (340-341).

[4] In her reading of how digital humanities deploys niceness, Koh writes “In my reading of this discursive structure, each rule reinforces the other. An emphasis on method as it applies to a project—which requires technical knowledge—requires resolution, which in turn leads to niceness and collegiality. To move away from technical knowledge…is to move away from niceness and toward a darker side of the digital humanities. Proponents of technical knowledge appear to be arguing that to reject an emphasis on method is to reject an emphasis on civility” (100).

_____

Works Cited

- Allington, Daniel, Sarah Brouillette, and David Golumbia. 2016. “Neoliberal Tools (and Archives): A Political History of Digital Humanities.” Los Angeles Review of Books.

- Boelhower, William. 1988. “Inventing America: The Culture of the Map” in Revue française d’études américaines 36. 211-224.

- Cecire, Natalia. 2011. “When DH Was in Vogue; or, THATCamp Theory.”

- Dalché, Patrick Gautier. 2007. “The Reception of Ptolemy’s Geography (End of the Fourteenth to Beginning of the Sixteenth Century).” In The History of Cartography: Cartography in the European Renaissance, Vol. 3, Part 1. Edited by David Woodward. Chicago: University of Chicago Press. 285-364.

- Fiormonte, Domenico, Teresa Numerico, and Francesca Tomasi. 2015. The Digital Humanist: A Critical Inquiry. New York: Punctum Books.

- Gregory, Derek. 1994. Geographic Imaginations. Cambridge: Blackwell.

- Harley, J.B. 2011. “Deconstructing the Map” in The Map Reader: Theories of Mapping Practice and Cartographic Representation, First Edition, edited by Martin Dodge, Rob Kitchin and Chris Perkins. New York: John Wiley & Sons, Ltd. 56-64.

- Jacob, Christian. 2005. The Sovereign Map. Translated by Tom Conley. Chicago: University of Chicago Press.

- Kirschenbaum, Matthew. 2014. “What is ‘Digital Humanities,’ and Why Are They Saying Such Terrible Things about It?” Differences 25:1. 46-63.

- Koh, Adeline. 2014. “Niceness, Building, and Opening the Genealogy of the Digital Humanities: Beyond the Social Contract of Humanities Computing.” Differences 25:1. 93-106.

- Scheinfeldt, Tom. 2012. “Why Digital Humanities is ‘Nice.’” In Matthew Gold, ed., Debates in the Digital Humanities. Minneapolis: University of Minnesota Press.

- Trettien, Whitney. 2016. “Creative Destruction/‘Digital Humanities.’” Medium (Aug 24).

- Watts, Pauline Moffitt. 2007. “The European Religious Worldview and Its Influence on Mapping.” In The History of Cartography: Cartography in the European Renaissance, Vol. 3, Part 1. Edited by David Woodward. Chicago: University of Chicago Press. 382-400.

- Waltzer, Luke. 2012. “Digital Humanities and the ‘Ugly Stepchildren’ of American Higher Education.” In Matthew Gold, ed., Debates in the Digital Humanities. Minneapolis: University of Minnesota Press.

- Witmore, Michael. 2016. “Latour, the Digital Humanities, and the Divided Kingdom of Knowledge.” New Literary History 47:2-3. 353-375.

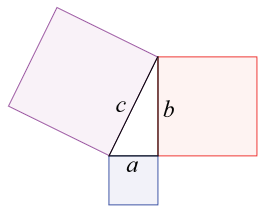

It is understood as more essential than the world and as prior to it. Mathematics becomes an outlier among representational systems because numbers are claimed to be “ideal forms necessarily prior to the material ‘instances’ and ‘examples’ that are supposed to illustrate them and provide their content” (Rotman 2000, 147).