by Zachary Loeb

~

“We hate the people who make us form the connections we do not want to form.” – Simone Weil

1. Repairing Our Common Home

When confronted with the unsettling reality of the world it is easy to feel overwhelmed and insignificant. This feeling of powerlessness may give rise to a temptation to retreat – or to simply shrug – and though people may suspect that they bear some responsibility for the state of affairs in which they are embroiled the scale of the problems makes individuals doubtful that they can make a difference. In this context, the refrain “well, it could always be worse” becomes a sort of inured coping strategy, though this dark prophecy has a tendency to prove itself true week after week and year after year. Just saying that things could be worse than they presently are does nothing to prevent things from deteriorating further. It can be rather liberating to decide that one is powerless, to conclude that one’s actions do not truly matter, to imagine that one will be long dead by the time the bill comes due – for taking such positions enables one to avoid doing something difficult: changing.

A change is coming. Indeed, the change is already here. The question is whether people are willing to consciously change to meet this challenge or if they will only change when they truly have no other option.

The matter of change is at the core of Pope Francis’s recent encyclical Laudato Si’ (“Praise be to You”). Much of the discussion around Laudato Si’ has characterized the document as being narrowly focused on climate change and the environment. Though Laudato Si’ has much to say about the environment, and the threat climate change poses, it is rather reductive to cast Laudato Si’ as “the Pope’s encyclical about the environment.” Granted, that many are describing the encyclical in such terms is understandable as framing it in that manner makes it appear quaint – and may lead to many concluding that they do not need to spend the time reading through the encyclical’s 245 sections (roughly 200 pages). True, Pope Francis is interested in climate change, but in the encyclical he proves far more interested in the shifts in the social, economic, and political climate that have allowed climate change to advance. The importance of Laudato Si’ is precisely that it is less about climate change than it is about the need for humanity to change, as Pope Francis writes:

“we cannot adequately combat environmental degradation unless we attend to causes related to human and social degradation.” (Francis, no. 48)

And though the encyclical is filled with numerous pithy aphorisms it is a text that is worth engaging in its entirety.

Lest there be any doubt, Laudato Si’ is a difficult text to read. Not because it is written in archaic prose, or because it assumes the reader is learned in theology, but because it is discomforting. Laudato Si’ does not tell the reader that they are responsible for the world, instead it reminds them that they have always been responsible for the world, and then points to some of the reasons why this obligation may have been forgotten. The encyclical calls on those with their heads in the clouds (or head in “the cloud”) to see they are trampling the poor and the planet underfoot. Pope Francis has the audacity to suggest, despite what the magazine covers and advertisements tell us, that there is no easy solution, and that if we are honest with ourselves we are not fulfilled by consumerism. What Laudato Si’ represents is an unabashed ethical assault on high-tech/high-consumption life in affluent nations. Yet it is not an angry diatribe. Insofar as the encyclical represents a hammer it is not as a blunt instrument with which one bludgeons foes into submission, but is instead a useful tool one might take up to pull out the rusted old nails in order to build again, as Pope Francis writes:

“Humanity still has the ability to work together in building our common home.” (Francis, no. 13)

Laudato Si’ is a work of intense, even radical, social criticism in the fine raiment of a papal encyclical. The text contains an impassioned critique of technology, an ethically rooted castigation of capitalism, a defense of the environment that emphasizes that humans are part of that same environment, and a demand that people accept responsibility. There is much in Laudato Si’ that those well versed in activism, organizing, environmentalism, critical theory, the critique of technology, radical political economy (and so forth) will find familiar – and it is a document that those bearing an interest in the aforementioned areas would do well to consider. While the encyclical (it was written by the Pope, after all) contains numerous references to Jesus, God, the Church, and the saints – it is clear that Pope Francis intends the document for a wide (not exclusively Catholic, or even Christian) readership. Indeed, those versed in other religious traditions will likely find much in the encyclical that echoes their own beliefs – and the same can likely be said of those interested in ethics with or without the presence of God. While many sections of Laudato Si’ speak to the religious obligation of believers, Pope Francis makes a point of being inclusive to those of different faiths (and no faith) – an inclusion which speaks to his recognition that the problems facing humanity can only be solved by all of humanity. After all:

“we need only take a frank look at the facts to see that our common home is falling into serious disrepair.” (Francis, no. 61)

The term “common home” refers to the planet and all those – regardless of their faith – who dwell there.

Nevertheless, there are several sections in Laudato Si’ that will serve to remind the reader that Pope Francis is the male head of a patriarchal organization. Pope Francis stands firm in his commitment to the poor, and makes numerous comments about the rights of indigenous communities – but he does not have particularly much to say about women. While women certainly number amongst the poor and indigenous, Laudato Si’ does not devote attention to the ways in which the theologies and ideologies of dominance that have wreaked havoc on the planet have also oppressed women. It is perhaps unsurprising that the only woman Laudato Si’ focuses on at any length is Mary, and that throughout the encyclical Pope Francis continually feminizes nature whilst referring to God with terms such as “Father.” The importance of equality is a theme which is revisited numerous times in Laudato Si’ and though Pope Francis addresses his readers as “sisters and brothers” it is worth wondering whether or not this entails true equality between all people – regardless of gender. It is vital to recognize this shortcoming of Laudato Si’ – as it is a flaw that undermines much of the ethical heft of the argument.

In the encyclical Pope Francis laments the lack of concern being shown to those – who are largely poor – already struggling against the rising tide of climate change, noting:

“Our lack of response to these tragedies involving our brothers and sisters points to the loss of that sense of responsibility to our fellow men and women upon which all civil society is founded.” (Francis, no. 25)

Yet it is worth pushing on this “sense of responsibility to our fellow men and women” – and doing so involves a recognition that too often throughout history (and still today) “civil society” has been founded on an emphasis on “fellow men” and not necessarily upon women. In considering responsibilities towards other people Simone Weil wrote:

“The object of any obligation, in the realm of human affairs, is always the human being as such. There exists an obligation towards every human being for the sole reason that he or she is a human being, without any other condition requiring to be fulfilled, and even without any recognition of such obligation on the part of the individual concerned.” (Weil, 5 – The Need for Roots)

To recognize that the obligation is due to “the human being as such” – which seems to be something Pope Francis is claiming – necessitates acknowledging that “the human being” is still often defined as male. And this is a bias that can easily be replicated, even in encyclicals that tout the importance of equality.

There are aspects of Laudato Si’ that will give readers cause to furrow their brows; however, it would be unfortunate if the shortcomings of the encyclical led people to dismiss it completely. After all, Laudato Si’ is not a document that one reads, it is a text with which one wrestles. And, as befits a piece written by a former nightclub bouncer, Laudato Si’ proves to be a challenging and scrappy combatant. Granted, the easiest way to emerge victorious from a bout is to refuse to engage in it in the first place – which is the tactic that many seem to be taking towards Laudato Si’. Yet it should be noted that those whose responses are variations of “the Pope should stick to religion” are largely revealing that they have not seriously engaged with the encyclical. Laudato Si’ does not claim to be a scientific document, but instead recognizes – in understated terms – that:

“A very solid scientific consensus indicates that we are presently witnessing a disturbing warming of the climate system.” (Francis, no. 23)

And that,

“Climate change is a global problem with grave implications: environmental, social, economic, political and for the distribution of goods. It represents one of the principal challenges facing humanity in our day. Its worst impact will probably be felt by developing countries in the coming decades.” (Francis, no. 25)

However, when those who make a habit of paying no heed to scientists themselves make derisive comments that the Pope is not a scientist they are primarily delivering a television-news-bite-ready-quip which ignores that the climate Pope Francis is mainly concerned with is today’s social, economic and political climate.

As has been previously noted, Laudato Si’ is as much a work of stinging social criticism as it is a theological document. It is a text which benefits from the particular analysis of people – be they workers, theologians, activists, scholars, and the list could go on – with knowledge in the particular fields the encyclical touches upon. And yet, one of the most striking aspects of the encyclical – that which poses a particular challenge to the status quo – is the way in which the document engages with technology.

For, it may well be that Laudato Si’ will change the tone of current discussions around technology and its role in our lives.

At least one might hope that it will do so.

2. Meet the New Gods, Not the Same as the Old God

Perhaps being a person of faith makes it easier to recognize the faith of others. Or, put another way, perhaps belief in God makes one attuned to the appearance of new gods. While some studies have shown that in recent years the number of individuals who do not adhere to a particular religious doctrine has risen, Laudato Si’ suggests – though not specifically in these terms – that people may have simply turned to new religions. In the book To Have or To Be?, Erich Fromm uses the term “religion” not to:

“refer to a system that has necessarily to do with a concept of God or with idols or even to a system perceived as religion, but to any group-shared system of thought and action that offers the individual a frame of orientation and an object of devotion.” (Fromm, 135 – italics in original)

Though the author of Laudato Si’, obviously, subscribes to a belief system that has a heck-of-a-lot to do “with a concept of God” – the main position of the encyclical is staked out in opposition to the rise of a “group-shared system of thought” which has come to offer many people both “a frame of orientation and an object of devotion.” Pope Francis warns his readers against giving fealty and adoration to false gods – gods which are as appealing to atheists as they are to old-time-believers. And while Laudato Si’ is not a document that seeks (not significantly, at least) to draw people into the Catholic church, it is a document that warns people against the religion of technology. After all, we cannot return to the Garden of Eden by biting into an Apple product.

It is worth recognizing that there are many reasons why the religion of technology so easily wins converts. The world is a mess and the news reports are filled with a steady flow of horrors – the dangers of environmental degradation seem to grow starker by the day, as scientists issue increasingly dire predictions that we may have already passed the point at which we needed to act. Yet, one of the few areas that continually operates as a site of unbounded optimism is the missives fired off by the technology sector and its boosters. Wearable technology, self-driving cars, the Internet of Things, delivery drones, artificial intelligence, virtual reality – technology provides a vision of the future that is not fixated on rising sea levels and extinction. Indeed, against the backdrop of extinction some even predict that through the power of techno-science humans may not be far off from being able to bring back species that had previously gone extinct.

Technology has become a site of millions of minor miracles that have drawn legions of adherents to the technological god and its sainted corporations – and while technology has been a force present with humans for nearly as long as there have been humans, technology today seems increasingly to be presented in a way that encourages people to bask in its uncanny glow. Contemporary technology – especially of the Internet connected variety – promises individuals that they will never be alone, that they will never be bored, that they will never get lost, and that they will never have a question for which they cannot execute a web search and find an answer. If older religions spoke of a god who was always watching, and always with the believer, then the smart phone replicates and reifies these beliefs – for it is always watching, and it is always with the believer. To return to Fromm’s description of religion it should be fairly apparent that technology today provides people with “a frame of orientation and an object of devotion.” It is thus not simply that technology comes to be presented as a solution to present problems, but that technology comes to be presented as a form of salvation from all problems. Why pray if “there’s an app for that”?

In Laudato Si’, Pope Francis warns against this new religion by observing:

“Life gradually becomes a surrender to situations conditioned by technology, itself viewed as the principal key to the meaning of existence.” (Francis, no. 110)

Granted, the question should be asked as to what is “the meaning of existence” supplied by contemporary technology? The various denominations of the religion of technology are skilled at offering appealing answers to this question filled with carefully tested slogans about making the world “more open and connected.” What the religion of technology continually offers is not so much a way of being in the world as a way of escaping from the world. Without mincing words, the world described in Laudato Si’ is rather distressing: it is a world of vast economic inequality, rising sea levels, misery, existential uncertainty, mountains of filth discarded by affluent nations (including e-waste), and the prospects are grim. By comparison the religion of technology provides a shiny vision of the future, with the promise of escape from earthly concerns through virtual reality, delivery on demand, and the truly transcendent dream of becoming one with machines. The religion of technology is not concerned with the next life, or with the lives of future generations, it is about constructing a new Eden in the now, for those who can afford the right toys. Even if constructing this heaven consigns much of the world’s population to hell. People may not be bending their necks in prayer, but they’re certainly bending their necks to glance at their smart phones. As David Noble wrote:

“A thousand years in the making, the religion of technology has become the common enchantment, not only of the designers of technology but also of those caught up in, and undone by, their godly designs. The expectation of ultimate salvation through technology, whatever the immediate human and social costs, has become the unspoken orthodoxy, reinforced by a market-induced enthusiasm for novelty and sanctioned by millenarian yearnings for new beginnings. This popular faith, subliminally indulged and intensified by corporate, government, and media pitchmen, inspires an awed deference to the practitioners and their promises of deliverance while diverting attention from more urgent concerns.” (Noble, 207)

Against this religious embrace of technology, and the elevation of its evangels, Laudato Si’ puts forth a reminder that one can, and should, appreciate the tools which have been invented – but one should not worship them. To return to Erich Fromm:

“The question is not one of religion or not? but of which kind of religion? – whether it is one that furthers human development, the unfolding of specifically human powers, or one that paralyzes human growth…our religious character may be considered an aspect of our character structure, for we are what we are devoted to, and what we are devoted to is what motivates our conduct. Often, however, individuals are not even aware of the real objects of their personal devotion and mistake their ‘official’ beliefs for their real, though secret religion.” (Fromm, 135-136)

It is evident that Pope Francis considers the worship of technology to be a significant barrier to further “human development” as it “paralyzes human growth.” Technology is not the only false religion against which the encyclical warns – the cult of self worship, unbridled capitalism, the glorification of violence, and the revival tent of consumerism are all considered as false faiths. They draw adherents in by proffering salvation and prescribing a simple course of action – but instead of allowing their faithful true transcendence they warp their followers into sycophants.

Yet the particularly nefarious aspect of the religion of technology, in line with the quotation from Fromm, is the way in which it is a faith to which many subscribe without their necessarily being aware of it. This is particularly significant in the way that it links to the encyclical’s larger concern with the environment and with the poor. Those in affluent nations who enjoy the pleasures of high-tech lifestyles – the faithful in the religion of technology – are largely spared the serious downsides of high-technology. Sure, individuals may complain of aching necks, sore thumbs, difficulty sleeping, and a creeping sense of dissatisfaction – but such issues do not tell of the true cost of technology. What often goes unseen by those enjoying their smart phones are the exploitative regimes of mineral extraction, the harsh labor conditions where devices are assembled, and the toxic wreckage of e-waste dumps. Furthermore, insofar as high-tech devices (and the cloud) require large amounts of energy it is worth considering the degree to which high-tech lifestyles contribute to the voracious energy consumption that helps drive climate change. Granted, those who suffer from these technological downsides are generally not the people enjoying the technological devices.

And though Laudato Si’ may have a particular view of salvation – one need not subscribe to that religion to recognize that the religion of technology is not the faith of the solution.

But the faith of the problem.

3. Laudato Si’ as Critique of Technology

Relatively early in the encyclical, Pope Francis decries how, against the background of “media and the digital world”:

“the great sages of the past run the risk of going unheard amid the noise and distractions of an information overload.” (Francis, no. 47)

Reading through Laudato Si’ it becomes fairly apparent who Pope Francis considers many of these “great sages” to be. For the most part Pope Francis cites the encyclicals of his predecessors, declarations from Bishops’ conferences, the bible, and theologians who are safely ensconced in the Church’s wheelhouse. While such citations certainly help to establish that the ideas being put forth in Laudato Si’ have been circulating in the Catholic Church for some time – Pope Francis’s invocation of “great sages of the past…going unheard” raises a larger question. How much of the encyclical is truly new and how much is a reiteration of older ideas that have gone “unheard?” In fairness, the social critique being advanced by Laudato Si’ may strike many people as novel – particularly in terms of its ethically combative willingness to take on technology – but it may be that the significant thing about Laudato Si’ is not that the message is new, but that the messenger is new. Without wanting to decry or denigrate Laudato Si’ it is worth noting that much of the argument being presented in the document could previously be found in works by thinkers associated with the critique of technology, notably Lewis Mumford and Jacques Ellul. Indeed, the following statement, from Lewis Mumford’s Art and Technics, could have appeared in Laudato Si’ without seeming out of place:

“We overvalue the technical instrument: the machine has become our main source of magic, and it has given us a false sense of possessing godlike powers. An age that has devaluated all its symbols has turned the machine itself into a universal symbol: a god to be worshiped.” (Mumford, 138 – Art and Technics)

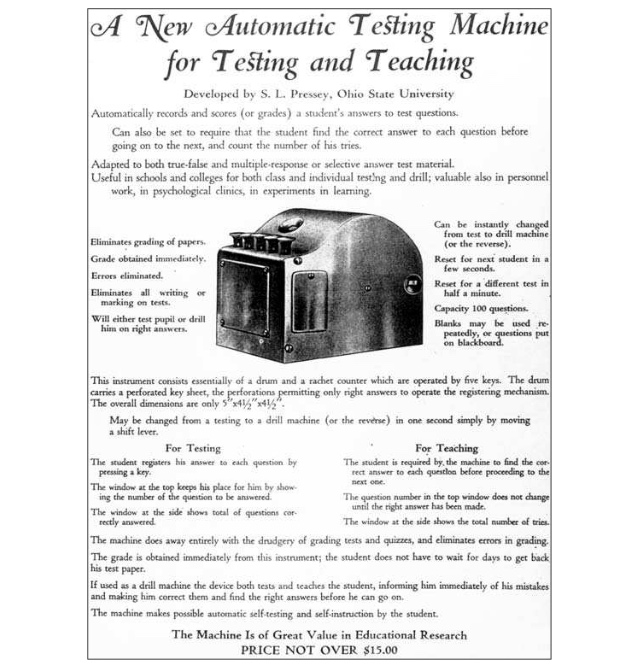

The critique of technology does not represent a cohesive school of thought – rather it is a tendency within several fields (history and philosophy of technology, STS, media ecology, critical theory) that places particular emphasis on the negative impacts of technology. What many of these thinkers emphasized was the way in which the choices of certain technologies over others wind up having profound impacts upon the shape of a society. Thus, within the critique of technology, it is not a matter of anything so ridiculously reductive as “technology is bad” but of considering what alternative forms technology could take: “democratic technics” (Mumford), “convivial tools” (Illich), “appropriate technology” (Schumacher), “liberatory technology” (Bookchin), and so forth. Yet what is particularly important is the fact that the serious critique of technology was directly tied to a critique of the broader society. And thus, Mumford also wrote extensively about urban planning, architecture and cities – while Ellul wrote as much (perhaps more) about theological issues (Ellul was a devout individual who described himself as a Christian anarchist).

With the rise of ever more powerful and potentially catastrophic technological systems, many thinkers associated with the critique of technology began issuing dire warnings about the techno-science wrought danger in which humanity had placed itself. With the appearance of the atomic bomb, humanity had invented the way to potentially bring an end to the whole of the human project. Galled by the way in which technology seemed to be drawing ever more power to itself, Ellul warned of the ascendance of “technique” while Mumford cautioned of the emergence of “the megamachine” with such terms being used to denote not simply technology and machinery but the fusion of techno-science with social, economic and political power – though Pope Francis seems to prefer to use the term “technological paradigm” or “technocratic paradigm” instead of “megamachine.” When Pope Francis writes:

“The technological paradigm has become so dominant that it would be difficult to do without its resources and even more difficult to utilize them without being dominated by their internal logic.” (Francis, no. 108)

Or:

“the new power structures based on the techno-economic paradigm may overwhelm not only our politics but also freedom and justice.” (Francis, no. 53)

Or:

“The alliance between the economy and technology ends up sidelining anything unrelated to its immediate interests.” (Francis, no. 54)

These are comments that are squarely in line with Ellul’s comment that:

“Technical civilization means that our civilization is constructed by technique (makes a part of civilization only that which belongs to technique), for technique (in that everything in this civilization must serve a technical end), and is exclusively technique (in that it excludes whatever is not technique or reduces it to technical forms).” (Ellul, 128 – italics in original)

A particular sign of the growing dominance of technology, and the techno-utopian thinking that everywhere evangelizes for technology, is the belief that to every problem there is a technological solution. Such wishful thinking about technology as the universal panacea was a tendency highly criticized by thinkers like Mumford and Ellul. Pope Francis chastises the prevalence of this belief at several points, writing:

“Obstructionist attitudes, even on the part of believers, can range from denial of the problem to indifference, nonchalant resignation or blind confidence in technical solutions.” (Francis, no. 14)

And the encyclical returns to this, decrying:

“Technology, which, linked to business interests, is presented as the only way of solving these problems,” (Francis, no. 20)

There is more than a passing similarity between the above two quotations from Pope Francis’s 2015 encyclical and the following quotation from Lewis Mumford’s book Technics and Civilization (first published in 1934):

“But the belief that the social dilemmas created by the machine can be solved merely by inventing more machines is today a sign of half-baked thinking which verges close to quackery.” (Mumford, 367)

At the very least this juxtaposition should help establish that there is nothing new about those in power proclaiming that technology will solve everything, but just the same there is nothing particularly new about forcefully criticizing this unblinking faith in technological solutions. If one wanted to do so it would not be an overly difficult task to comb through Laudato Si’ – particularly “Chapter Three: The Human Roots of the Ecological Crisis” – and find a couple of paragraphs by Mumford, Ellul or another prominent critic of technology in which precisely the same thing is being said. After all, if one were to try to capture the essence of the critique of technology in two sentences, one could do significantly worse than the following lines from Laudato Si’:

“We have to accept that technological products are not neutral, for they create a framework which ends up conditioning lifestyles and shaping social possibilities along the lines dictated by the interests of certain powerful groups. Decisions which may seem purely instrumental are in reality decisions about the kind of society we want to build.” (Francis, no. 107)

Granted, the line “technological products are not neutral” may have come as something of a disquieting statement to some readers of Laudato Si’ even if it has long been understood by historians of technology. Nevertheless, it is the emphasis placed on the matter of “the kind of society we want to build” that is of particular importance. For the encyclical does not simply lament the state of the technological world, it advances an alternative vision of technology – one which recognizes the tremendous potential of technological advances but sees how this potential goes unfulfilled. Laudato Si’ is a document which is skeptical of the belief that smart phones have made people happier, and it is a text which shows a clear unwillingness to believe that large tech companies are driven by much other than their own interests. The encyclical bears the mark of a writer who believes in a powerful God and that deity’s prophets, but has little time for would-be all powerful corporations and their lust for profits. One of the themes that ran continuously throughout Lewis Mumford’s work was his belief that the “good life” had been overshadowed by the pursuit of the “goods life” – and a similar theme runs through Laudato Si’ wherein the analysis of climate change, the environment, and what is owed to the poor, is couched in a call to reinvigorate the “good life” while recognizing that the “goods life” is a farce. Despite the power of the “technological paradigm,” Pope Francis remains hopeful regarding the power of people, writing:

“We have the freedom needed to limit and direct technology; we can put it at the service of another type of progress, one which is healthier, more human, more social, more integral. Liberation from the dominant technocratic paradigm does in fact happen sometimes, for example, when cooperatives of small producers adopt less polluting methods of production, and opt for a non-consumerist model of life, recreation and community. Or when technology is directed primarily to resolving people’s concrete problems, truly helping them live with more dignity and less suffering.” (Francis, no. 112)

In the above quotation, what Pope Francis is arguing for is the need for, to use Mumford’s terminology, “democratic technics” to replace “authoritarian technics.” Or, to use Ivan Illich’s terms (and Illich was himself a Catholic priest) the emergence of a “convivial society” centered around “convivial tools.” Granted, as is perhaps not particularly surprising for a call to action, Pope Francis tends to be rather optimistic about the prospects individuals have for limiting and directing technology. For, one of the great fears shared amongst numerous critics of technology was the belief that the concentration of power in “technique” or “the megamachine” or the “technological paradigm” gradually eliminated the freedom to limit or direct it. That potential alternatives emerged was clear, but such paths were quickly incorporated back into the “technological paradigm.” As Ellul observed:

“To be in technical equilibrium, man cannot live by any but the technical reality, and he cannot escape from the social aspect of things which technique designs for him. And the more his needs are accounted for, the more he is integrated into the technical matrix.” (Ellul, 224)

In other words, “technique” gradually eliminates the alternatives to itself. To live in a society shaped by such forces requires an individual to submit to those forces as well. What Laudato Si’ almost desperately seeks to claim, to the contrary, is that it is not too late, that people still have the ability “to limit and direct technology” provided they tear themselves away from their high-tech hallucinations. And this earnest belief is the hopeful core of the encyclical.

Ethically impassioned books and articles decrying what a high consumption lifestyle wreaks upon the planet and which exhort people to think of those who do not share in the thrill of technological decadence are not difficult to come by. And thus, the aspects of Laudato Si’ which may be the most radical and the most striking are the sections devoted to technology. For what the encyclical does so impressively is that it expressly links environmental destruction and the neglect of the poor with the religious allegiance to high-tech devices. Numerous books and articles appear on a regular basis lamenting the current state of the technological world – and yet too often the authors of such texts seem terrified of being labeled “anti-technology.” Therefore, the authors tie themselves in knots trying to stake out a position that is not evangelizing for technology but at the same time they refuse to become heretics to the religion of technology – and as a result they easily become the permitted voices of dissent who only seem to empower the evangels as they conduct the debate on the terms of technological society. They try to reform the religion of technology instead of recognizing that it is a faith premised upon worshiping a false god. After all, one is permitted to say that Google is getting too big, that the Apple Watch is unnecessary, and that the Internet should be called “the surveillance mall” – but to say:

“There is a growing awareness that scientific and technological progress cannot be equated with the progress of humanity and history, a growing sense that the way to a better future lies elsewhere.” (Francis, no. 113)

Well…one rarely hears such arguments today, precisely because the dominant ideology of our day places ample faith in equating “scientific and technological progress” with progress, as such. Granted, that was the type of argument being made by the likes of Mumford and Ellul – though the present predicament makes it woefully evident that too few heeded their warnings. Indeed, a leitmotif that can be detected amongst the works of many critics of technology is a desire to be proved wrong, as Mumford wrote:

“I would die happy if I knew that on my tombstone could be written these words, ‘This man was an absolute fool. None of the disastrous things that he reluctantly predicted ever came to pass!’ Yes: then I could die happy.” (Mumford, 528 – My Works and Days)

Yet to read over Mumford’s predictions in the present day is to understand why those words are not carved into his tombstone – for Mumford was not an “absolute fool,” he was acutely prescient. Though, alas, the likes of Mumford and Ellul too easily number amongst the ranks of “the great sages of the past” who, in Pope Francis’s words, “run the risk of going unheard amid the noise and distractions of an information overload.”

Despite the issues that various individuals will certainly have with Laudato Si’ – ranging from its stance towards women to its religious tonality – the element that is likely to disquiet the largest group is its serious critique of technology. Thus, it is somewhat amusing to consider the number of articles that have been penned about the encyclical which focus on the warnings about climate change but say little about Pope Francis’s comments about the danger of the “technological paradigm.” For the encyclical commits a profound act of heresy against the contemporary religion of technology – it dares to suggest that we have fallen for the PR spin about the devices in our pockets, it asks us to consider if these devices are truly filling an existential void or if they are simply distracting us from having to think about this absence, and it reminds us that we need not be passive consumers of technology. These arguments about technology are not new, and it is not new to make them in ethically rich or religiously loaded language; however, these are arguments which are verboten in contemporary discourse about technology. Alas, those who make such claims are regularly derided as “Luddites” or “NIMBYs” and banished to the fringes. And yet the historic Luddites were simply workers who felt they had the freedom “to limit and direct technology,” and, as anybody who knows about e-waste can attest, when people in affluent nations say “Not In My Back Yard” the toxic refuse simply winds up in somebody else’s back yard. Pope Francis writes that today:

“It has become countercultural to choose a lifestyle whose goals are even partly independent of technology, of its costs and its power to globalize and make us all the same.” (Francis, no. 108)

And yet, what Laudato Si’ may represent is an important turning point in discussions around technology, and a vital opportunity for a serious critique of technology to reemerge. For what Laudato Si’ does is advocate for a new cultural paradigm based upon harnessing technology as a tool instead of as an absolute. Furthermore, the inclusion of such a serious critique of technology in a widely discussed (and hopefully widely read) encyclical represents a point at which rigorously critiquing technology may be able to become less “countercultural.” Laudato Si’ is a profoundly pro-culture document insofar as it seeks to preserve human culture from being destroyed by the greed that is ruining the planet. It is a rare text that has the audacity to state: “you do not need that, and your desire for it is bad for you and bad for the planet.”

Laudato Si’ is a piece of fierce social criticism, and like numerous works from the critique of technology, it is a text that recognizes that one cannot truly claim to critique a society without being willing to turn an equally critical gaze towards the way that society creates and uses technology. The critique of technology is not new, but it has been sorely underrepresented in contemporary thinking around technology. It has been cast as the province of outdated doom mongers, but as Pope Francis demonstrates, the critique of technology remains as vital and timely as ever.

Too often of late, discussions about technology are conducted through rose-colored glasses, or worse, virtual reality headsets – Laudato Si’ dares to actually look at technology.

And to demand that others do the same.

4. The Bright Mountain

The end of the world is easy.

All it requires of us is that we do nothing, and what can be simpler than doing nothing? Besides, popular culture has made us quite comfortable with the imagery of dystopian states and collapsing cities. And yet the question to ask of every piece of dystopian fiction is “what did the world that paved the way for this terrible one look like?” To which the follow-up question should be: “did it look just like ours?” And to this, yet another follow-up question needs to be asked: “why didn’t people do something?” In a book bearing the uplifting title The Collapse of Western Civilization, Naomi Oreskes and Erik Conway analyze present inaction as if from the future, and write:

“the people of Western civilization knew what was happening to them but were unable to stop it. Indeed, the most startling aspect of this story is just how much these people knew, and how unable they were to act upon what they knew.” (Oreskes and Conway, 1-2)

This speaks to the fatalistic belief that despite what we know, things are not going to change, or that if change comes it will already be too late. One of the most interesting texts to emerge in recent years in the context of continually ignored environmental warnings is a slim volume titled Uncivilisation: The Dark Mountain Manifesto. It is the foundational text of a group of writers, artists, activists, and others that dares to take seriously the notion that we are not going to change in time. As the manifesto’s authors write:

“Secretly, we all think we are doomed: even the politicians think this; even the environmentalists. Some of us deal with it by going shopping. Some deal with it by hoping it is true. Some give up in despair. Some work frantically to try and fend off the coming storm.” (Hine and Kingsnorth, 9)

But the point is that change is coming – whether we believe it or not, and whether we want it or not. But what is one to do? The desire to retreat from the cacophony of modern society is nothing new and can easily sow the fields in which reactionary ideologies can grow. Particularly problematic is that the rejection of the modern world often entails a sleight of hand whereby those in affluent nations are able to shirk their responsibility to the world’s poor even as they walk somberly, flagellating themselves into the foothills. Apocalyptic romanticism, whether it be of the accelerationist or primitivist variety, paints an evocative image of the world of today collapsing so that a new world can emerge – but what Laudato Si’ counters with is a morally impassioned cry to think of the billions of people who will suffer and die. Think of those for whom fleeing to the foothills is not an option. We do not need to take up residence in the woods like latter day hermetic acolytes of Francis of Assisi, rather we need to take that spirit and live it wherever we find ourselves.

True, the easy retort to the claim “secretly, we all think we are doomed” is “I do not think we are doomed, secretly or openly” – but to read climatologists’ predictions and then to watch politicians grouse, whilst mining companies seek to extract even more fossil fuels, is to hear that “secret” voice grow louder. People have always been predicting the end of the world, and here we still are, which leads many to simply shrug off dire concerns. Furthermore, many worry that putting too much emphasis on woebegone premonitions overwhelms people and leaves them unable and unwilling to act. Perhaps this is why Al Gore’s film An Inconvenient Truth concludes not by telling people they must be willing to fundamentally alter their high-tech/high-consumption lifestyles but instead simply tells them to recycle. In Laudato Si’ Pope Francis writes:

“Doomsday predictions can no longer be met with irony or disdain. We may well be leaving to coming generations debris, desolation and filth.” (Francis, no. 161)

Those lines, particularly the first of the two, should be the twenty-first century replacement for “Keep Calm and Carry On.” For what Laudato Si’ makes clear is that now is not the time to “Keep Calm” but to get very busy, and it is a text that knows that if we “Carry On” then we are skipping aimlessly towards the cliff’s edge. And yet one of the elements of the encyclical that needs to be highlighted is that it is a document that does not look hopefully towards a coming apocalypse. In the encyclical, environmental collapse is not seen as evidence that biblical preconditions for Armageddon are being fulfilled. The sorry state of the planet is not the result of God’s plan but is instead the result of humanity’s inability to plan. The problem is not evil, for as Simone Weil wrote:

“It is not good which evil violates, for good is inviolate: only a degraded good can be violated.” (Weil, 70 – Gravity and Grace)

It is that the good of which people are capable is rarely the good which people achieve, even as possible tools for building the good life – such as technology – are degraded and mistaken for the good life itself. And thus the good is wasted, though it has not been destroyed.

Throughout Laudato Si’, Pope Francis praises the merits of an ascetic life. And though the encyclical features numerous references to Saint Francis of Assisi, the argument is not that we must all abandon our homes to seek out new sanctuary in nature; instead, the need is to learn from the sense of love and wonder with which Saint Francis approached nature. Complete withdrawal is not an option, for to withdraw would be to shirk our responsibility – we live in this world and we bear responsibility for it and for other people. In the encyclical’s estimation, those living in affluent nations cannot seek to quietly slip from the scene, nor can they claim they are doing enough by bringing their own bags to the grocery store. Rather, responsibility entails recognizing that the lifestyles of affluent nations have helped sow misery in many parts of the world – it is unethical for us to try to save our own cities without realizing the part we have played in ruining the cities of others.

Pope Francis writes – and here an entire section shall be quoted:

“Many things have to change course, but it is we human beings above all who need to change. We lack an awareness of our common origin, of our mutual belonging, and of a future to be shared with everyone. This basic awareness would enable the development of new convictions, attitudes and forms of life. A great cultural, spiritual and educational challenge stands before us, and it will demand that we set out on the long path of renewal.” (Francis, no. 202)

Laudato Si’ does not suggest that we can escape from our problems, that we can withdraw, or that we can “keep calm and carry on.” And though the encyclical is not a manifesto, if it were one it could possibly be called “The Bright Mountain Manifesto.” For what Laudato Si’ reminds its readers time and time again is that even though we face great challenges it remains within our power to address them, though we must act soon and decisively if we are to effect a change. We do not need to wander towards a mystery shrouded mountain in the distance, but work to make the peaks near us glisten – it is not a matter of retreating from the world but of rebuilding it in a way that provides for all. Nobody needs to go hungry, our cities can be beautiful, our lifestyles can be fulfilling, our tools can be made to serve us as opposed to our being made to serve tools, people can recognize the immense debt they owe to each other – and working together we can make this a better world.

Doing so will be difficult. It will require significant changes.

But Laudato Si’ is a document that believes people can still accomplish this.

In the end, Laudato Si’ is less about having faith in God than it is about having faith in people.

_____

Zachary Loeb is a writer, activist, librarian, and terrible accordion player. He earned his MSIS from the University of Texas at Austin, and is currently working towards an MA in the Media, Culture, and Communications department at NYU. His research areas include media refusal and resistance to technology, ethical implications of technology, infrastructure and e-waste, as well as the intersection of library science with the STS field. Using the moniker “The Luddbrarian,” Loeb writes at the blog Librarian Shipwreck, on which an earlier version of this post first appeared. He is a frequent contributor to The b2 Review Digital Studies section.

_____

Works Cited

Pope Francis. Encyclical Letter Laudato Si’ of the Holy Father Francis on Care For Our Common Home. Vatican Press, 2015. [Note – the numbers in all citations from this document refer to the section number, not the page number]

Ellul, Jacques. The Technological Society. Vintage Books, 1964.

Fromm, Erich. To Have or To Be? Harper & Row, Publishers, 1976.

Hine, Dougald and Kingsnorth, Paul. Uncivilisation: The Dark Mountain Manifesto. The Dark Mountain Project, 2013.

Mumford, Lewis. My Works and Days: A Personal Chronicle. Harcourt, Brace, Jovanovich, 1979.

Mumford, Lewis. Art and Technics. Columbia University Press, 2000.

Mumford, Lewis. Technics and Civilization. University of Chicago Press, 2010.

Noble, David. The Religion of Technology. Penguin, 1999.

Oreskes, Naomi and Conway, Erik M. The Collapse of Western Civilization: A View from the Future. Columbia University Press, 2014.

Weil, Simone. The Need for Roots. Routledge Classics, 2002.

Weil, Simone. Gravity and Grace. Routledge Classics, 2002. (the quote at the beginning of this piece is found on page 139 of this book)