a review of N. Katherine Hayles, How We Think: Digital Media and Contemporary Technogenesis (Chicago, 2012)

by R. Joshua Scannell

~

In How We Think, N. Katherine Hayles addresses a number of increasingly urgent problems facing both the humanities in general and scholars of digital culture in particular. In keeping with the research interests she has explored at least since 2002’s Writing Machines (MIT Press), Hayles examines the intersection of digital technologies and humanities practice to argue that contemporary transformations in the orientation of the University (and elsewhere) are attributable to shifts that ubiquitous digital culture has engendered in embodied cognition. She calls this process of mutual evolution between the computer and the human technogenesis (a term that is most widely associated with the work of Bernard Stiegler, although Hayles’s theories often aim in a different direction from Stiegler’s). Hayles argues that technogenesis is the basis for the reorientation of the academy, including students, away from established humanistic practices like close reading. Put another way, not only have we become posthuman (as Hayles discusses in her landmark 1999 University of Chicago Press book, How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics), but our brains have begun to evolve to think with computers specifically and digital media generally. Rather than delivering a rearguard eulogy for the humanities that was, Hayles advocates for an opening of the humanities to digital dromology; she sees the Digital Humanities as a particularly fertile ground from which to reimagine the humanities generally.

Hayles is an exceptional scholar, and while her theory of technogenesis is not particularly novel, she articulates it with a clarity and elegance that are welcome and useful in a field that is often cluttered with good ideas, unintelligibly argued. Her close engagement with work across a range of disciplines – from Hegelian philosophy of mind (Catherine Malabou) to theories of semiosis and new media (Lev Manovich) to experimental literary production – grounds an argument about the necessity of transmedial engagement in an effective praxis. Moreover, she ably shifts generic gears over the course of a relatively short manuscript, moving gracefully from quasi-ethnographic engagement with University administrators, to media archaeology à la Friedrich Kittler, to contemporary literary theory. Her critique of the humanities that is, therefore, doubles as a praxis: she is actually producing the discipline-flouting work that she calls on her colleagues to pursue.

The debate about the death and/or future of the humanities is weather-worn, but Hayles’s theory of technogenesis as a platform for engaging in it is a welcome change. For Hayles, the technogenetic argument centers on temporality, and the multiple temporalities embedded in computer processing and human experience. She envisions this relation as cybernetic, in which computer and human are integrated as a system through the feedback loops of their coemergent temporalities. So, computers speed up human responses, which lag behind innovations, which prompt beta test cycles at quicker rates, which demand that humans behave affectively, nonconsciously. The recursive relationship between human duration and machine temporality effectively mutates both. Humanities professors might complain that their students cannot read “closely” like they used to, but for Hayles this is a failure of those disciplines to imagine methods in step with technological changes. Instead of digital media making us “dumber” by reducing our attention spans, as Nicholas Carr argues, Hayles claims that the movement towards what she calls “hyper reading” is an ontological and biological fact of embodied cognition in the age of digital media. If “how we think” were posed as a question, the answer would be: bodily, quickly, cursorily, affectively, non-consciously.

Hayles argues that this doesn’t imply an eliminative teleology of human capacity, but rather an opportunity to think through novel, expansive interventions into this cyborg loop. We may be thinking (and feeling, and experiencing) differently than we used to, but this remains a fact of human existence. Digital media has shifted the ontics of our technogenetic reality, but it has not fundamentally altered its ontology. Morphological biology, in fact, entails ontological stability. To be human, and to think like one, is to be with machines, and to think with them. The kids, in other words, are all right.

This sort of quasi-Derridean or Stieglerian Hegelianism is obviously not uncommon in media theory. As Hayles deploys it, this disposition provides a powerful framework for thinking through the relationship of humans and machines without ontological reductivism on either end. Moreover, she engages this theory in a resolutely material fashion, evading the enervating tendency of many theorists in the humanities to reduce actually existing material processes to metaphor and semiosis. Her engagement with Malabou’s work on brain plasticity is particularly useful here. Malabou has argued that the choice facing the intellectual in the age of contemporary capitalism is between plasticity and self-fashioning. Plasticity is a quintessential demand of contemporary capitalism, whereas self-fashioning opens up radical possibilities for intervention. The distinction between these two potentialities, however, is unclear – and therefore demands an ideological commitment to the latter. Hayles is right to point out that this dialectic insufficiently accounts for the myriad ways in which we are engaged with media, and are in fact produced, bodily, by it.

But while Hayles’s critique is compelling, the responses she posits may be less so. Against what she sees as Malabou’s snide rejection of the potential of media, she argues:

It is precisely because contemporary technogenesis posits a strong connection between ongoing dynamic adaptation of technics and humans that multiple points of intervention open up. These include making new media…adapting present media to subversive ends…using digital media to reenvision academic practices, environments and strategies…and crafting reflexive representations of media self fashionings…that call attention to their own status as media, in the process raising our awareness of both the possibilities and dangers of such self-fashioning. (83)

With the exception of the ambiguous labor done by the word “subversive,” this reads like a catalog of demands made by administrators seeking to offload ever-greater numbers of students into MOOCs. This is unfortunately indicative of what is, throughout the book, a basic failure to engage with the political economics of “digital media and contemporary technogenesis.” Not every book must explicitly be political, and there is little more ponderous than the obligatory, token consideration of “the political” that so many media scholars feel compelled to make. And yet, this is a text that claims to explain “how” “we” “think” under post-industrial, cognitive capitalism, and so the lack of this engagement cannot help but show.

Universities across the country are collapsing due to lack of funding; students are practically reduced to debt bondage to cope with the costs of a desperately near-compulsory higher education that fails to deliver on its economic promises; and the “disruptive” deployment of digital media has conjured teratic corporate behemoths that all presume to “make the world a better place” on the backs of extraordinarily exploited workforces. There is no way for an account of the relationship between the human and the digital in this capitalist context not to be political. Given the general failure of the book to take these issues seriously, it is unsurprising that two of Hayles’s central suggestions for addressing the crisis in the humanities are 1) to use voluntary, hobbyist labor to do the intensive research that will serve as the data pool for digital humanities scholars and 2) to increasingly develop University partnerships with major digital conglomerates like Google.

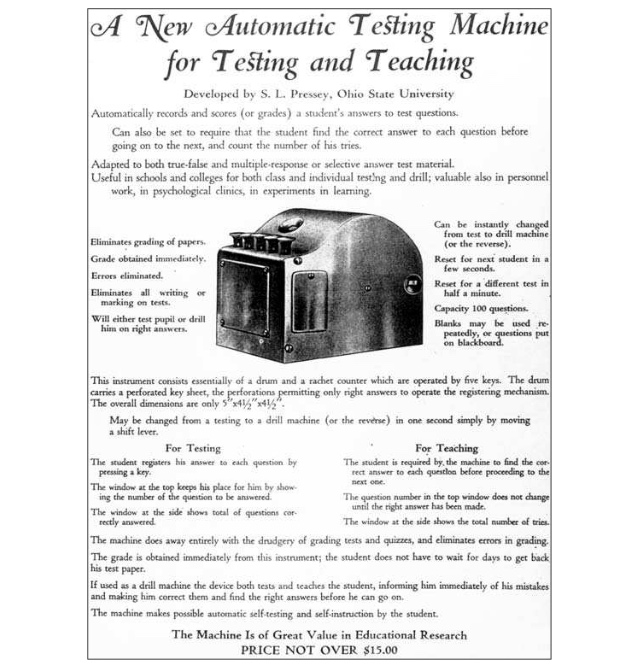

This reads like a cost-cutting administrator’s fever dream because, in the chapter in which Hayles promulgates novel (one might say “disruptive”) ideas for how best to move the humanities forward, she only speaks to administrators. There is no consideration of labor in this call for the reformation of the humanities. Given the enormous amount of writing that has been done on affective capitalism (Clough 2008), digital labor (Scholz 2012), emotional labor (Van Cleaf 2015), and so many other iterations of exploitation under digital capitalism, it boggles the mind a bit to see an embrace of the Mechanical Turk as a model for the future university.

While it may be true that humanities education is in crisis – that it lacks funding, that its methods don’t connect with students, that it increasingly must justify its existence on economic grounds – it is unclear that any of these aspects of the crisis are attributable to a lack of engagement with the potentials of digital media, or the recognition that humans are evolving with our computers. All of these crises are just as plausibly attributable to what, among many others, Chandra Mohanty identified ten years ago as the emergence of the corporate university, and the concomitant transformation of the mission of the university from one of fostering democratic discourse to one of maximizing capital (Mohanty 2003). In other words, we might as easily attribute the crisis to the tightening command that contemporary capitalist institutions have over the logic of the university.

Humanities departments are underfunded precisely because they cannot – almost by definition – justify their existence on monetary grounds. When students are not only acculturated, but are compelled by financial realities and debt, to understand the university as a credentialing institution capable of guaranteeing certain baseline waged occupations, it is no surprise that they are uninterested in “close reading” of texts. Or, rather, it might be true that students’ “hyperreading” is a consequence of their cognitive evolution with machines. But it is also just as plausibly a consequence of the fact that students are often working full-time jobs while taking on full-time (or more) course loads. They do not have the time or inclination to read long, difficult texts closely. They do not have the time or inclination because of the consolidating paradigm around what labor, and particularly their labor, is worth. Why pay for a researcher when you can get a hobbyist to do it for free? Why pay for a humanities line when Google and Wikipedia can deliver everything an institution might need to know?

In a political economy in which Amazon’s reduction of human employees to algorithmically-managed meat wagons is increasingly diagrammatic and “innovative” in industries from service to criminal justice to education, the proposals Hayles is making to ensure the future of the university seem more fifth columnary than emancipatory.

This stance also evacuates much-needed context from what are otherwise thoroughly interesting, well-crafted arguments. This is particularly true of How We Think’s engagement with Lev Manovich’s claims regarding narrative and database. Speaking reductively, in The Language of New Media (MIT Press, 2001), Manovich argued that there are two major communicative forms: narrative and database. Narrative, in his telling, is more or less linear, and dependent on human agency to be sensible. Novels and films, despite many modernist efforts to subvert this, tend toward narrative. The database, as opposed to the narrative, arranges information according to patterns, and does not depend on a diachronic point-to-point communicative flow to be intelligible. Rather, the database exists in multiple temporalities, with the accumulation of data for rhizomatic recall of seemingly unrelated information producing improbable patterns of knowledge production. Historically, he argues, narrative has dominated. But with the increasing digitization of cultural output, the database will more and more replace narrative.

Manovich’s dichotomy of media has been both influential and roundly criticized (not least by Manovich himself in Software Takes Command, Bloomsbury 2013). Hayles convincingly takes it to task for being reductive and instituting a teleology of cultural forms that isn’t borne out by cultural practice. Narrative, obviously, hasn’t gone anywhere. Hayles extends this critique by considering the distinctive ways space and time are mobilized by database and narrative formations. Databases, she argues, depend on interoperability between different software platforms that need to access the stored information. In the case of geographical information services and global positioning services, this interoperability depends on some sort of universal standard against which all information can be measured. Thus, Cartesian space and time are inevitably inserted into database logics, depriving them of the capacity for liveliness. That is to say that the need to standardize the units that measure space and time in machine-readable databases imposes a conceptual grid on the world that is creatively limiting. Narrative, on the other hand, does not depend on interoperability, and therefore does not have an absolute referent against which it must make itself intelligible. Given this, it is capable of complex and variegated temporalities not available to databases. Databases, she concludes, can only operate within spatial parameters, while narrative can represent time in different, more creative ways.

As an expansion and corrective to Manovich, this argument is compelling. Displacing his teleology and infusing it with a critique of the spatio-temporal work of database technologies and their organization of cultural knowledge is crucial. Hayles bases her claim on a detailed and fascinating comparison between the coding requirements of relational databanks and object-oriented databanks. But, somewhat surprisingly, she takes these different programming language models and metonymizes them as social realities. Temporality in the construction of objects transmutes into temporality as a philosophical category. It’s unclear how this leap holds without an attendant sociopolitical critique. But it is impossible to talk about the cultural logic of computation without talking about the social context in which this computation emerges. In other words, it is absolutely true that the “spatializing” techniques of coders (like clustering) render data points as spatial within the context of the data bank. But it is not an immediately logical leap to then claim that therefore databases as a cultural form are spatial and not temporal.

Further, in the context of contemporary data science, Hayles’s claims about interoperability are at least somewhat puzzling. Interoperability and standardized referents might be a theoretical necessity for databases to be useful, but the ever-inflating markets around “big data,” data analytics, insights, overcoming data siloing, edge computing, etc., demonstrate quite categorically that interoperability-in-general is not only non-existent, but is productively non-existent. That is to say, there are enormous industries that have developed precisely around efforts to synthesize information generated and stored across non-interoperable datasets. Moreover, data analytics companies provide insights almost entirely based on their capacity to track improbable data patterns and resonances across unlikely temporalities.

Far from a Cartesian world of absolute space and time, contemporary data science is a quite posthuman enterprise in committing machine learning to stretch, bend and strobe space and time in order to generate the possibility of bankable information. This is both theoretically true in the sense of setting algorithms to work sorting, sifting and analyzing truly incomprehensible amounts of data and materially true in the sense of the massive amount of capital and labor that is invested in building, powering, cooling, staffing and securing data centers. Moreover, the amount of data “in the cloud” has become so massive that analytics companies have quite literally reterritorialized information – particularly trades specializing in high-frequency trading, which practice “co-location,” locating data centers geographically closer to the sites from which they will be accessed in order to maximize processing speed.

Data science functions much like financial derivatives do (Martin 2015). Value in the present is hedged against the probable future spatiotemporal organization of software and material infrastructures capable of rendering a possibly profitable bundling of information in the immediate future. That may not be narrative, but it is certainly temporal. It is a temporality spurred by the queer fluxes of capital.

All of which circles back to the title of the book. Hayles sets out to explain How We Think. A scholar with such an impeccable track record for pathbreaking analyses of the relationship of the human to technology is setting a high bar for herself with such a goal. In an era in which (in no small part due to her work) it is increasingly unclear who we are, what thinking is or how it happens, it may be an impossible bar to meet. Hayles does an admirable job of trying to inject new paradigms into a narrow academic debate about the future of the humanities. Ultimately, however, there is more resting on the question than the book can account for, not least the livelihoods and futures of her current and future colleagues.

_____

R. Joshua Scannell is a PhD candidate in sociology at the CUNY Graduate Center. His current research looks at the political economic relations between predictive policing programs and urban informatics systems in New York City. He is the author of Cities: Unauthorized Resistance and Uncertain Sovereignty in the Urban World (Paradigm/Routledge, 2012).

_____

Patricia T. Clough. 2008. “The Affective Turn.” Theory Culture and Society 25(1): 1-22.

N. Katherine Hayles. 2002. Writing Machines. Cambridge: MIT Press.

N. Katherine Hayles. 1999. How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics. Chicago: University of Chicago Press.

Catherine Malabou. 2008. What Should We Do with Our Brain? New York: Fordham University Press.

Lev Manovich. 2001. The Language of New Media. Cambridge: MIT Press.

Lev Manovich. 2013. Software Takes Command. London: Bloomsbury.

Randy Martin. 2015. Knowledge LTD: Toward a Social Logic of the Derivative. Philadelphia: Temple University Press.

Chandra Mohanty. 2003. Feminism Without Borders: Decolonizing Theory, Practicing Solidarity. Durham: Duke University Press.

Trebor Scholz, ed. 2012. Digital Labor: The Internet as Playground and Factory. New York: Routledge.

Bernard Stiegler. 1998. Technics and Time, 1: The Fault of Epimetheus. Palo Alto: Stanford University Press.

Kara Van Cleaf. 2015. “Of Woman Born to Mommy Blogged: The Journey from the Personal as Political to the Personal as Commodity.” Women’s Studies Quarterly 43(3/4): 247-265.