a review of Peter Frase, Four Futures: Life After Capitalism (Verso Jacobin Series, 2016)

~

Charlie Brooker’s acclaimed British techno-dystopian television series, Black Mirror, returned last year in a more American-friendly form. The third season, now broadcast on Netflix, opened with “Nosedive,” a satirical depiction of a recognizable near future when user-generated social media scores—on the model of Yelp reviews, Facebook likes, and Twitter retweets—determine life chances, including access to basic services, such as housing, credit, and jobs. The show follows striver Lacie Pound—played by Bryce Dallas Howard—who, in seeking to boost her solid 4.2 life score, ends up inadvertently wiping out all of her points, in the nosedive named by the episode’s title. Brooker offers his viewers a nightmare variation on a now familiar online reality, as Lacie rates every human interaction and is rated in turn, with disastrous results. And this nightmare is not so far from the case, as online reputational hierarchies increasingly determine access to precarious employment opportunities. We can see this process in today’s so-called sharing economy, in which user approval determines how many rides will go to the Uber driver, or whether the room you are renting on Airbnb, in order to pay your own exorbitant rent, gets rented.

Brooker grappled with similar themes during the show’s first season; for example, “Fifteen Million Merits” shows us a future world of human beings forced to spend their time on exercise bikes, presumably in order to generate power plus the “merits” that function as currency, even as they are forced to watch non-stop television, advertisements included. It is television—specifically a talent show—that offers an apparent escape to the episode’s protagonists. Brooker revisits these concerns—which combine anxieties regarding new media and ecological collapse in the context of a viciously unequal society—in the final episode of the new season, entitled “Hated in the Nation,” which features robotic bees, built for pollination in a world after colony collapse, that are hacked and turned to murderous use. Here is an apt metaphor for the virtual swarming that characterizes so much online interaction.

Black Mirror corresponds to what literary critic Tom Moylan calls a “critical dystopia.” [1] Rather than a simple exercise in pessimism or anti-utopianism, Moylan argues that critical dystopias, like their utopian counterparts, also offer emancipatory political possibilities in exposing the limits of our social and political status quo, such as the naïve techno-optimism that is certainly one object of Brooker’s satirical anatomies. Brooker in this way does what Jacobin Magazine editor and social critic Peter Frase claims to do in his Four Futures: Life After Capitalism, a speculative exercise in “social science fiction” that uses utopian and dystopian science fiction as means to explore what might come after global capitalism. Ironically, Frase includes both online reputational hierarchies and robotic bees in his two utopian scenarios: one of the more dramatic, if perhaps inadvertent, ways that Frase collapses dystopian into utopian futures.

Frase echoes the opening lines of Marx and Engels’ Communist Manifesto as he describes the twin “specters of ecological catastrophe and automation” that haunt any possible post-capitalist future. While total automation threatens to make human workers obsolete, the global planetary crisis threatens life on earth as we have known it for the past 12,000 years or so. Frase contends that we are facing a “crisis of scarcity and a crisis of abundance at the same time,” making our moment one “full of promise and danger.” [2]

The attentive reader can already see in this introductory framework the too-often unargued assumptions and easy dichotomies that characterize the book as a whole. For example, why is total automation plausible in the next 25 years, according to Frase, who largely supports this claim by drawing on the breathless pronouncements of a technophilic business press that has made similar promises for nearly a hundred years? And why does automation equal abundance—assuming the more egalitarian social order that Frase alternately calls “communism” or “socialism”—especially when we consider the ecological crisis Frase invokes as one of his two specters? This crisis is very much bound to an energy-intensive technosphere that is already pushing against several of the planetary boundaries that make for a habitable planet; total automation would expand this same technosphere by several orders of magnitude, requiring that much more energy, materials, and environmental sinks to absorb tomorrow’s life-sized iPhones or their corpses. Frase deliberately avoids these empirical questions—and the various debates among economists, environmental scientists and computer programmers about the feasibility of AI, the extent to which automation is actually displacing workers, and the ecological limits to technological growth, at least as technology is currently constituted—by offering his work as the “social science fiction” mentioned above, perhaps in the vein of Black Mirror. He distinguishes this method from futurism or prediction, as he writes, “science fiction is to futurism as social theory is to conspiracy theory.” [3]

In one of his few direct citations, Frase invokes Marxist literary critic Fredric Jameson, who argues that conspiracy theory and its fictions are ideologically distorted attempts to map an elusive and opaque global capitalism: “Conspiracy, one is tempted to say, is the poor person’s cognitive mapping in the postmodern age; it is the degraded figure of the total logic of late capital, a desperate attempt to represent the latter’s system, whose failure is marked by its slippage into sheer theme and content.” [4] For Jameson, a more comprehensive cognitive map of our planetary capitalist civilization necessitates new forms of representation to better capture and perhaps undo our seemingly eternal and immovable status quo. In the words of McKenzie Wark, Jameson proposes nothing less than a “theoretical-aesthetic practice of correlating the field of culture with the field of political economy.” [5] And it is possibly with this “theoretical-aesthetic practice” in mind that Frase turns to science fiction as his preferred tool of social analysis.

The book accordingly proceeds in the way of a grid organized around the coordinates “abundance/scarcity” and “egalitarianism/hierarchy”—in another echo of Jameson, namely his structuralist penchant for Greimas squares. Hence we get abundance with egalitarianism, or “communism,” followed by its dystopian counterpart, rentism, or hierarchical plenty in the first two futures; similarly, the final futures move from an equitable scarcity, or “socialism” to a hierarchical and apocalyptic “exterminism.” Each of these chapters begins with a science fiction, ranging from an ostensibly communist Star Trek to the exterminationist visions presented in Orson Scott Card’s Ender’s Game, upon which Frase builds his various future scenarios. These scenarios are more often than not commentaries on present day phenomena, such as 3D printers or the sharing economy, or advocacy for various measures, like a Universal Basic Income, which Frase presents as the key to achieving his desired communist future.

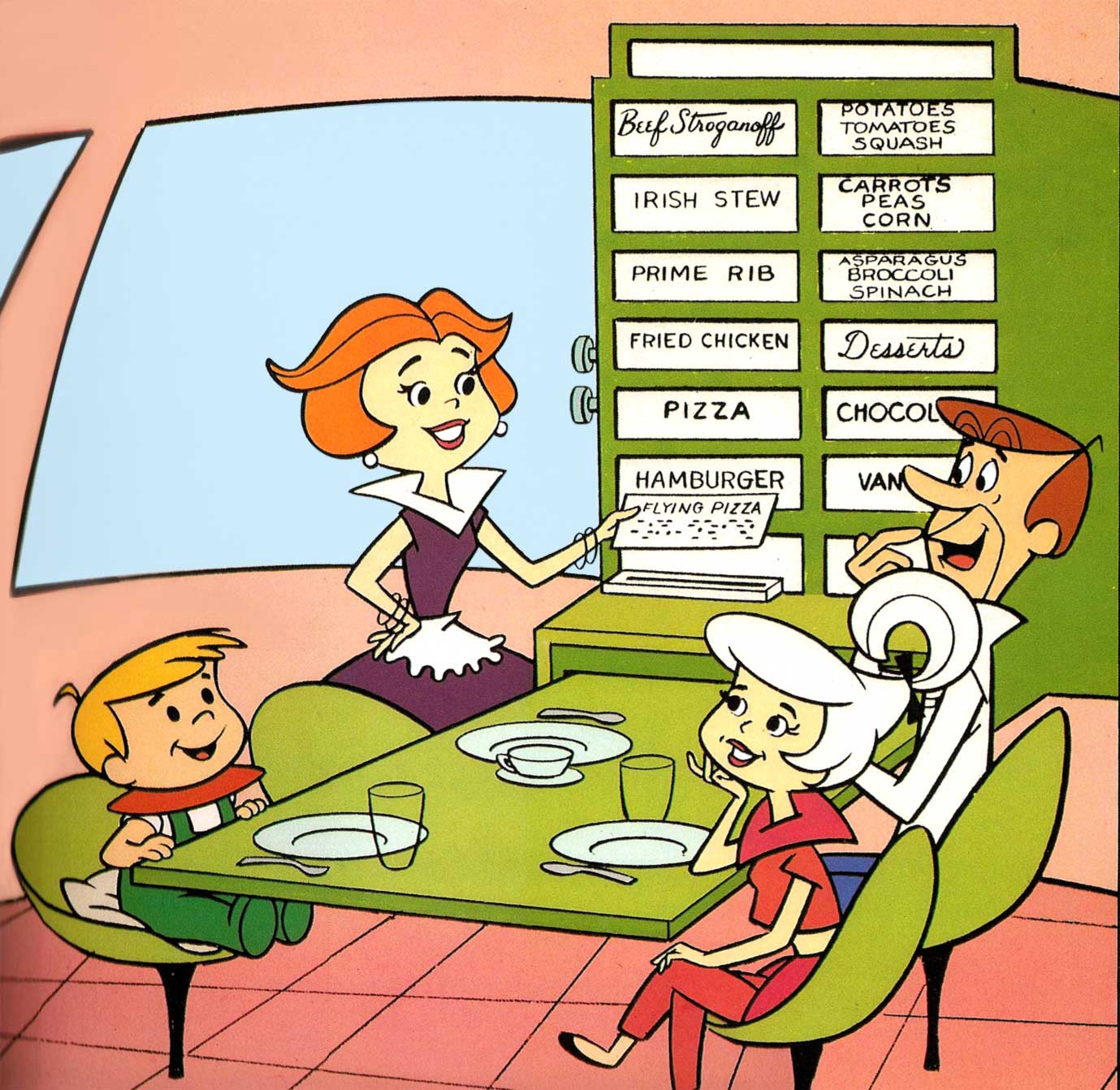

With each of his futures anchored in a literary (or cinematic, or televisual) science fiction narrative, Frase’s speculations rely on imaginative literature, even as he avoids any explicit engagement with literary criticism and theory, such as the aforementioned work of Jameson. Jameson famously argues (see Jameson 1982, and the more elaborated later versions in texts such as Jameson 2005) that the utopian text, beginning with Thomas More’s Utopia, simultaneously offers a mystified version of dominant social relations and an imaginative space for rehearsing radically different forms of sociality. But this dialectic of ideology and utopia is absent from Frase’s analysis, where his select space operas are all good or all bad: either the Jetsons or Elysium.

And, in a marked contrast with Jameson’s symptomatic readings, some science fiction is for Frase more equal than others when it comes to radical sociological speculation, as evinced by his contrasting views of George Lucas’s Star Wars and Gene Roddenberry’s Star Trek. According to Frase, in “Star Wars, you don’t really care about the particularities of the galactic political economy,” while in Star Trek, “these details actually matter. Even though Star Trek and Star Wars might superficially look like similar tales of space travel and swashbuckling, they are fundamentally different types of fiction. The former exists only for its characters and its mythic narrative, while the latter wants to root its characters in a richly and logically structured social world.” [6]

Frase here understates his investment in Star Trek, whose “structured social world” is later revealed as his ideal-type for a high tech fully automated luxury communism, while Star Wars is relegated to the role of the space fantasy foil. But surely the original Star Wars is at least an anticolonial allegory, intentionally inspired by the Vietnam War, in which a ragtag rebel alliance faces off against a technologically superior evil empire. Lucas turned to the space opera after he lost his bid to direct Apocalypse Now—which was originally based on Lucas’s own idea. According to one account of the franchise’s genesis, “the Vietnam War, which was an asymmetric conflict with a huge power unable to prevail against guerrilla fighters, instead became an influence on Star Wars. As Lucas later said, ‘A lot of my interest in Apocalypse Now carried over into Star Wars.’” [7]

Texts—literary, cinematic, and otherwise—often combine progressive and reactionary, utopian and ideological elements. Yet it is precisely the mixed character of speculative narrative that Frase ignores throughout his analysis, reducing each of his literary examples to unequivocally good or bad, utopian or dystopian, blueprints for “life after capitalism.” Why anchor radical social analysis in various science fictions while refusing basic interpretive argument? As with so much else in Four Futures, Frase uses assumption—asserting that Star Trek has one specific political valence or that total automation guided by advanced AI is an inevitability within 25 years—in the service of his preferred policy outcomes (and the nightmare scenarios that function as the only alternatives to those outcomes), while avoiding engagement with debates related to technology, ecology, labor, and the utopian imagination.

Frase in this way evacuates the politically progressive and critical utopian dimensions from George Lucas’s franchise, elevating the escapist and reactionary dimensions that represent the ideological, as opposed to the utopian, pole of this fantasy. Frase similarly ignores the ideological elements of Roddenberry’s Star Trek: “The communistic quality of the Star Trek universe is often obscured because the films and TV shows are centered on the military hierarchy of Starfleet, which explores the galaxy and comes into conflict with alien races. But even this seems largely a voluntarily chosen hierarchy.” [8]

Frase’s focus, regarding Star Trek, is almost entirely on the replicators that can make something, anything, from nothing, so that Captain Picard, from the eighties era series reboot, orders a “cup of Earl Grey, hot,” from one of these magical machines, and immediately receives Earl Grey, hot. Frase equates our present-day 3D printers with these same replicators over the course of all four of his futures, despite the fact that, unlike replicators, 3D printers require inputs: they do not make matter, but shape it.

3D printing encompasses a variety of processes in which would-be makers create an image with a computer and CAD (computer aided design) software, which in turn provides a blueprint for the three-dimensional object to be “printed.” This requires the addition of material—usually plastic—and the injection of that material into a mould. The most basic type of 3D printing involves heating “(plastic, glue-based) material that is then extruded through a nozzle. The nozzle is attached to an apparatus similar to a normal 2D ink-jet printer, just that it moves up and down, as well. The material is put on layer over layer. The technology is not substantially different from ink-jet printing, it only requires slightly more powerful computing electronics and a material with the right melting and extrusion qualities.” [9] This is still the most affordable and pervasive way to make objects with 3D printers—most often used to make small models and components. It is also the version of 3D printing that lends itself to celebratory narratives of post-industrial techno-artisanal home manufacture pushed by industry cheerleaders and enthusiasts alike. Yet the more elaborate versions of 3D printing—“printing” everything from complex machinery to food to human organs—rely on the more complex and expensive industrial versions of the technology that require lasers (e.g., stereolithography and selective laser sintering). Frase espouses a particular left techno-utopian line that sees the end of mass production in 3D printing—especially with the free circulation of the programs for various products outside of our intellectual property regime; this is how he distinguishes his communist utopia from the dystopian rentism that most resembles our current moment, with material abundance taken for granted. And it is this fantasy of material abundance and post-work/post-worker production that presumably appeals to Frase, who describes himself as an advocate of “enlightened Luddism.”

This is an inadvertently ironic characterization, considering the extent to which these emancipatory claims conceal and distort the labor discipline imperative that is central to the shape and development of this technology, as Johan Söderberg argues, “we need to put enthusiastic claims for 3D printers into perspective. One claim is that laid-off American workers can find a new source of income by selling printed goods over the Internet, which will be an improvement, as degraded factory jobs are replaced with more creative employment opportunities. But factory jobs were not always monotonous. They were deliberately made so, in no small part through the introduction of the same technology that is expected to restore craftsmanship. ‘Makers’ should be seen as the historical result of the negation of the workers’ movement.” [10]

Söderberg draws on the work of David Noble, who outlines how the numerical control technology central to the growth of post-war factory automation was developed specifically to de-skill and dis-empower workers during the Cold War period. Unlike Frase, both of these authors foreground those social relations, which include capital’s need to more thoroughly exploit and dominate labor, embedded in the architecture of complex megatechnical systems, from factory automation to 3D printers. In collapsing 3D printers into Star Trek-style replicators, Frase avoids these questions as well as the more immediately salient issue of resource constraints that should occupy any prognostication that takes the environmental crisis seriously.

The replicator is the key to Frase’s dream of endless abundance on the model of post-war US style consumer affluence and the end of all human labor. But, rather than a simple blueprint for utopia, Star Trek’s juxtaposition of techno-abundance with military hierarchy and a tacitly expansionist galactic empire—despite the show’s depiction of a Starfleet “prime directive” that forbids direct intervention into the affairs of the extraterrestrial civilizations encountered by the Federation’s starships, the Enterprise’s crew, like its ostensibly benevolent US original, almost always intervenes—is significant. The original Star Trek is arguably a liberal iteration of Kennedy-era US exceptionalism, and reflects a moment in which relatively widespread first world abundance was underwritten by the deliberate underdevelopment, appropriation, and exploitation of various “alien races’” resources, land, and labor abroad. Abundance in fact comes from somewhere and someone.

As historian H. Bruce Franklin argues, the original series reflects US Cold War liberalism, which combined Roddenberry’s progressive stances regarding racial inclusion within the parameters of the United States and its Starfleet doppelganger, with a tacitly anti-communist expansionist viewpoint, so that the show’s Klingon villains often serve as proxies for the Soviet menace. Franklin accordingly charts the show’s depictions of the Vietnam War, moving from a pro-war and pro-American stance to a mildly anti-war position in the wake of the Tet Offensive over the course of several episodes: “The first of these two episodes, ‘The City on the Edge of Forever’ and ‘A Private Little War,’ had suggested that the Vietnam War was merely an unpleasant necessity on the way to the future dramatized by Star Trek. But the last two, ‘The Omega Glory’ and ‘Let That Be Your Last Battlefield,’ broadcast in the period between March 1968 and January 1969, are so thoroughly infused with the desperation of the period that they openly call for a radical change of historic course, including an end to the Vietnam War and to the war at home.” [11]

Perhaps Frase’s inattention to Jameson’s dialectic of ideology and utopia reflects a too-literal approach to these fantastical narratives, even as he proffers them as valid tools for radical political and social analysis. We could see in this inattention a bit too much of the fan-boy’s enthusiasm, which is also evinced by the rather narrow and backward-looking focus on post-war space operas to the exclusion of the self-consciously radical science fiction narratives of Ursula Le Guin, Samuel Delany, and Octavia Butler, among others. These writers use the tropes of speculative fiction to imagine profoundly different social relations that are the end-goal of all emancipatory movements. In place of emancipated social relations, Frase too often relies on technology, and his readings must in turn be read with these limitations in mind.

Unlike the best speculative fiction, utopian or dystopian, Frase’s “social science fiction” too often avoids the question of social relations—including the social relations embedded in the complex megatechnical systems Frase takes for granted as neutral forces of production. He accordingly announces at the outset of his exercise: “I will make the strongest assumption possible: all need for human labor in the production process can be eliminated, and it is possible to live a life of pure leisure while machines do all the work.” [12] The science fiction trope effectively absolves Frase from engagement with the technological, ecological, or social feasibility of these predictions, even as he announces his ideological affinities with a certain version of post- and anti-work politics that breaks with orthodox Marxism and its socialist variants.

Frase’s Jetsonian vision of the future resonates with various futurist currents that we can now see across the political spectrum, from the Silicon Valley Singularitarianism of Ray Kurzweil or Elon Musk, on the right, to various neo-Promethean currents on the left, including so-called “left accelerationism.” Frase defends his assumption as a desire “to avoid long-standing debates about post-capitalist organization of the production process.” While such a strict delimitation is permissible for speculative fiction—an imaginative exercise regarding what is logically possible, including time travel or immortality—Frase specifically offers science fiction as a mode of social analysis, which presumably entails grappling with rather than avoiding current debates on labor, automation, and the production process.

Ruth Levitas, in her 2013 book Utopia as Method: The Imaginary Reconstitution of Society, offers a more rigorous definition of social science fiction via her eponymous “utopia as method.” This method combines sociological analysis and imaginative speculation, which Levitas defends as “holistic. Unlike political philosophy and political theory, which have been more open than sociology to normative approaches, this holism is expressed at the level of concrete social institutions and processes.” [13] But that attentiveness to concrete social institutions and practices, combined with counterfactual speculation regarding another kind of human social world, is exactly what is missing in Four Futures. Frase uses grand speculative assumptions—such as the inevitable rise of human-like AI or the complete disappearance of human labor, all within 25 years or so—in order to avoid significant debates that are ironically much more present in purely fictional works, such as the aforementioned Black Mirror or the novels of Kim Stanley Robinson, than in his own overtly non-fictional speculations. From the standpoint of radical literary criticism and radical social theory, Four Futures is wanting. It fails as analysis. And, if one primary purpose of utopian speculation, in its positive and negative forms, is to open an imaginative space in which wholly other forms of human social relations can be entertained, Frase’s speculative exercise also exhibits a revealing paucity of imagination.

This is most evident in Frase’s most explicitly utopian future, which he calls “communism,” without any mention of class struggle, the collective ownership of the means of production, or any of the other elements we usually associate with “communism”; instead, 3D printers-cum-replicators will produce whatever you need whenever you need it at home, an individualizing techno-solution to the problem of labor, production, and its organization that resembles alchemy in its indifference to material reality and the scarce material inputs required by 3D printers. Frase proffers a magical vision of technology so as to avoid grappling with the question of social relations; even more than this, in the coda to this chapter, he reveals the extent to which current patterns of social organization and stratification remain under his “communism.” Frase begins this coda with a question: “in a communist society, what do we do all day?” To which he responds: “The kind of communism I’ve described is sometimes mistakenly construed, by both its critics and its adherents, as a society in which hierarchy and conflict are wholly absent. But rather than see the abolition of the capital-wage relation as a single shot solution to all possible social problems, it is perhaps better to think of it in the terms used by political scientist, Corey Robin, as a way to ‘convert hysterical misery into ordinary unhappiness.’” [14]

Frase goes on to argue—rightly—that the abolition of class society or wage labor will not put an end to a variety of other oppressions, such as those based in gender and racial stratification; he in this way departs from the class reductionist tendencies sometimes on view in the magazine he edits. His invocation of Corey Robin is nonetheless odd considering the Promethean tenor of Frase’s preferred futures. Robin contends that while the end of exploitation, and capitalist social relations, would remove the major obstacle to human flourishing, human beings will remain finite and fragile creatures in a finite and fragile world. Robin in this way overlaps with Fredric Jameson’s remarkable essay on Soviet writer Andrei Platonov’s Chevengur, in which Jameson writes: “Utopia is merely the political and social solution of collective life: it does not do away with the tensions and inherent contradictions inherent in both interpersonal relations and in bodily existence itself (among them, those of sexuality), but rather exacerbates those and allows them free rein, by removing the artificial miseries of money and self-preservation [since] it is not the function of Utopia to bring the dead back to life nor abolish death in the first place.” [15] Both Jameson and Robin recall Frankfurt School thinker Herbert Marcuse’s distinction between necessary and surplus repression: while the latter encompasses all of the unnecessary miseries attendant upon a class stratified form of social organization that runs on exploitation, the former represents the necessary adjustments we make to socio-material reality and its limits.

It is telling that while Star Trek-style replicators fall within the purview of the possible for Frase, hierarchy, like death, will always be with us, since he at least initially argues that status hierarchies will persist after the “organizing force of the capital relation has been removed” (59). Frase oscillates between describing these status hierarchies as an unavoidable, if unpleasant, necessity and a desirable counter to the uniformity of an egalitarian society. Frase illustrates this point in recalling Cory Doctorow’s Down and Out in the Magic Kingdom, a dystopian novel that depicts a world where all people’s needs are met at the same time that everyone competes for reputational “points”—called Whuffie—on the model of Facebook “likes” and Twitter retweets. Frase’s communism here resembles the world of Black Mirror described above. Frase nonetheless shifts from the rhetoric of necessity to qualified praise in an extended discussion of Dogecoin, an alternative currency used to tip or “transfer a small number of to another Internet user in appreciation of their witty and helpful contributions” (60). Yet Dogecoin, among all cryptocurrencies, is mostly a joke, and like many cryptocurrencies is one whose “decentralized” nature scammers have used to their own advantage, most famously in 2015. In the words of one former enthusiast: “Unfortunately, the whole ordeal really deflated my enthusiasm for cryptocurrencies. I experimented, I got burned, and I’m moving on to less gimmicky enterprises.” [16]

But how is this dystopian scenario either necessary or desirable? Frase contends that “the communist society I’ve sketched here, though imperfect, is at least one in which conflict is no longer based in the opposition between wage workers and capitalists or on struggles…over scarce resources” (67). His account of how capitalism might be overthrown—through a guaranteed universal income—is insufficient, while resource scarcity and its relationship to techno-abundance remains unaddressed in a book that purports to take the environmental crisis seriously. What is of more immediate interest in the case of this coda to his most explicitly utopian future is Frase’s non-recognition of how internet status hierarchies and alternative currencies are modeled on and work in tandem with capitalist logics of entrepreneurial selfhood. We might consider Pierre Bourdieu’s theory of social and cultural capital in this regard, or how these digital platforms and their ever-shifting reputational hierarchies are the foundation of what Jodi Dean calls “communicative capitalism.” [17]

Yet Frase concludes his chapter by telling his readers that it would be a “misnomer” to call his communist future an “egalitarian configuration.” Perhaps Frase offers his fully automated Facebook utopia as counterpoint to the Cold War era critique of utopianism in general and communism in particular: it leads to grey uniformity and universal mediocrity. This response—a variation on Frase’s earlier discussion of Star Trek’s “voluntary hierarchy”—accepts the premise of the Cold War anti-utopian criticisms, i.e., how the human differences that make life interesting, and generate new possibilities, require hierarchy of some kind. In other words, this exercise in utopian speculation cannot move outside the horizon of our own present day ideological common sense.

We can again see this tendency at the very start of the book. Is total automation an unambiguous utopia or a reflection of Frase’s own unexamined ideological proclivities, on view throughout the various futures, for high tech solutions to complex socio-ecological problems? For various flavors of deus ex machina—from 3D printers to replicators to robotic bees—in place of social actors changing the material realities that constrain them through collective action? Conversely, are the “crisis of scarcity” and the visions of ecological apocalypse Frase evokes intermittently throughout his book purely dystopian or ideological? Surely, since Thomas Malthus’s 1798 Essay on Population, apologists for various ruling orders have used the threat of scarcity and material limits to justify inequity, exploitation, and class division: poverty is “natural.” Yet, can’t we also discern in contemporary visions of apocalypse a radical desire to break with a stagnant capitalist status quo? And in the case of the environmental state of emergency, don’t we have a rallying point for constructing a very different eco-socialist order?

Frase is a founding editor of Jacobin magazine and a long-time member of the Democratic Socialists of America. He nonetheless distinguishes himself from the reformist and electoral currents at those organizations, in addition to much of what passes for orthodox Marxism. Rather than full employment—for example—Frase calls for the abolition of work and the working class in a way that echoes more radical anti-work and post-workerist modes of communist theory. So, in a recent editorial published by Jacobin, entitled “What It Means to Be on the Left,” Frase differentiates himself from many of his DSA comrades in declaring that “The socialist project, for me, is about something more than just immediate demands for more jobs, or higher wages, or universal social programs, or shorter hours. It’s about those things. But it’s also about transcending, and abolishing, much of what we think defines our identities and our way of life.” Frase goes on to sketch an emphatically utopian communist horizon that includes the abolition of class, race, and gender as such. These are laudable positions, especially when we consider a new new left milieu some of whose most visible representatives dismiss race and gender concerns as “identity politics,” while redefining radical class politics as a better deal for some amorphous US working class within an apparently perennial capitalist status quo.

Frase’s utopianism in this way represents an important counterpoint within this emergent left. Yet his book-length speculative exercise—policy proposals cloaked as possible scenarios—reveals his own enduring investments in the simple “forces vs. relations of production” dichotomy that underwrote so much of twentieth-century state socialism with its disastrous ecological record and human cost. And this simple faith in the emancipatory potential of capitalist technology—given the right political circumstances despite the complete absence of what creating those circumstances might entail—frequently resembles a social democratic version of the Californian ideology or the kind of Silicon Valley conventional wisdom pushed by Elon Musk. This is a more efficient, egalitarian, and techno-utopian version of US capitalism. Frase mines various left communist currents, from post-operaismo to communization, only to evacuate these currents of their radical charge in marrying them to technocratic and technophilic reformism, hence UBI plus “replicators” will spontaneously lead to full communism. Four Futures is in this way an important, because symptomatic, expression of what Jason Smith (2017) calls “social democratic accelerationism,” animated by a strange faith in magical machines in addition to a disturbing animus toward ecology, non-human life, and the natural world in general.

_____

Anthony Galluzzo earned his PhD in English Literature at UCLA. He specializes in radical transatlantic English language literary cultures of the late eighteenth- and nineteenth centuries. He has taught at the United States Military Academy at West Point, Colby College, and NYU.

_____

Notes

[1] See Tom Moylan, Scraps of the Untainted Sky: Science Fiction, Utopia, Dystopia (Boulder: Westview Press, 2000).

[2] Peter Frase, Four Futures: Life After Capitalism (London: Verso Books, 2016), 3.

[3] Ibid, 27.

[4] Fredric Jameson, “Cognitive Mapping.” In C. Nelson and L. Grossberg, eds. Marxism and the Interpretation of Culture (Illinois: University of Illinois Press, 1990), 6.

[5] McKenzie Wark, “Cognitive Mapping,” Public Seminar (May 2015).

[6] Frase, 24.

[7] This space fantasy also exhibits the escapist, mythopoetic, and even reactionary elements Frase notes, for example, its hereditary caste of Jedi fighters and their ancient religion. As Benjamin Hufbauer observes, “in many ways, the political meanings in Star Wars were and are progressive, but in other ways the film can be described as middle-of-the-road, or even conservative.” Hufbauer, “The Politics Behind the Original Star Wars,” Los Angeles Review of Books (December 21, 2015).

[8] Frase, 49.

[9] Angry Workers World, “Soldering On: Report on Working in a 3D-Printer Manufacturing Plant in London,” libcom.org (March 24, 2017).

[10] Johan Söderberg, “A Critique of 3D Printing as a Critical Technology,” P2P Foundation (March 16, 2013).

[11] Franklin, “Star Trek in the Vietnam Era,” Science Fiction Studies, #62 = Volume 21, Part 1 (March 1994).

[12] Frase, 6.

[13] Ruth Levitas, Utopia As Method: The Imaginary Reconstitution of Society (London: Palgrave Macmillan, 2013), xiv-xv.

[14] Frase, 58.

[15] Jameson, “Utopia, Modernism, and Death,” in Seeds of Time (New York: Columbia University Press, 1996), 110.

[16] Kaleigh Rogers, “The Guy Who Ruined Dogecoin,” VICE Motherboard (March 6, 2015).

[17] See Jodi Dean, Democracy and Other Neoliberal Fantasies: Communicative Capitalism and Left Politics (Durham: Duke University Press, 2009).

_____

Works Cited

- Frase, Peter. 2016. Four Futures: Life After Capitalism. New York: Verso.

- Jameson, Fredric. 1982. “Progress vs. Utopia; Or Can We Imagine The Future?” Science Fiction Studies 9:2 (July). 147-158.

- Jameson, Fredric. 1996. “Utopia, Modernism, and Death,” in Seeds of Time. New York: Columbia University Press.

- Jameson, Fredric. 2005. Archaeologies of the Future: The Desire Called Utopia and Other Science Fictions. London: Verso.

- Levitas, Ruth. 2013. Utopia As Method: The Imaginary Reconstitution of Society. London: Palgrave Macmillan.

- Moylan, Tom. 2000. Scraps of the Untainted Sky: Science Fiction, Utopia, Dystopia. Boulder: Westview Press.

- Smith, Jason E. 2017. “Nowhere To Go: Automation Then And Now.” The Brooklyn Rail (March 1).