A review of George Gilder, Life After Google: The Fall of Big Data and the Rise of the Blockchain Economy (Regnery, 2018)

by David Gerard

George Gilder is most famous as a conservative author and speechwriter. He also knows his stuff about technology, and has a few things to say.

But what he has to say about blockchain in his book Life After Google is rambling, ill-connected and unconvincing — and falls prey to the fixed points in his thinking.

Gilder predicts that the Google and Silicon Valley approach — big data, machine learning, artificial intelligence, not charging users per transaction — is failing to scale, and will collapse under its own contradictions.

The Silicon Valley giants will be replaced by a world built around cryptocurrency, blockchains, sound money … and the obsolescence of philosophical materialism — the theory that thought and consciousness need only physical reality. That last one turns out to be Gilder’s main point.

At his best, as in his 1990 book Life After Television, Gilder explains consequences following from historical materialism — Marx and Engels’ theory that historical events emerge from economic developments and changes to the mode of production — to a conservative readership enamoured with the obsolete Great Man theory of history.

(That said, Gilder sure does love his Great Men. Men specifically.)

Life After Google purports to be about material forces that follow directly from technology. Gilder then mixes in his religious beliefs as, literally, claims about mathematics.

Gilder has a vastly better understanding of technology than most pop science writers. If Gilder talks tech, you should listen. He did a heck of a lot of work on getting out there and talking to experts for this book.

But Gilder never quite makes his case that blockchains are the solutions to the problems he presents — he just presents the existence of blockchains, then talks as if they’ll obviously solve everything.

Blockchains promise Gilder comfort in certainty: “The new era will move beyond Markov chains of disconnected probabilistic states to blockchain hashes of history and futurity, trust and truth,” apparently.

The book was recommended to me by a conservative friend, who sent me a link to an interview with Gilder on the Hoover Institution’s Uncommon Knowledge podcast. My first thought was “another sad victim of blockchain white papers.” You see this a lot — people tremendously excited by blockchain’s fabulous promises, with no idea that none of this stuff works or can work.

Gilder’s particular errors are more interesting. And — given his real technical expertise — less forgivable.

Despite its many structural issues — the book seems to have been left in dire need of proper editing — Life After Google was a hit with conservatives. Peter Thiel is a noteworthy fan. So we may need to pay attention. Fortunately, I’ve read it so you don’t have to.

About the Author

Gilder is fêted in conservative circles. His 1981 book Wealth and Poverty was a favourite of supply-side economics proponents in the Reagan era. He owned conservative magazine The American Spectator from 2000 to 2002.

Gilder is frequently claimed to have been Ronald Reagan’s favourite living author — mainly in his own publicity: “According to a study of presidential speeches, Mr. Gilder was President Reagan’s most frequently quoted living author.”

I tried tracking down this claim — and all citations I could find trace back to just one article: “The Gilder Effect” by Larissa MacFarquhar, in The New Yorker, 29 May 2000.

The claim is one sentence in passing: “It is no accident that Gilder — scourge of feminists, unrepentant supply-sider, and now, at sixty, a technology prophet — was the living author Reagan most often quoted.” The claim isn’t substantiated further in the New Yorker article — it reads like the journalist was told this and just put it in for colour.

Gilder despises feminism, and has described himself as “America’s number-one antifeminist.” He has written two books — Sexual Suicide, updated as Men and Marriage, and Naked Nomads — on this topic alone.

Also, per Gilder, Native American culture collapsed because it’s “a corrupt and unsuccessful culture,” as is Black culture — and not because of, e.g., massive systemic racism.

Gilder believes the biological theory of evolution is wrong. He co-founded the Discovery Institute in 1990, as an offshoot of the Hudson Institute. The Discovery Institute started out with papers on economic issues, but rapidly pivoted to promoting “intelligent design” — the claim that all living creatures were designed by “a rational agent,” and not evolved through natural processes. It’s a fancy term for creationism.

Gilder insisted for years that the Discovery Institute’s promotion of intelligent design totally wasn’t religious — even as judges ruled that intelligent design in schools was promotion of religion. Unfortunately for Gilder, we have the smoking gun documents showing that the Discovery Institute was explicitly trying to push religion into schools — the leaked Wedge Strategy document literally says: “Design theory promises to reverse the stifling dominance of the materialist worldview, and to replace it with a science consonant with Christian and theistic convictions.”

Gilder’s politics are approximately the polar opposite of mine. But the problems I had with Life After Google are problems his fans have also had. Real Clear Markets’ review is a typical example — it’s from the conservative media sphere and written by a huge Gilder fan, and he’s very disappointed at how badly the book makes its case for blockchain.

Gilder’s still worth taking seriously on tech, because he’s got a past record of insight — particularly his 1990s books Life After Television and Telecosm.

Life After Television

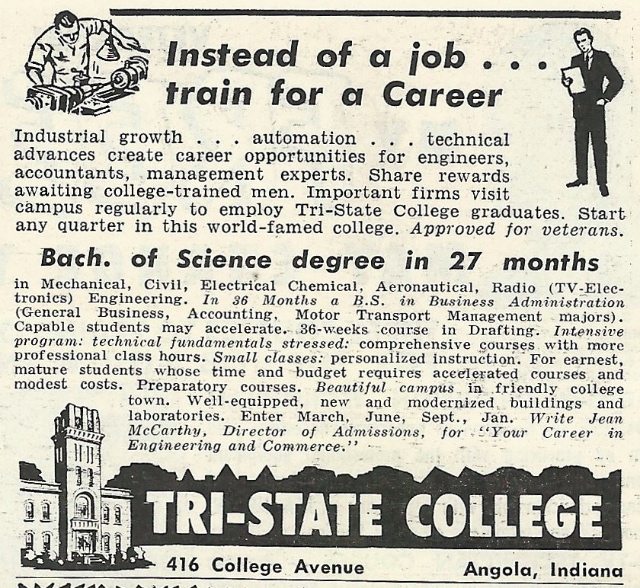

Life After Television: The Coming Transformation of Media and American Life is why people take Gilder seriously as a technology pundit. First published in 1990, it was expanded in 1992 and again in 1994.

The book predicts television’s replacement with computers on networks — the downfall of the top-down system of television broadcasting and the cultural hegemony it implies. “A new age of individualism is coming, and it will bring an eruption of culture unprecedented in human history.” Gilder does pretty well — his 1990 vision of working from home is a snapshot of 2020, complete with your boss on Zoom.

You could say this was obvious to anyone paying attention — Gilder’s thesis rests on technology that had already shown itself capable of supporting the future he spelt out — but not a lot of people in the mainstream were paying attention, and the industry was in blank denial. Even Wired, a few years later, was mostly still just terribly excited that the Internet was coming at all.

Life After Television talks way more about the fall of the television industry than the coming future network. In the present decade, it’s best read as a historical record of past visions of the astounding future.

If you remember the first two or three years of Wired magazine, that’s the world Gilder’s writing from. Gilder mentored Wired and executive editor Kevin Kelly in its first few years, and appeared on the cover of the March 1996 edition. Journalist and author Paulina Borsook detailed Gilder’s involvement in Wired in her classic 2000 book Cyberselfish: A Critical Romp through the Terribly Libertarian Culture of High Tech (see also an earlier article of the same name in Mother Jones), which critiques his politics, including his gender politics, at length, noting that “Gilder worshipped entrepreneurs and inventors and appeared to have found God in a microchip” (132-3) and describing “a phallus worship he has in common with Ayn Rand” (143).

The only issue I have with Gilder’s cultural predictions in Life After Television is that he doesn’t mention the future network’s negative side-effects — which is a glaring miss in a world where E. M. Forster predicted social media and some of its effects in The Machine Stops in 1909.

The 1994 edition of Life After Television goes in quite a bit harder than the 1990 edition. The book doesn’t say “Internet,” doesn’t mention the Linux computer operating system — which was already starting to be a game-changer — and only says “worldwide web” in the sense of “the global ganglion of computers and cables, the new worldwide web of glass and light.” (p23) But then there’s the occasional blinder of a paragraph, such as his famous prediction of the iPhone and its descendants:

Indeed, the most common personal computer of the next decade will be a digital cellular phone. Called personal digital assistants, among many other coinages, they will be as portable as a watch and as personal as a wallet; they will recognise speech and navigate streets, open the door and start the car, collect the mail and the news and the paycheck, connecting to thousands of databases of all kinds. (p20)

Gilder’s 1996 followup Telecosm is about what unlimited bandwidth would mean. It came just in time for a minor bubble in telecom stocks, because the Internet was just getting popular. Gilder made quite a bit of money in stock-picking, and so did subscribers to his newsletter — everyone’s a financial genius in a bubble. Then that bubble popped, and Gilder and his subscribers lost their shirts. But his main error was just being years early.

So if Gilder talks tech, he’s worth paying attention to. Is he right, wrong, or just early?

Gilder, Bitcoin and Gold

Gilder used to publish through larger generalist publishers. But since around 2000, he’s published through small conservative presses such as Regnery, small conservative think tanks, or his own Discovery Institute. Regnery, the publisher of Life After Google, is functionally a vanity press for the US far right, famous for, among other things, promising to publish a book by US Senator Josh Hawley after Simon & Schuster dropped it due to Hawley’s involvement with the January 6th Capitol insurrection.

Gilder caught on to Bitcoin around 2014. He told Reason that Bitcoin was “the perfect libertarian solution to the money enigma.”

In 2015, his monograph The 21st Century Case for Gold: A New Information Theory of Money was published by the American Principles Project — a pro-religious conservative think tank that advocates a gold standard and “hard money.”

This earlier book uses Bitcoin as a source of reasons that an economy based on gold could work in the 21st century:

Researches in Bitcoin and other digital currencies have shown that the real source of the value of any money is its authenticity and reliability as a measuring stick of economic activity. A measuring stick cannot be part of what it measures. The theorists of Bitcoin explicitly tied its value to the passage of time, which proceeds relentlessly beyond the reach of central banks.

Gilder drops ideas and catch-phrases from The 21st Century Case for Gold all through Life After Google without explaining himself — he just seems to assume you’re fully up on the Gilder Cinematic Universe. An editor should have caught this — a book needs to work as a stand-alone.

Life After Google’s Theses

The theses of Life After Google are:

- Google and Silicon Valley’s hegemony is bad.

- Google and Silicon Valley do capitalism wrong, and this is why they will collapse from their internal contradictions.

- Blockchain will solve the problems with Silicon Valley.

- Artificial intelligence is impossible, because Gödel, Turing and Shannon proved mathematically that creativity cannot result without human consciousness that comes from God.

This last claim is the real point of the book. Gilder affirmed that this was the book’s point in an interview with WND.

I should note, by the way, that Gödel, Turing and Shannon proved nothing of the sort. Gilder claims repeatedly that they and other mathematicians did, however.

Marxism for Billionaires

Gilder’s objections to Silicon Valley were reasonably mainstream and obvious by 2018. They don’t go much beyond what Clifford Stoll said in Silicon Snake Oil in 1995. And Stoll was speaking to his fellow insiders. (Gilder cites Stoll, though he calls him “Ira Stoll.”) But Gilder finds the points still worth making to his conservative audience, as in this early 2018 Forbes interview:

A lot of people have an incredible longing to reduce human intelligence to some measurable crystallization that can be grasped, calculated, projected and mechanized. I think this is a different dimension of the kind of Silicon Valley delusion that I describe in my upcoming book.

Gilder’s scepticism of Silicon Valley is quite reasonable … though he describes Silicon Valley as having adopted “what can best be described as a neo-Marxist political ideology and technological vision.”

There is no thing, no school of thought, that is properly denoted “neo-Marxism.” In the wild, it’s usually a catch-all for everything the speaker doesn’t like. It’s a boo-word.

Gilder probably realises that it comes across as inane to label the ridiculously successful billionaire and near-trillionaire capitalists of the present day as any form of “Marxist.” He attempts to justify his usage:

Marx’s essential tenet was that in the future, the key problem of economics would become not production amid scarcity but redistribution of abundance.

That’s not really regarded as the key defining point of Marxism by anyone else anywhere. (Maybe Elon Musk, when he’s tweeting words he hasn’t looked up.) I expect the libertarian post-scarcity transhumanists of the Bay Area, heavily funded by Gilder’s friend Peter Thiel, would be disconcerted too.

“Neo-Marxism” doesn’t rate further mention in the book — though Gilder does use the term in the Uncommon Knowledge podcast interview. Y’know, there’s red-baiting to get in.

So — Silicon Valley’s “neo-Marxism” sucks. “It is time for a new information architecture for a globally distributed economy. Fortunately, it is on its way.” Can you guess what it is?

You’re Doing Capitalism Wrong

Did you know that Isaac Newton was the first Austrian economist? I didn’t. (I still don’t.)

Gilder doesn’t say this outright. He does speak of Newton’s work in physics, as a “system of the world,” a phrase he confesses to having lifted from Neal Stephenson.

But Gilder is most interested in Newton’s work as Master of the Mint — “Newton’s biographers typically underestimate his achievement in establishing the information theory of money on a firm foundation.”

There is no such thing as “the information theory of money” — this is a Gilder coinage from his 2015 book The 21st Century Case for Gold.

Gilder’s economic ideas aren’t quite Austrian economics, but he’s fond of their jargon, and remains a huge fan of gold:

The failure of his alchemy gave him — and the world — precious knowledge that no rival state or private bank, wielding whatever philosopher’s stone, would succeed in making a better money. For two hundred years, beginning with Newton’s appointment to the Royal Mint in 1696, the pound, based on the chemical irreversibility of gold, was a stable and reliable monetary Polaris.

I’m pretty sure this is not how it happened, and that the ascendancy of Great Britain’s pound sterling had everything to do with it being backed by a world-spanning empire, and not any other factor. But Gilder goes one better:

Fortunately the lineaments of a new system of the world have emerged. It could be said to have been born in early September 1930, when a gold-based Reichsmark was beginning to subdue the gales of hyperinflation that had ravaged Germany since the mid-1920s.

I am unconvinced that this quite explains Germany in the 1930s. The name of an obvious and well-known political figure, who pretty much everyone else considers quite important in discussing Germany in the 1930s, is not mentioned in this book.

The rest of the chapter is a puréed slurry of physics, some actual information theory, a lot of alleged information theory, and Austrian economics jargon, giving the impression that these are all the same thing as far as Gilder is concerned.

Gilder describes what he thinks is Google’s “System of the World” — “The Google theory of knowledge, nicknamed ‘big data,’ is as radical as Newton’s and as intimidating as Newton’s was liberating.” There’s an “AI priesthood” too.

A lot of people were concerned early on about Google-like data sponges. Here’s Gilder on the forces at play:

Google’s idea of progress stems from its technological vision. Newton and his fellows, inspired by their Judeo-Christian world view, unleashed a theory of progress with human creativity and free will at its core. Google must demur.

… Finally, Google proposes, and must propose, an economic standard, a theory of money and value, of transactions and the information they convey, radically opposed to what Newton wrought by giving the world a reliable gold standard.

So Google’s failures include not proposing a gold standard, or perhaps the opposite.

Open source software is also part of this evil Silicon Valley plot — the very concept of open source. Because you don’t pay for each copy. Google is evil for participating in “a cult of the commons (rooted in ‘open source’ software)”.

I can’t find anywhere that Gilder has commented on Richard M. Stallman’s promotion of Free Software, of which “open source” was a business-friendly politics-washed rebranding — but I expect that if he had, the explosion would have been visible from space.

Gilder’s real problem with Google is how the company conducts its capitalism — how it applies creativity to the goal of actually making money. He seems to consider the successful billionaires of our age “neo-Marxist” because they don’t do capitalism the way he thinks they should.

I’m reminded of Bitcoin Austrians — Saifedean Ammous in The Bitcoin Standard is a good example — who argue with the behaviour of the real-life markets, when said markets are so rude as not to follow the script in their heads. Bitcoin maximalists regard Bitcoin as qualitatively unique, unable to be treated in any way like the hodgepodge of other things called “cryptos,” and a separate market of its own.

But the real-life crypto markets treat this as all one big pile of stuff, and trade it all on much the same basis. The market does not care about your ideology, only its own.

Gilder mixes up his issues with the Silicon Valley ideology — the Californian Ideology, or cyberlibertarianism, as it’s variously termed in academia — with a visceral hatred of capitalists who don’t do capitalism his way. He seems to despise the capitalists who don’t do it his way more than he despises people who don’t do capitalism at all.

(Gilder was co-author of the 1994 document “Magna Carta for the Knowledge Age” that spurred Langdon Winner to come up with the term “cyberlibertarianism” in the first place.)

Burning Man is bad because it’s a “commons cult” too. Gilder seems to be partially mapping out the Californian Ideology from the other side.

Gilder is outraged by Google’s lack of attention to security, in multiple senses of the word — customer security, software security, military security. Blockchain will fix all of this — somehow. It just does, okay?

Ads are apparently dying. Google runs on ads — but they’re on their way out. People looking to buy things search on Amazon itself first, then purchase things for money — in the proper businesslike manner.

Gilder doesn’t mention the sizable share of Amazon’s 2018 income that came from sales of advertising on its own platform. Nor does Gilder mention that Amazon’s entire general store business, which he approves of, posted huge losses in 2018, and was subsidised by Amazon’s cash-positive business line, the Amazon Web Services computing cloud.

Gilder visits Google’s data centre in The Dalles, Oregon. He notes that Google embodies Sun Microsystems’ old slogan “The Network is the Computer,” coined by John Gage of Sun in 1984 — though Gilder attributes this insight to Eric Schmidt, later of Google, based on an email that Schmidt sent Gilder when he was at Sun in 1993.

All successful technologies develop on an S-curve, a sigmoid function. They take off, rise in what looks like exponential growth … and then they level off. This is normal and expected. Gilder knows this. Correctly calling the levelling-off stage is good and useful tech punditry.
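To make the S-curve concrete, here’s a minimal Python sketch of the logistic function. The ceiling, growth rate and midpoint are arbitrary illustrative values, not fitted to any real technology. Early on, each step multiplies the value by nearly the same factor, which is why the curve looks exponential; past the midpoint, that factor sinks towards 1 as the curve levels off.

```python
import math


def logistic(t: float, ceiling: float = 1.0, rate: float = 1.0, midpoint: float = 0.0) -> float:
    """Logistic (sigmoid) S-curve: ceiling / (1 + e^(-rate * (t - midpoint)))."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def growth_ratio(t: float, **params) -> float:
    """How many times bigger the curve is one step later than it is now."""
    return logistic(t + 1, **params) / logistic(t, **params)


if __name__ == "__main__":
    # Illustrative parameters only: a ceiling of 100, reaching half of it at t = 10.
    params = {"ceiling": 100.0, "rate": 1.0, "midpoint": 10.0}
    for t in (0, 2, 4, 8, 10, 12, 16, 20):
        value = logistic(t, **params)
        ratio = growth_ratio(t, **params)
        print(f"t={t:2d}  value={value:8.3f}  step ratio={ratio:.3f}")
```

Running it shows the step ratio starting near e (about 2.72), looking for all the world like steady exponential growth, then falling to roughly 1.000 by t=20.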

Gilder notes the siren call temptations of having vastly more computing power than anyone else — then claims that Google will therefore surely fail. Nothing lasts forever; but Gilder doesn’t make the case for his claimed reasons.

Gilder details Google’s scaling problems at length — but at no point addresses blockchains’ scaling problems: a blockchain open to all participants can’t scale and stay fast and secure (the “blockchain trilemma”). I have no idea how he missed this one. If he could see that Google has scaling problems, how could he not even mention that public blockchains have scaling problems?

Gilder has the technical knowledge to be able to understand this is a key question, ask it and answer it. But he just doesn’t.

How would a blockchain system do the jobs presently done by the large companies he’s talking about? What makes Amazon good when Google is bad? The mere act of selling goods? Gilder resorts entirely to extrapolation from axioms, and never bothers with the step where you’d expect him to compare his results to the real world. Why would any of this work?

Gilder is fascinated by the use of Markov chains to statistically predict the next element of a series: “By every measure, the most widespread, immense, and influential of Markov chains today is Google’s foundational algorithm, PageRank, which encompasses the petabyte reaches of the entire World Wide Web.”
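For readers who haven’t met the idea: PageRank treats the Web as a Markov chain. A “random surfer” on a page follows one of its outgoing links at random, or occasionally jumps to any page at all, and a page’s rank is the long-run fraction of time the surfer spends there, i.e. the chain’s stationary distribution. Here’s a minimal power-iteration sketch on a hypothetical four-page web. The damping factor of 0.85 is the value commonly cited from Brin and Page’s original paper; none of this is Google’s production system.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 1000) -> dict[str, float]:
    """Stationary distribution of the 'random surfer' Markov chain, by power iteration.

    `links` maps each page to the pages it links to (every target must also be a key).
    With probability `damping` the surfer follows a random outgoing link; otherwise
    it jumps to a page chosen uniformly at random. A page with no outgoing links
    is treated as linking to every page.
    """
    pages = sorted(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from the uniform distribution
    for _ in range(max_iter):
        # Probability mass from random jumps, spread evenly over all pages.
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links[page] or pages  # dangling page: spread over everything
            share = damping * rank[page] / len(targets)
            for target in targets:
                new[target] += share
        # Stop once one more step of the chain barely changes the distribution.
        if sum(abs(new[p] - rank[p]) for p in pages) < tol:
            return new
        rank = new
    return rank


if __name__ == "__main__":
    web = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}  # hypothetical link graph
    for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
        print(f"{page}: {score:.4f}")
```

Page C, which every other page links to, comes out on top; D, which nothing links to, gets only the baseline 0.15/4 = 0.0375 from random jumps.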

Gilder interviews Robert Mercer — the billionaire whose Mercer Family Foundation helped bankroll Trump, Bannon, Brexit, and those parts of the alt-right that Peter Thiel didn’t fund.

Mercer started as a computer scientist. He made his money on Markov-related algorithms for financial trading — automating tiny trades that made no human sense, only statistical sense.

This offends Gilder’s sensibilities:

This is the financial counterpart of Markov models at Google translating languages with no knowledge of them. Believing as I do in the centrality of knowledge and learning in capitalism, I found this fact of life and leverage absurd. If no new knowledge was generated, no real wealth was created. As Peter Drucker said, ‘It is less important to do things right than to do the right things.’

Gilder is faced with a stupendously successful man, whose ideologies he largely concurs with, and who’s won hugely at capitalism — “Mercer and his consort of superstar scholars have, mutatis mutandis, excelled everyone else in the history of finance” — but in a way that is jarringly at odds with his own deeply-held beliefs.

Gilder believes Mercer’s system, like Google’s, “is based on big data that will face diminishing returns. It is founded on frequencies of trading that fail to correspond to any real economic activity.”

Gilder holds that it’s significant that Mercer’s model can’t last forever. But this is hardly a revelation — nothing lasts forever, and especially not an edge in the market. It’s the curse of hedge funds that any process that exploits inefficiencies will run out of other people’s inefficiencies in a few years, as the rest of the market catches on. Gilder doesn’t make the case that Mercer’s trick will fail any faster than it would be expected to just by being an edge in a market.

Ten Laws of the Cryptocosm

Chapter 5 is “Ten Laws of the Cryptocosm”. These aren’t from anywhere else — Gilder just made them up for this book.

“Cryptocosm” is a variant on Gilder’s earlier coinage “Telecosm,” the title of his 1996 book.

Blockchain spectators should be able to spot the magical foreshadowing term in rule four:

The fourth rule is ‘Nothing is free.’ This rule is fundamental to human dignity and worth. Capitalism requires companies to serve their customers and to accept their proof of work, which is money. Banishing money, companies devalue their customers.

Rules six and nine are straight out of The Bitcoin Standard:

The sixth rule: ‘Stable money endows humans with dignity and control.’ Stable money reflects the scarcity of time. Without stable money, an economy is governed only by time and power.

The ninth rule is ‘Private keys are held by individual human beings, not by governments or Google.’ … Ownership of private keys distributes power.

In a later chapter, Gilder critiques The Bitcoin Standard, which he broadly approves of.

Gödel’s Incompetence Theorem

Purveyors of pseudoscience frequently drop the word “quantum” or “chaos theory” to back their woo-mongering in areas that aren’t physics or mathematics. There’s a strain of doing the same thing with Gödel’s incompleteness theorems to make remarkable claims in areas that aren’t maths.

What Kurt Gödel actually said was that if you use a formal system of logic powerful enough to express basic arithmetic to build your mathematical theorems, you have a simple choice: either your system is incomplete, meaning there are true statements it can’t prove, and it can’t even prove its own consistency — or you introduce internal contradictions. So you can have holes in your maths, or you can be wrong.

Gödel’s incompleteness theorems had a huge impact on the philosophy of mathematics. They seriously affected Bertrand Russell’s work on the logicism programme, to model all of mathematics as formal logic, and caused issues for Hilbert’s second problem, which sought a proof that arithmetic is consistent — that is, free of any internal contradictions.

It’s important to note that Gödel’s theorems only apply in a particular technical sense, to particular very specific mathematical constructs. All the words are mathematical jargon, and not English.

But humans have never been able to resist a good metaphor — so, as with quantum physics, chaos theory and Turing completeness, people seized upon “Gödel” and ran off in all directions.

One particular fascination was what the theorems meant for the idea of philosophical materialism — whether interesting creatures like humans could really be completely explained by ordinary mathematics-based physics, or if there was something more in there. Gödel himself essayed haltingly in the direction of saying he thought there might be more than physics there — though he was slightly constrained by knowing what the mathematics actually said.

Compare the metaphor abuse surrounding blockchains. Deploy a mundane data structure and a proof-of-work system to determine who adds the next bit of data, and thus provide technically-defined, constrained and limited versions of “trustlessness,” “irreversibility” and “decentralisation.” People saw these words, and attributed their favoured shade of meaning of the plain-language words to anything even roughly descended from the mundane data structure — or that claimed it would be descended from it some time in the future.
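For the curious, here’s roughly how mundane that data structure is. This is a minimal Python sketch, not Bitcoin’s actual format: each block stores the hash of the block before it, and a toy proof of work searches for a nonce that makes the block’s SHA-256 hash start with a given number of zero hex digits. The field names, JSON serialisation and difficulty are illustrative assumptions; Bitcoin itself double-SHA-256 hashes a binary block header against a numeric target.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    prev_hash: str  # hash of the previous block: this link is the whole "chain"
    data: str
    nonce: int = 0

    def digest(self) -> str:
        # Serialise the fields in a fixed order, then hash them with SHA-256.
        payload = json.dumps([self.index, self.prev_hash, self.data, self.nonce])
        return hashlib.sha256(payload.encode()).hexdigest()


def mine(index: int, prev_hash: str, data: str, difficulty: int) -> Block:
    """Toy proof of work: try nonces until the hash has `difficulty` leading zero hex digits."""
    block = Block(index, prev_hash, data)
    while not block.digest().startswith("0" * difficulty):
        block.nonce += 1
    return block


def is_valid(chain: list[Block], difficulty: int) -> bool:
    """Each block must meet the difficulty and point at the hash of the block before it."""
    for i, block in enumerate(chain):
        if not block.digest().startswith("0" * difficulty):
            return False
        if i > 0 and block.prev_hash != chain[i - 1].digest():
            return False
    return True


if __name__ == "__main__":
    DIFFICULTY = 3  # about 4,096 hash attempts per block on average; illustrative only
    chain = [mine(0, "0" * 64, "genesis", DIFFICULTY)]
    for i, entry in enumerate(["alice pays bob 5", "bob pays carol 2"], start=1):
        chain.append(mine(i, chain[-1].digest(), entry, DIFFICULTY))
    print(is_valid(chain, DIFFICULTY))  # True
    chain[1].data = "alice pays bob 500"  # tamper with history
    print(is_valid(chain, DIFFICULTY))  # False: block 2 no longer points at block 1's hash
```

Changing any earlier block changes its hash, so every later block’s stored link breaks. That, plus the cost of redoing the proof of work, is roughly all the technical sense of “irreversibility” amounts to.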

Gilder takes Gödel’s incompleteness theorems, adds Claude Shannon on information theory, and mixes in his own religious views. He asserts that the mathematics of Shannon’s information theory and Gödel’s incompleteness theorems prove that creativity can only come from a human consciousness, created by God. Therefore, artificial intelligence is impossible.

This startling conclusion isn’t generally accepted. Torkel Franzén’s excellent Gödel’s Theorem: An Incomplete Guide to Its Use and Abuse, chapter 4, spends several pages bludgeoning variations on this dumb and bad idea to death:

there is no such thing as the formally defined language, the axioms, and the rules of inference of “human thought,” and so it makes no sense to speak of applying the incompleteness theorem to human thought.

If something is not literally a mathematical “formal system,” Gödel doesn’t apply to it.

The free Google searches and the fiat currencies are side issues — what Gilder really loathes is the very concept of artificial intelligence. It offends him.

Gilder leans heavily on the ideas of Gregory Chaitin — one of the few mathematicians with a track record of achievement in information theory who also buys into the idea that Gödel’s incompleteness theorem may disprove philosophical materialism. Of the few people convinced by Chaitin’s arguments, most happen to have matching religious beliefs.

It’s one thing to evaluate technologies according to an ethical framework informed by your religion. It’s quite another to make technological pronouncements directly from your religious views, and to claim mathematical backing for your religious views.

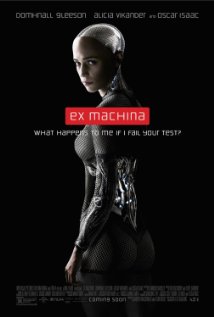

Your Plastic Pal Who’s Fun to Be With

Chapter 7 talks about artificial intelligence, and throwing hardware at the problem of machine learning. But it’s really about Gilder’s loathing of the notion of a general artificial intelligence that would be meaningfully comparable to a human being.

The term “artificial intelligence” has never denoted any particular technology — it’s the compelling science-fictional vision of your plastic pal who’s fun to be with, especially when he’s your unpaid employee. This image has been used through the past few decades to market a wide range of systems that do a small amount of the work a human might otherwise do.

But throughout Life After Google, Gilder conflates the hypothetical concept of human-equivalent general artificial intelligence with the statistical machine learning products that are presently marketed as “artificial intelligence.”

Gilder’s next book, Gaming AI: Why AI Can’t Think but Can Transform Jobs (Discovery Institute, 2020), confuses the two somewhat less — but still hammers on his completely wrong ideas about Gödel.

Gilder ends the chapter with three paragraphs setting out the book’s core thesis:

The current generation in Silicon Valley has yet to come to terms with the findings of von Neumann and Gödel early in the last century or with the breakthroughs in information theory of Claude Shannon, Gregory Chaitin, Anton Kolmogorov, and John R. Pierce. In a series of powerful arguments, Chaitin, the inventor of algorithmic information theory, has translated Gödel into modern terms. When Silicon Valley’s AI theorists push the logic of their case to explosive extremes, they defy the most crucial findings of twentieth-century mathematics and computer science. All logical schemes are incomplete and depend on propositions that they cannot prove. Pushing any logical or mathematical argument to extremes — whether ‘renormalized’ infinities or parallel universe multiplicities — scientists impel it off the cliffs of Gödelian incompleteness.

Chaitin’s ‘mathematics of creativity’ suggests that in order to push the technology forward it will be necessary to transcend the deterministic mathematical logic that pervades existing computers. Anything deterministic prohibits the very surprises that define information and reflect real creation. Gödel dictates a mathematics of creativity.

This mathematics will first encounter a major obstacle in the stunning successes of the prevailing system of the world not only in Silicon Valley but also in finance.

There’s a lot to unpack here. (That’s an academic jargon phrase meaning “yikes!”) But fundamentally, Gilder believes that Gödel’s incompleteness theorems mean that artificial intelligence can’t come up with true creativity. Because Gilder is a creationist.

The only place I can find Chaitin using a phrase akin to “mathematics of creativity” is in his 2012 book of intelligent design advocacy, Proving Darwin: Making Biology Mathematical, which Gilder cites. Chaitin writes:

To repeat: Life is plastic, creative! How can we build this out of static, perfect mathematics? We shall use postmodern math, the mathematics that comes after Gödel, 1931, and Turing, 1936, open not closed math, the math of creativity, in fact.

Whenever you see Gilder talk about “information theory,” remember that he’s using the special creationist sense of the term — a claim that biological complexity without God pushing it along would require new information being added, and that this is impossible.

Real information theory doesn’t say anything of the sort — the creationist version is a made-up pseudotheory, developed at the Discovery Institute. It abuses a scientific metaphor, passing off a loose analogy from an unrelated field as a solid scientific claim.

Gilder’s doing the thing that bitcoiners, anarchocapitalists and neoreactionaries do — where they ask a lot of the right questions, but come up with answers that are completely on crack, based on abuse of theories that they didn’t bother understanding.

Chapter 9 is about libertarian transhumanists of the LessWrong tendency, at the Future of Life Institute’s 2017 conference on hypothetical future artificial intelligences, hosted by physicist Max Tegmark.

Eliezer Yudkowsky, the founder of LessWrong, isn’t named or quoted, but the concerns are all reheated Yudkowsky: that a human-equivalent general artificial intelligence will have intelligence but not human values, will rapidly increase its intelligence, and thus its power, vastly beyond human levels, and so will doom us all. Therefore, we must program artificial intelligence to have human values — whatever those are.

Yudkowsky is not a programmer, but an amateur philosopher. His charity, the Machine Intelligence Research Institute (MIRI), does no programming, and its research outputs are occasional papers in mathematics. Until recently, MIRI was funded by Peter Thiel, but it’s now substantially funded by large Ethereum holders.

Gilder doesn’t buy Yudkowsky’s AI doomsday theory at all — he firmly believes that artificial intelligence cannot form a mind because, uh, Gödel: “The blind spot of AI is that consciousness does not emerge from thought; it is the source of it.”

Gilder doesn’t mention that this is because, as a creationist, he believes that true intelligence lies in souls. But he does say “The materialist superstition is a strange growth in an age of information.” So this chapter turns into an exposition of creationist “information theory”:

This materialist superstition keeps the entire Google generation from understanding mind and creation. Consciousness depends on faith—the ability to act without full knowledge and thus the ability to be surprised and to surprise. A machine by definition lacks consciousness. A machine is part of a determinist order. Lacking surprise or the ability to be surprised, it is self-contained and determined.

That is: Gilder defines consciousness as whatever it is a machine cannot have, therefore a machine cannot achieve consciousness.

Real science shows that the universe is a singularity and thus a creation. Creation is an entropic product of a higher consciousness echoed by human consciousness. This higher consciousness, which throughout human history we have found it convenient to call God, endows human creators with the space to originate surprising things.

You will be unsurprised to hear that “real science” does not say anything like this. But that paragraph is the closest Gilder comes in this book to naming the creationism that drives his outlook.

The roots of nearly a half-century of frustration reach back to the meeting in Königsberg in 1930, where von Neumann met Gödel and launched the computer age by showing that determinist mathematics could not produce creative consciousness.

You will be further unsurprised to hear that von Neumann and Gödel never produced a work saying any such thing.

We’re nine chapters in, a third of the way through the book, and someone from the blockchain world finally shows up — and, indeed, the first appearance of the word “blockchain” in the book at all. Vitalik Buterin, founder of Ethereum and MIRI’s largest individual donor, attends Tegmark’s AI conference: “Buterin succinctly described his company, Ethereum, launched in July 2015, as a ‘blockchain app platform.’”

The blockchain is “an open, distributed, unhackable ledger devised in 2008 by the unknown person (or perhaps group) known as ‘Satoshi Nakamoto’ to support his cryptocurrency, bitcoin.” This is the closest Gilder comes at any point in the book to saying what a blockchain in fact is.

Gilder says the AI guys are ignoring the power of blockchain — but they’ll get theirs, oh yes they will:

Google and its world are looking in the wrong direction. They are actually in jeopardy, not from an all-powerful artificial intelligence, but from a distributed, peer-to-peer revolution supporting human intelligence — the blockchain and new crypto-efflorescence … Google’s security foibles and AI fantasies are unlikely to survive the onslaught of this new generation of cryptocosmic technology.

Gilder asserts later in the book:

They see the advance of automation, machine learning, and artificial intelligence as occupying a limited landscape of human dominance and control that ultimately will be exhausted in a robotic universe — Life 3.0. But Charles Sanders Peirce, Kurt Gödel, Alonzo Church, Alan Turing, Emil Post, and Gregory Chaitin disproved this assumption on the most fundamental level of mathematical logic itself.

These mathematicians still didn’t do any such thing.

Gilder’s forthcoming book Life after Capitalism (Regnery, 2022), with a 2021 National Review essay as a taster, argues that his favoured mode of capitalism will reassert itself. Its thesis rests on what Gilder thinks information theory says.

How Does Blockchain Do All This?

Gilder has explained the present-day world, and his problems with it. The middle section of the book then goes through several blockchain-related companies and people who catch Gilder’s attention.

It’s around here that we’d expect Gilder to start explaining what the blockchain is, how it works, and precisely how it will break the Google paradigm of big data, machine learning and artificial intelligence — the way he did when talking about the downfall of television.

Gilder doesn’t even bother — he just starts talking about bitcoin and blockchains as Google-beaters, and carries through on the assumption that this is understood.

But he can’t get away with this — he claims to be making a case for the successor to the Google paradigm, a technological case … and he just doesn’t ever do so.

By the end of this section, Gilder seems to think he’s made his point clear that Google is having trouble scaling up — because they don’t charge a micro-payment for each interaction, or something — therefore various blockchain promises will win.

The trouble with this syllogism is that the second part doesn’t follow. Gilder presents blockchain projects he thinks have potential — but that’s all. He makes the first case, and just doesn’t make the second.

Peter Thiel Hates Universities Very Much

Instead, let’s go to the 1517 Fund — “led by venture capitalist-hackers Danielle Strachman and Mike Gibson and partly financed by Peter Thiel.” Gilder is also a founding partner.

Gilder is a massive Thiel fan, calling him “the master investor-philosopher Peter Thiel”:

Thiel is the leading critic of Silicon Valley’s prevailing philosophy of ‘inevitable’ innovation. [Larry] Page, on the other hand, is a machine-learning maximalist who believes that silicon will soon outperform human beings, however you want to define the difference.

Thiel, in turn, is a fan of Gilder and of Life After Google.

The 1517 Fund’s name comes from “another historic decentralization” — 31 October 1517 was the day that Martin Luther put up his ninety-five theses on a church door in Wittenberg.

The 1517 team want to take down the government conspiracy of paperwork university credentials, which ties into the fiat-currency-based system of the world. Peter Thiel offers Thiel Fellowships, where he pays young geniuses not to go to college. Vitalik Buterin, founder of Ethereum, got a Thiel Fellowship.

1517 also invests in the artificial intelligence stuff that Gilder derided in the previous section, but let’s never mind that.

The Universidad Francisco Marroquín in Guatemala is a university for Austrian and Chicago School economics. Gilder uses UFM as a launch pad for a rant about US academia, and the 1517 Fund’s “New 95” theses about how much Thiel hates the US university system. Again: they ask some good questions, but their premises are bizarre, and their answers are on crack.

Fictional Evidence

Gilder rambles about author Neal Stephenson, who he’s a massive fan of. The MacGuffin of Stephenson’s 1999 novel Cryptonomicon is a cryptographic currency backed by gold. Stephenson’s REAMDE (2011) is set in a Second Life-style virtual world whose currency is based on gold, and which includes something very like Bitcoin mining:

Like gold standards through most of human history — look it up — T’Rain’s virtual gold standard is an engine of wealth. T’Rain prospers mightily. Even though its money is metafictional, it is in fact more stable than currencies in the real world of floating exchange rates and fiat money.

Thus, fiction proves Austrian economics correct! Because reality certainly doesn’t — which is why Ludwig von Mises repudiated empirical testing of his monetary theories early on.

Is There Anything Bitcoin Can’t Do?

Gilder asserts that “Bitcoin has already fostered thousands of new apps and firms and jobs.” His example is cryptocurrency mining, which is notoriously light on labour requirements. Even as of 2022, the blockchain sector employed 18,000 software developers — or 0.07% of all developers.

“Perhaps someone should be building an ark. Or perhaps bitcoin is our ark — a new monetary covenant containing the seeds of a new system of the world.” I wonder why the story of the ark sprang to his mind.

One chapter is a dialogue, in which Gilder speaks to an imaginary Satoshi Nakamoto, Bitcoin’s pseudonymous creator, about how makework — Bitcoin mining — can possibly create value. “Think of this as a proposed screenplay for a historic docudrama on Satoshi. It is based entirely on recorded posts by Satoshi, interlarded with pleasantries and other expedients characteristic of historical fictions.”

Gilder fingers cryptographer Nick Szabo as the most likely candidate for Bitcoin’s pseudonymous creator, Satoshi Nakamoto — “the answer to three sophisticated textual searches that found Szabo’s prose statistically more akin to Nakomoto’s than that of any other suspected Satoshista.”

In the blockchain world, any amazing headline that would turn the world upside-down were it true is unlikely to be true. Gilder has referenced a CoinDesk article, which references research from Aston University’s Centre for Forensic Linguistics.

I tracked this down to an Aston University press release. The press release does not link to any research outputs — the “study” was an exercise that Jack Grieve at Aston gave his final-year students, then wrote up as a splashy bit of university press-release-ware.

The press release doesn’t make its case either: “Furthermore, the researchers found that the bitcoin whitepaper was drafted using Latex, an open-source document preparation system. Latex is also used by Szabo for all his publications.” LaTeX is used by most computer scientists for their publications — but the Bitcoin white paper was written in OpenOffice 2.4, not LaTeX.

This press release is still routinely used by lazy writers to claim that Szabo is Satoshi, ’cos they heard that linguistic analysis says so. Gilder could have dived an inch below the surface on this remarkable claim, and just didn’t.

Gilder then spends a chapter on Craig Wright, who — unlike Szabo — claims to be Satoshi. This is based on Andrew O’Hagan’s lengthy biographical piece on Wright, “The Satoshi Affair,” for the London Review of Books, reprinted in his book The Secret Life: Three True Stories. The chapter is largely a launch pad for how much better Vitalik Buterin’s ideas are than Wright’s.

Blockstack

We’re now into a list of blockchainy companies that Gilder is impressed with. This chapter introduces Muneeb Ali and his blockchain startup, Blockstack, whose pitch is a parallel internet where you own all your data, in some unspecified sense. Sounds great!

Ali wants a two-layer network: “monolith, the predictable carriers of the blockchain underneath, and metaverse, the inventive and surprising operations of its users above.” So, Ethereum then — a blockchain platform, with applications running on top.

Gilder recites the press release description of Blockstack and what it can do — i.e., might hypothetically do in the astounding future.

Under its new name, Stacks, the system is being used as a platform for CityCoins — local currencies on a blockchain — which started in the 2021 crypto bubble. MiamiCoin notably collapsed in price a few months after its 2021 launch, and the city only avoided a massive loss on the cryptocurrency because Stacks bailed it out.

Brendan Eich and Brave

Brendan Eich is famous in the technical world as one of the key visionaries behind the Netscape web browser, the Mozilla Foundation, and the Firefox web browser, and as the inventor of the JavaScript programming language.

Eich is most famous in the non-technical world for his 2008 donation to the campaign for Proposition 8, which amended the California constitution to ban gay marriage. This donation came to light in 2012, and made international press at the time.

Techies can get away with believing the most awful things, as long as they stay locked away in their basement — but Eich was made CEO of Mozilla in 2014, and somehow the board thought the donation against gay marriage wouldn’t immediately become 100% of the story.

One programmer, whose own marriage had been directly messed up by Proposition 8, said he couldn’t in good conscience keep working on Firefox-related projects — and this started a worldwide boycott of Mozilla and Firefox. Eich refused to walk back his donation in any manner — though he did promise not to actively seek to violate California discrimination law in the course of his work at Mozilla, so that’s nice — and quit a few weeks later.

Eich went off to found Brave, a new web browser that promises to solve the Internet advertising problem using Basic Attention Tokens, a token that promises a decentralised future for paying publishers that is only slightly 100% centralised in all functional respects.

Gilder uses Eich mostly to launch into a paean to Initial Coin Offerings — specifically, in their rôle as unregistered penny stock offerings. Gilder approves of ICOs bypassing regulation, and doesn’t even mention how the area was suffused with fraud, nor the scarcity of ICOs that delivered on any of their promises. The ICO market collapsed after multiple SEC actions against these blatant securities frauds.

Gilder also approves of Brave’s promise to combat Google’s advertising monopoly, by, er, replacing Google’s ads with Brave’s own ads.

Goodbye Digital

Dan Berninger’s internet phone startup Hello Digital is, or was, an enterprise so insignificant it isn’t in the first twenty companies returned by a Google search on “hello digital”. Gilder loves it.

Berninger’s startup idea involved end-to-end non-neutral precedence for Hello Digital’s data. And the US’s net neutrality rules apparently preclude this. Berninger sued the FCC to make it possible to set up high-precedence private clearways for Hello Digital’s data on the public Internet.

This turns out to be Berninger’s suit against the FCC protesting “net neutrality” — on which the Supreme Court denied certiorari in November 2018.

Somehow, Skype and many other applications managed enormously successful voice-over-internet a decade previously on a data-neutral Internet. But these other systems “fail to take advantage of the spontaneous convergence of interests on particular websites. They provide no additional sources of revenue for Web pages with independent content. And they fail to add the magic of high-definition voice.” Apparently, all of this requires proprietary clearways for such data on the public network? Huge if true.

Gilder brings up 5G mobile Internet. I think it’s supposed to be in Google’s interests? Therefore it must be bad. Nothing blockchainy here, this chapter’s just “Google bad, regulation bad”.

The Empire Strikes Back

Old world big money guys — Jamie Dimon, Warren Buffett, Charlie Munger, Paul Krugman — say Bitcoin is trash. Gilder maintains that this is good news for Bitcoin.

Blockchain fans and critics — and nobody else — will have seen Kai Stinchcombe’s blog post of December 2017, “Ten years in, nobody has come up with a use for blockchain.” Stinchcombe points out that “after years of tireless effort and billions of dollars invested, nobody has actually come up with a use for the blockchain — besides currency speculation and illegal transactions.” It’s a good post, and you should read it.

Gilder spends an entire chapter on this blog post. Some guy who wrote a blog post is a mid-level boss in this book.

Gilder concedes that Stinchcombe’s points are hard to argue with. But Stinchcombe merely being, you know, right, is irrelevant — because, astounding future!

Stinchcombe writes from the womb of the incumbent financial establishment, which has recently crippled world capitalism with a ten-year global recession.

One day a bitcoiner will come up with an argument that isn’t “but what about those other guys” — but today is not that day.

At Last, We Escape

We’ve made it to the last chapter. Gilder summarises how great the blockchain future will be:

The revolution in cryptography has caused a great unbundling of the roles of money, promising to reverse the doldrums of the Google Age, which has been an epoch of bundling together, aggregating, all the digital assets of the world.

Gilder confidently asserts ongoing present-day processes that are not, here in tawdry reality, happening:

Companies are abandoning hierarchy and pursuing heterarchy because, as the Tapscotts put it, ‘blockchain technology offers a credible and effective means not only of cutting out intermediaries, but also of radically lowering transaction costs, turning firms into networks, distributing economic power, and enabling both wealth creation and a more prosperous future.’

If you read Don and Alex Tapscott’s Blockchain Revolution (Random House, 2016), you’ll see that they too fail to demonstrate any of these claims in the existing present rather than the astounding future. Instead, the Tapscotts spend several hundred pages talking about how great it’s all going to be potentially, and only note blockchain’s severe technical limitations in passing at the very end of the book.

We finish with some stirring blockchain triumphalism:

Most important, the crypto movement led by bitcoin has reasserted the principle of scarcity, unveiling the fallacy of the prodigal free goods and free money of the Google era. Made obsolete will be all the lavish Google prodigies given away and Google mines and minuses promoted as ads, as well as Google Minds fantasizing superminds in conscious machines.

Bitcoin promoters routinely tout “scarcity” as a key advantage of their Internet magic beans — ignoring, as Gilder consistently does, that anyone can create a whole new magical Internet money by cut’n’paste, and they do. Austrian economics advocates have been pointing out that issue ever since it started happening with altcoins in the early 2010s.

The Google era is coming to an end because Google tries to cheat the constraints of economic scarcity and security by making its goods and services free. Google’s Free World is a way of brazenly defying the centrality of time in economics and reaching beyond the wallets of its customers directly to seize their time.

The only way in which the Google era has been shown to be “coming to an end” is that its technologies are reaching the tops of their S-curves. By Gilder’s own account of technological innovation, this absolutely counts as an end point, and he might even be right that Google’s era is ending — but his claimed reasons have just been asserted, and not at all shown.

By reestablishing the connections between computation, finance, and AI on the inexorable metrics of time and space, the great unbundling of the blockchain movement can restore economic reality.

The word “can” is doing all the work there. Bitcoin had been around for nine years when this book was published; it’s been thirteen years now, and there’s still a visible lack of progress on this front.

Everything will apparently decentralise naturally, because at last it can:

Disaggregated will be all the GAFAM (Google, Apple, Facebook, Amazon, Microsoft conglomerates) — the clouds of concentrated computing and commerce.

The trouble with this claim is that the whole crypto and blockchain middleman infrastructure is full of monopolies, rentiers and central points of failure — because centralisation is always more economically efficient than decentralisation.

We see recentralisation over and over. Bitcoin mining recentralised by 2014. Ethereum mining was always even more centralised than Bitcoin mining, and almost all practical use of Ethereum has long been dependent on ConsenSys’ proprietary Infura network. “Decentralisation” has always been a legal excuse to say “can’t sue me, bro,” and not any sort of operational reality.

Gilder concludes:

The final test is whether the new regime serves the human mind and consciousness. The measure of all artificial intelligence is the human mind. It is low-power, distributed globally, low-latency in proximity to its environment, inexorably bounded in time and space, and creative in the image of its creator.

Gilder wants you to know that he really, really hates the idea of artificial intelligence, for religious reasons.

Epilogue: The New System of the World

Gilder tries virtual reality goggles and likes them: “Virtual reality is the opposite of artificial intelligence, which tries to enhance learning by machines. Virtual reality asserts the primacy of mind over matter. It is founded on the singularity of human minds rather than a spurious singularity of machines.”

There’s a bit of murky restating of his theses: “The opposite of memoryless Markov chains is blockchains.” I’m unconvinced this sentence is any less meaningless with the entire book as context.

And Another Thing!

“Some Terms of Art and Information for Life after Google” at the end of the book isn’t a glossary — it’s a section for idiosyncratic assertions without justification that Gilder couldn’t fit in elsewhere, e.g.:

Chaitin’s Law: Gregory Chaitin, inventor of algorithmic information theory, ordains that you cannot use static, eternal, perfect mathematics to model dynamic creative life. Determinist math traps the mathematician in a mechanical process that cannot yield innovation or surprise, learning or life. You need to transcend the Newtonian mathematics of physics and adopt post-modern mathematics — the mathematics that follows Gödel (1931) and Turing (1936), the mathematics of creativity.

There doesn’t appear to be such a thing as “Chaitin’s Law” — all Google hits on the term are quotes of Gilder’s book.

Gilder also uses this section for claims that only make sense if you already buy into the jargon of goldbug economics, which failed out in the real world:

Economic growth: Learning tested by falsifiability or possible bankruptcy. This understanding of economic growth follows from Karl Popper’s insight that a scientific proposition must be framed in terms that are falsifiable or refutable. Government guarantees prevent learning and thus thwart economic growth.

Summary

Gilder is sharp as a tack in interviews. I can only hope to be that sharp when I’m seventy-nine. But Life After Google fails in important ways — ways that Regnery might have remedied had it bothered to take an editorial axe to the book. Gilder should have known better, in so many directions, and so should Regnery.

Gilder keeps making technological and mathematical claims based directly on his religious beliefs. This does none of his other ideas any favours.

Gilder is sincere. (Apart from that time he was busted lying about intelligent design not being intended to promote religion.) I think Gilder really does believe that Gödel’s incompleteness theorems and Shannon’s information theory, as further developed by Chaitin, mathematically prove that intelligence requires the hand of God. He just doesn’t show it, and nor has anyone else — particularly not any of the names he drops.

This book will not inform you as to the future of the blockchain. It’s worse than typical ill-informed blockchain advocacy text, because Gilder’s track record means we expect more of him. Gilder misses key points he has no excuse for missing.

The book may be of some use in its rôle as one of the texts informing the technically incoherent blockchain dreams of billionaires. But it’s a slog.

Those interested in blockchain — for or against — aren’t going to get anything useful from this book. Bitcoin advocates may see new avenues and memes for evangelism. Gilder fans appear disappointed so far.

_____

David Gerard is a writer, technologist, and leading critic of bitcoin and blockchain. He is the author of Attack of the 50-Foot Blockchain: Bitcoin, Blockchain, Ethereum and Smart Contracts (2017) and Libra Shrugged: How Facebook Tried to Take Over the Money (2020), and blogs at https://davidgerard.co.uk/blockchain/.

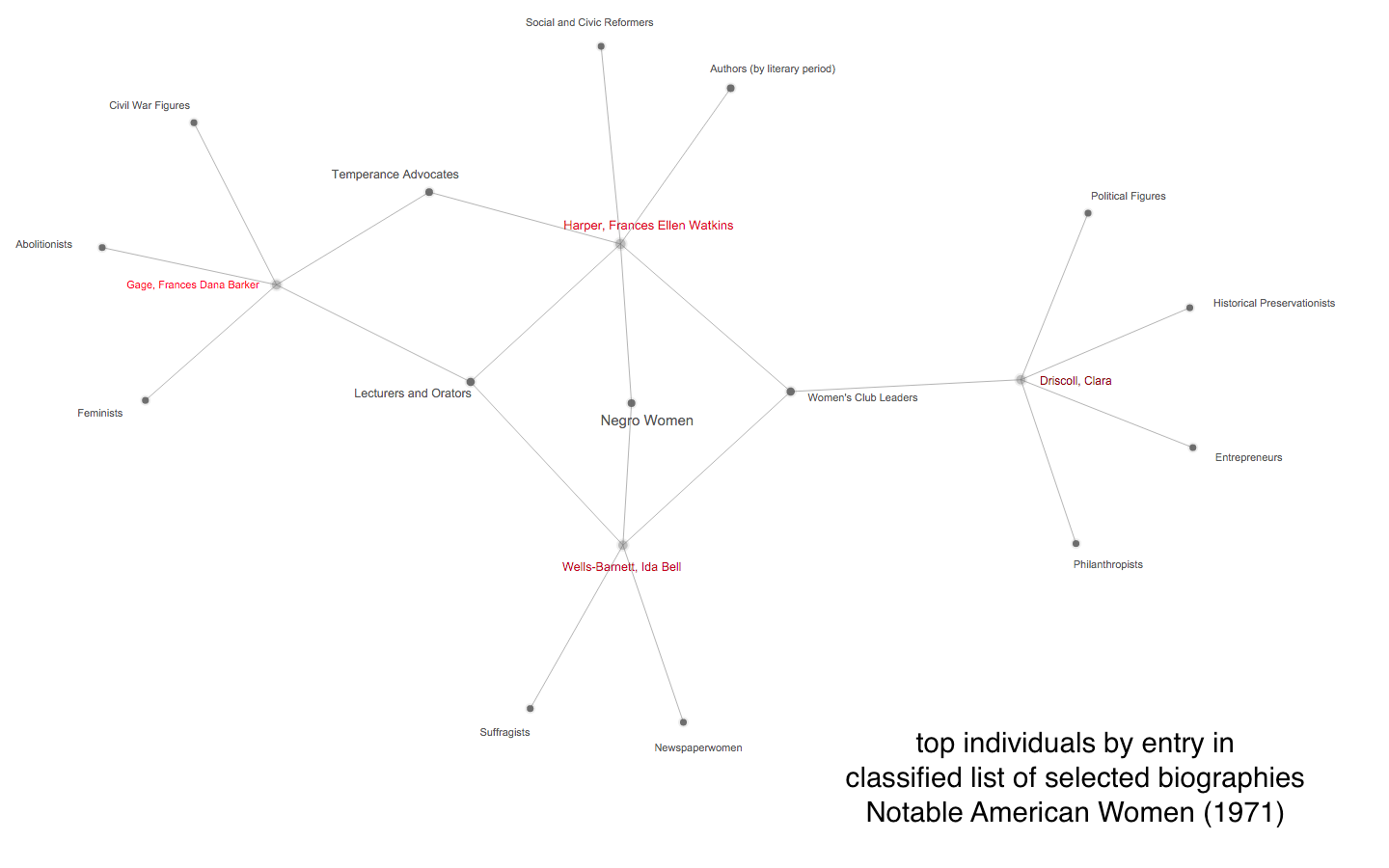

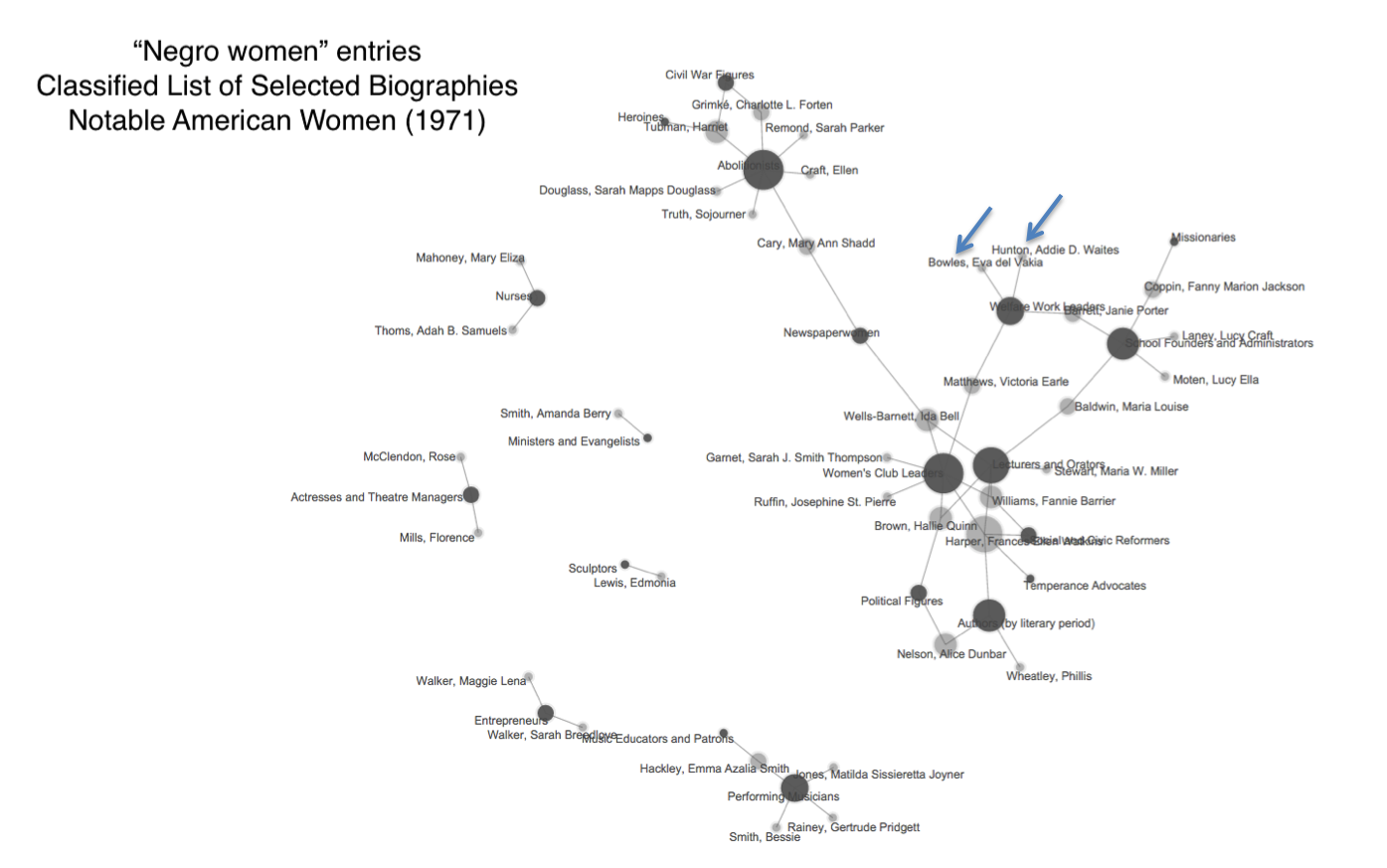

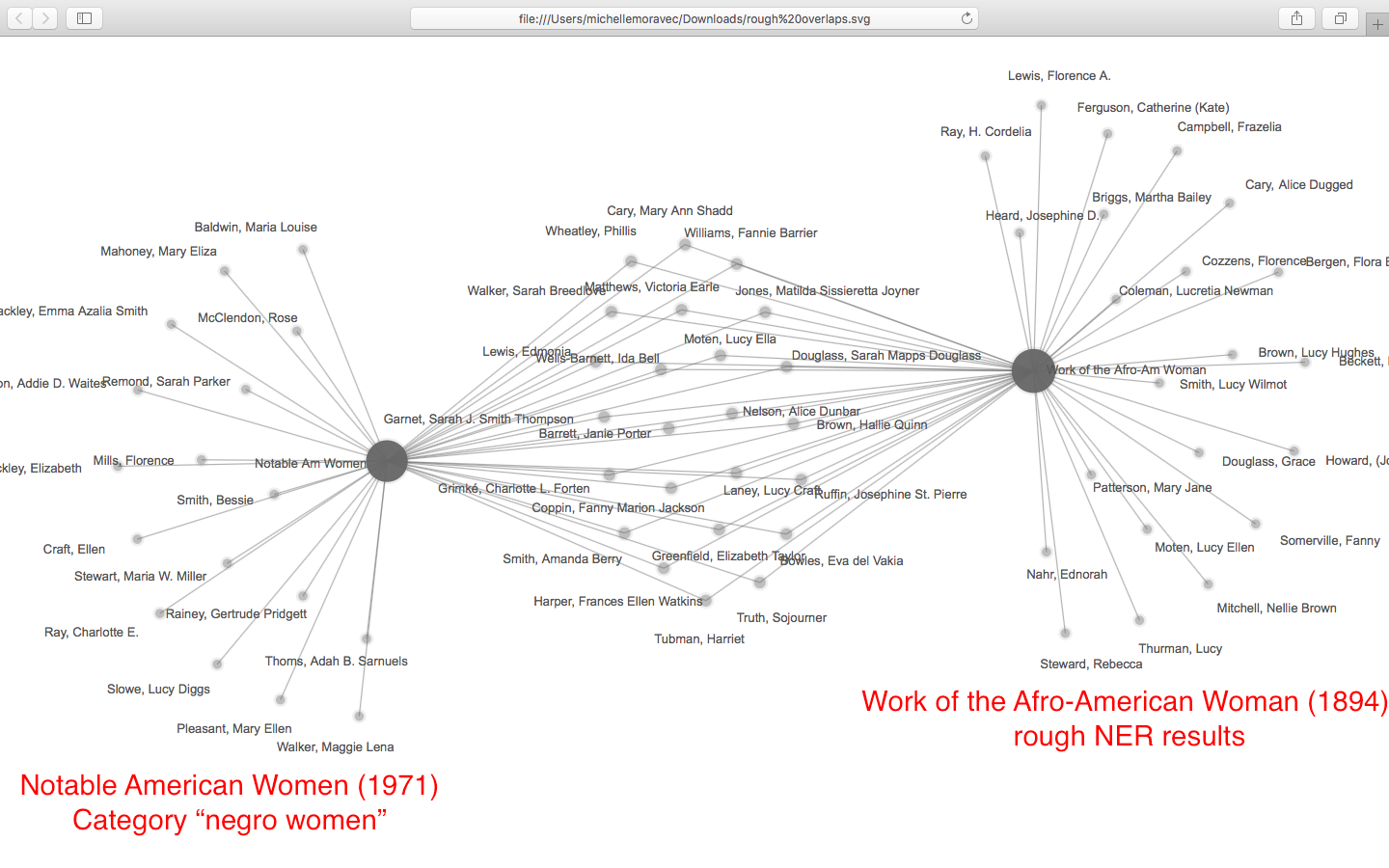

As this poetic quote by Sarah Josepha Hale, nineteenth-century author and influential editor reminds us, context is everything. The challenge, if we wish to write women back into history via Wikipedia, is to figure out how to shift the frame of references so that our stars can shine, since the problem of who precisely is “worthy of commemoration” or in Wikipedia language, who is deemed

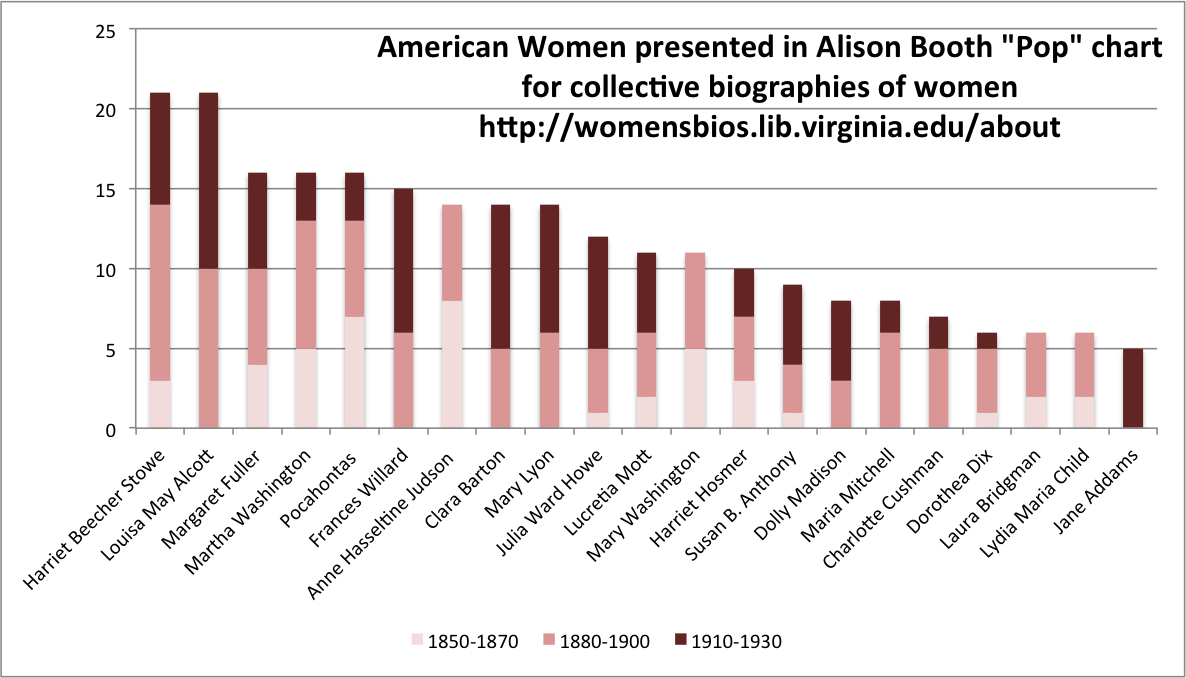

As this poetic quote by Sarah Josepha Hale, nineteenth-century author and influential editor reminds us, context is everything. The challenge, if we wish to write women back into history via Wikipedia, is to figure out how to shift the frame of references so that our stars can shine, since the problem of who precisely is “worthy of commemoration” or in Wikipedia language, who is deemed  mes of prosopography published during what might be termed the heyday of the genre, 1830-1940, when the rise of the middle class and increased literacy combined with relatively cheap production of books to make such volumes both practicable and popular. Booth also points out, that lest we consign the genre to the realm of mere curiosity, predating the invention of “women’s history” the compilers, editrixes or authors of these volumes considered them a contribution to “national history” and indeed Booth concludes that the volumes were “indispensable aids in the formation of nationhood.”

mes of prosopography published during what might be termed the heyday of the genre, 1830-1940, when the rise of the middle class and increased literacy combined with relatively cheap production of books to make such volumes both practicable and popular. Booth also points out, that lest we consign the genre to the realm of mere curiosity, predating the invention of “women’s history” the compilers, editrixes or authors of these volumes considered them a contribution to “national history” and indeed Booth concludes that the volumes were “indispensable aids in the formation of nationhood.”

a review of Frank Pasquale, The Black Box Society: The Secret Algorithms That Control Money and Information (Harvard University Press, 2015)

a review of Frank Pasquale, The Black Box Society: The Secret Algorithms That Control Money and Information (Harvard University Press, 2015)