By Dale Carrico

~

Science fiction is a genre of literature in which artifacts and techniques humans devise as exemplary expressions of our intelligence result in problems that perplex our intelligence or even bring it into existential crisis. It is scarcely surprising that a genre so preoccupied with the status and scope of intelligence would provide endless variations on the conceits of either the construction of artificial intelligences or contact with alien intelligences.

Of course, both the making of artificial intelligence and making contact with alien intelligence are organized efforts to which many humans are actually devoted, and not simply imaginative sites in which writers spin their allegories and exhibit their symptoms. It is interesting that after generations of failure the practical efforts to construct artificial intelligence or contact alien intelligence have often shunted their adherents to the margins of scientific consensus and invested these efforts with the coloration of scientific subcultures: While computer science and the search for extraterrestrial intelligence both remain legitimate fields of research, both AI and aliens also attract subcultural enthusiasms and resonate with cultic theology, each attracts its consumer fandoms and public Cons, each has its True Believers and even its UFO cults and Robot cults at the extremities.

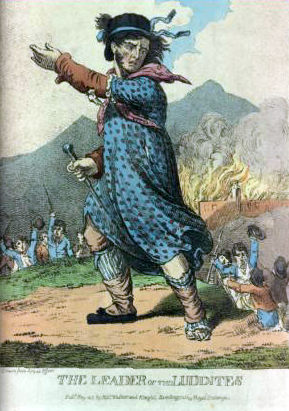

Champions of artificial intelligence in particular have coped in many ways with the serial failure of their project to achieve its desired end (which is not to deny that the project has borne fruit) whatever the confidence with which generation after generation of these champions have insisted that desired end is near. Some have turned to more modest computational ambitions, making useful software or mischievous algorithms in which sad vestiges of the older dreams can still be seen to cling. Some are simply stubborn dead-enders for Good Old Fashioned AI’s expected eventual and even imminent vindication, all appearances to the contrary notwithstanding. And still others have doubled down, distracting attention from the failures and problems bedeviling AI discourse simply by raising its pitch and stakes, no longer promising that artificial intelligence is around the corner but warning that artificial super-intelligence is coming soon to end human history.

Another strategy for coping with the failure of artificial intelligence on its conventional terms has assumed a higher profile among its champions lately, drawing support for the real plausibility of one science-fictional conceit — construction of artificial intelligence — by appealing to another science-fictional conceit, contact with alien intelligence. This rhetorical gambit has often been conjoined to the compensation of failed AI with its hyperbolic amplification into super-AI which I have already mentioned, and it is in that context that I have written about it before myself. But in a piece published a few days ago in The New York Times, “Outing A.I.: Beyond the Turing Test,” Benjamin Bratton, a professor of visual arts at U.C. San Diego and Director of a design think-tank, has elaborated a comparatively sophisticated case for treating artificial intelligence as alien intelligence with which we can productively grapple. Near the conclusion of his piece Bratton declares that “Musk, Gates and Hawking made headlines by speaking to the dangers that A.I. may pose. Their points are important, but I fear were largely misunderstood by many readers.” Of course these figures made their headlines by making the arguments about super-intelligence I have already rejected, and mentioning them seems to indicate Bratton’s sympathy with their gambit and even suggests that his argument aims to help us to understand them better on their own terms. Nevertheless, I take Bratton’s argument seriously not because of but in spite of this connection. Ultimately, Bratton makes a case for understanding AI as alien that does not depend on the deranging hyperbole and marketing of robocalypse or robo-rapture for its force.

In the piece, Bratton claims “Our popular conception of artificial intelligence is distorted by an anthropocentric fallacy.” The point is, of course, well taken, and the litany he rehearses to illustrate it is enormously familiar by now as he proceeds to survey popular images from Kubrick’s HAL to Jonze’s Her and to document public deliberation about the significance of computation articulated through such imagery as the “rise of the machines” in the Terminator franchise or the need for Asimov’s famous fictional “Three Laws of Robotics.” It is easy, and may nonetheless be quite important, to agree with Bratton’s observation that our computational/media devices lack cruel intentions and are not susceptible to Asimovian consciences, and hence thinking about the threats and promises and meanings of these devices through such frames and figures is not particularly helpful to us even though we habitually recur to them by now. As I say, it would be easy and important to agree with such a claim, but Bratton’s proposal is in fact a somewhat different one:

[A] mature A.I. is not necessarily a humanlike intelligence, or one that is at our disposal. If we look for A.I. in the wrong ways, it may emerge in forms that are needlessly difficult to recognize, amplifying its risks and retarding its benefits. This is not just a concern for the future. A.I. is already out of the lab and deep into the fabric of things. “Soft A.I.,” such as Apple’s Siri and Amazon recommendation engines, along with infrastructural A.I., such as high-speed algorithmic trading, smart vehicles and industrial robotics, are increasingly a part of everyday life.

Here the serial failure of the program of artificial intelligence is redeemed simply by declaring victory. Bratton demonstrates that crying uncle does not preclude one from still crying wolf. It’s not that Siri is some sickly premonition of the AI-daydream still endlessly deferred, but that it represents the real rise of what robot cultist Hans Moravec once promised would be our “mind children” but here and now as elfin aliens with an intelligence unto themselves. It’s not that calling a dumb car a “smart” car is simply a hilarious bit of obvious marketing hyperbole, but that it represents the recognition of a new order of intelligent machines among us. Rather than criticize the way we may be “amplifying its risks and retarding its benefits” by reading computation through the inapt lens of intelligence at all, he proposes that we should resist holding machine intelligence to the standards that have hitherto defined it for fear of making its recognition “too difficult.”

The kernel of legitimacy in Bratton’s inquiry is its recognition that “intelligence is notoriously difficult to define and human intelligence simply can’t exhaust the possibilities.” To deny these modest reminders is to indulge in what he calls “the pretentious folklore” of anthropocentrism. I agree that anthropocentrism in our attributions of intelligence has facilitated great violence and exploitation in the world, denying the dignity and standing of Cetaceans and Great Apes, but has also facilitated racist, sexist, xenophobic travesties by denigrating humans as beastly and unintelligent objects at the disposal of “intelligent” masters. “Some philosophers write about the possible ethical ‘rights’ of A.I. as sentient entities, but,” Bratton is quick to insist, “that’s not my point here.” Given his insistence that the “advent of robust inhuman A.I.” will force a “reality-based” “disenchantment” to “abolish the false centrality and absolute specialness of human thought and species-being,” which he blames in his concluding paragraph for providing “theological and legislative comfort to chattel slavery,” it is not entirely clear to me that emancipating artificial aliens is not finally among the stakes that move his argument, whatever his protestations to the contrary. But one can forgive him for not dwelling on such concerns: the denial of an intelligence and sensitivity provoking responsiveness and demanding responsibilities in us all to women, people of color, foreigners, children, the different, the suffering, nonhuman animals compels defensive and evasive circumlocutions that are simply not needed to deny intelligence and standing to an abacus or a desk lamp. It is one thing to warn of the anthropocentric fallacy but another to indulge in the pathetic fallacy.

Bratton insists to the contrary that his primary concern is that anthropocentrism skews our assessment of real risks and benefits. “Unfortunately, the popular conception of A.I., at least as depicted in countless movies, games and books, still seems to assume that humanlike characteristics (anger, jealousy, confusion, avarice, pride, desire, not to mention cold alienation) are the most important ones to be on the lookout for.” And of course he is right. The champions of AI have been more than complicit in this popular conception, eager to attract attention and funds for their project among technoscientific illiterates drawn to such dramatic narratives. But we are distracted from the real risks of computation so long as we expect risks to arise from a machinic malevolence that has never been on offer nor even in the offing. Writes Bratton: “Perhaps what we really fear, even more than a Big Machine that wants to kill us, is one that sees us as irrelevant. Worse than being seen as an enemy is not being seen at all.”

But surely the inevitable question posed by Bratton’s disenchanting exposé at this point should be: Why, once we have set aside the pretentious folklore of machines with diabolical malevolence, do we not set aside as no less pretentiously folkloric the attribution of diabolical indifference to machines? Why, once we have set aside the delusive confusion of machine behavior with (actual or eventual) human intelligence, do we not set aside as no less delusive the confusion of machine behavior with intelligence altogether? There is no question that, were a gigantic bulldozer with an incapacitated driver to swerve from a construction site onto a crowded city thoroughfare, this would represent a considerable threat, but however tempting it might be in the fraught moment or reflective aftermath poetically to invest that bulldozer with either agency or intellect, it is clear that nothing would be gained in the practical comprehension of the threat it poses by so doing. It is no more helpful now in an epoch of Greenhouse storms than it was for pre-scientific storytellers to invest thunder and whirlwinds with intelligence. Although Bratton makes great play over the need to overcome folkloric anthropocentrism in our figuration of and deliberation over computation, mystifying agencies and mythical personages linger on in his accounting however he insists on the alienness of “their” intelligence.

Bratton warns us about the “infrastructural A.I.” of high-speed financial trading algorithms, Google and Amazon search algorithms, “smart” vehicles (and no doubt weaponized drones and autonomous weapons systems would count among these), and corporate-military profiling programs that oppress us with surveillance and harass us with targeted ads. I share all of these concerns, of course, but personally insist that our critical engagement with infrastructural coding is profoundly undermined when it is invested with insinuations of autonomous intelligence. In “Art in the Age of Mechanical Reproducibility,” Walter Benjamin pointed out that when philosophers talk about the historical force of art they do so with the prejudices of philosophers: they tend to write about those narrative and visual forms of art that might seem argumentative in allegorical and iconic forms that appear analogous to the concentrated modes of thought demanded by philosophy itself. Benjamin proposed that perhaps the more diffuse and distracted ways we are shaped in our assumptions and aspirations by the durable affordances and constraints of the made world of architecture and agriculture might turn out to drive history as much or even more than the pet artforms of philosophers do. Lawrence Lessig made much the same point when he declared at the turn of the millennium that “Code Is Law.”

It is well known that special interests with rich patrons shape the legislative process and sometimes even explicitly craft legislation word for word in ways that benefit them to the cost and risk of majorities. It is hard to see how our assessment of this ongoing crime and danger would be helped and not hindered by pretending legislation is an autonomous force exhibiting an alien intelligence, rather than a constellation of practices, norms, laws, institutions, ritual and material artifice, the legacy of the historical play of intelligent actors and the site for the ongoing contention of intelligent actors here and now. To figure legislation as a beast or alien with a will of its own would amount to a fetishistic displacement of intelligence away from the actual actors actually responsible for the forms that legislation actually takes. It is easy to see why such a displacement is attractive: it profitably abets the abuses of majorities by minorities while it absolves majorities from conscious complicity in the terms of their own exploitation by laws made, after all, in our names. But while these consoling fantasies have an obvious allure this hardly justifies our endorsement of them.

I have already written in the past about those who want to propose, as Bratton seems inclined to do in the present, that the collapse of global finance in 2008 represented the working of inscrutable artificial intelligences facilitating rapid transactions and supporting novel financial instruments of what was called by Long Boom digerati the “new economy.” I wrote:

It is not computers and programs and autonomous techno-agents who are the protagonists of the still unfolding crime of predatory plutocratic wealth-concentration and anti-democratizing austerity. The villains of this bloodsoaked epic are the bankers and auditors and captured-regulators and neoliberal ministers who employed these programs and instruments for parochial gain and who then exonerated and rationalized and still enable their crimes. Our financial markets are not so complex we no longer understand them. In fact everybody knows exactly what is going on. Everybody understands everything. Fraudsters [are] engaged in very conventional, very recognizable, very straightforward but unprecedentedly massive acts of fraud and theft under the cover of lies.

I have already written in the past about those who want to propose, as Bratton seems inclined to do in the present, that our discomfiture in the setting of ubiquitous algorithmic mediation results from an autonomous force over which human intentions are secondary considerations. I wrote:

[W]hat imaginary scene is being conjured up in this exculpatory rhetoric in which inadvertent cruelty is ‘coming from code’ as opposed to coming from actual persons? Aren’t coders actual persons, for example? … [O]f course I know what [is] mean[t by the insistence…] that none of this was ‘a deliberate assault.’ But it occurs to me that it requires the least imaginable measure of thought on the part of those actually responsible for this code to recognize that the cruelty of [one user’s] confrontation with their algorithm was the inevitable at least occasional result for no small number of the human beings who use Facebook and who live lives that attest to suffering, defeat, humiliation, and loss as well as to parties and promotions and vacations… What if the conspicuousness of [this] experience of algorithmic cruelty indicates less an exceptional circumstance than the clarifying exposure of a more general failure, a more ubiquitous cruelty? … We all joke about the ridiculous substitutions performed by autocorrect functions, or the laughable recommendations that follow from the odd purchase of a book from Amazon or an outing from Groupon. We should joke, but don’t, when people treat a word cloud as an analysis of a speech or an essay. We don’t joke so much when a credit score substitutes for the judgment whether a citizen deserves the chance to become a homeowner or start a small business, or when a Big Data profile substitutes for the judgment whether a citizen should become a heat signature for a drone committing extrajudicial murder in all of our names. [An] experience of algorithmic cruelty [may be] extraordinary, but that does not mean it cannot also be a window onto an experience of algorithmic cruelty that is ordinary. The question whether we might still ‘opt out’ from the ordinary cruelty of algorithmic mediation is not a design question at all, but an urgent political one.

I have already written in the past about those who want to propose, as Bratton seems inclined to do in the present, that so-called Killer Robots are a threat that must be engaged by resisting or banning “them” in their alterity rather than by assigning moral and criminal responsibility to those who code, manufacture, fund, and deploy them. I wrote:

Well-meaning opponents of war atrocities and engines of war would do well to think how tech companies stand to benefit from military contracts for ‘smarter’ software and bleeding-edge gizmos when terrorized and technoscientifically illiterate majorities and public officials take SillyCon Valley’s warnings seriously about our ‘complacency’ in the face of truly autonomous weapons and artificial super-intelligence that do not exist. It is crucial that necessary regulation and even banning of dangerous ‘autonomous weapons’ proceeds in a way that does not abet the mis-attribution of agency, and hence accountability, to devices. Every ‘autonomous’ weapons system expresses and mediates decisions by responsible humans usually all too eager to disavow the blood on their hands. Every legitimate fear of ‘killer robots’ is best addressed by making their coders, designers, manufacturers, officials, and operators accountable for criminal and unethical tools and uses of tools… There simply is no such thing as a smart bomb. Every bomb is stupid. There is no such thing as an autonomous weapon. Every weapon is deployed. The only killer robots that actually exist are human beings waging and profiting from war.

“Arguably,” argues Bratton, “the Anthropocene itself is due less to technology run amok than to the humanist legacy that understands the world as having been given for our needs and created in our image. We hear this in the words of thought leaders who evangelize the superiority of a world where machines are subservient to the needs and wishes of humanity… This is the sentiment — this philosophy of technology exactly — that is the basic algorithm of the Anthropocenic predicament, and consenting to it would also foreclose adequate encounters with A.I.” The Anthropocene in this formulation names the emergence of environmental or planetary consciousness, an emergence sometimes coupled to the global circulation of the image of the fragility and interdependence of the whole earth as seen by humans from outer space. It is the recognition that the world in which we evolved to flourish might be impacted by our collective actions in ways that threaten us all. Notice, by the way, that multiculture and historical struggle are figured as just another “algorithm” here.

I do not agree that planetary catastrophe inevitably followed from the conception of the earth as a gift besetting us to sustain us, indeed this premise understood in terms of stewardship or commonwealth would go far in correcting and preventing such careless destruction in my opinion. It is the false and facile (indeed infantile) conception of a finite world somehow equal to infinite human desires that has landed us and keeps us delusive ignoramuses lodged in this genocidal and suicidal predicament. Certainly I agree with Bratton that it would be wrong to attribute the waste and pollution and depletion of our common resources by extractive-industrial-consumer societies indifferent to ecosystemic limits to “technology run amok.” The problem of so saying is not that to do so disrespects “technology” — as presumably in his view no longer treating machines as properly “subservient to the needs and wishes of humanity” would more wholesomely respect “technology,” whatever that is supposed to mean — since of course technology does not exist in this general or abstract way to be respected or disrespected.

The reality at hand is that humans are running amok in ways that are facilitated and mediated by certain technologies. What is demanded in this moment by our predicament is the clear-eyed assessment of the long-term costs, risks, and benefits of technoscientific interventions into finite ecosystems to the actual diversity of their stakeholders and the distribution of these costs, risks, and benefits in an equitable way. Quite a lot of unsustainable extractive and industrial production as well as mass consumption and waste would be rendered unprofitable and unappealing were its costs and risks widely recognized and equitably distributed. Such an understanding suggests that what is wanted is to insist on the culpability and situation of actually intelligent human actors, mediated and facilitated as they are in enormously complicated and demanding ways by technique and artifice. The last thing we need to do is invest technology-in-general or environmental-forces with alien intelligence or agency apart from ourselves.

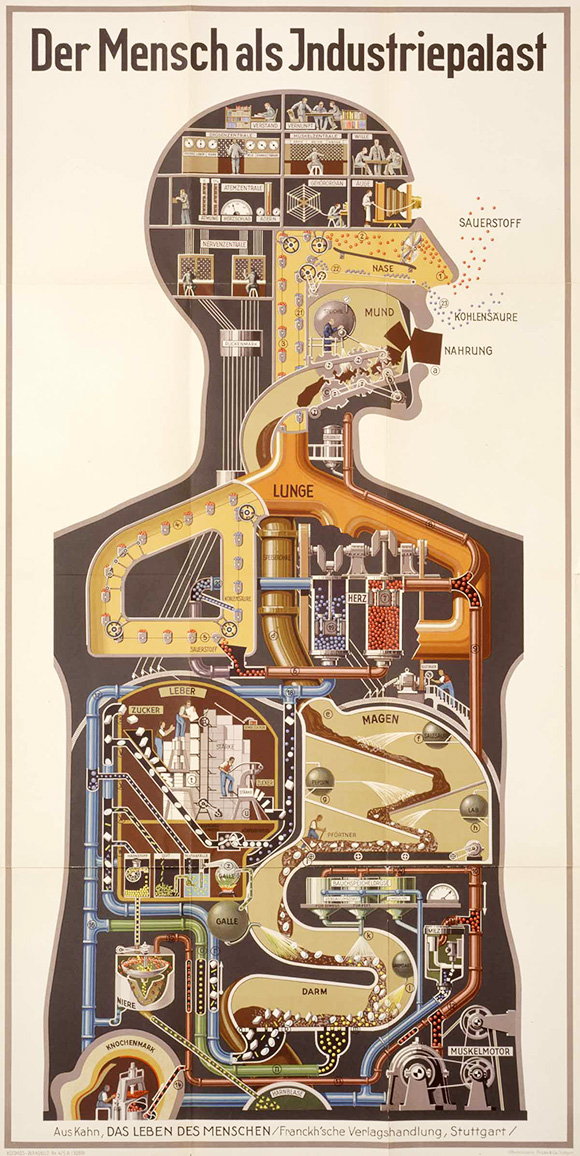

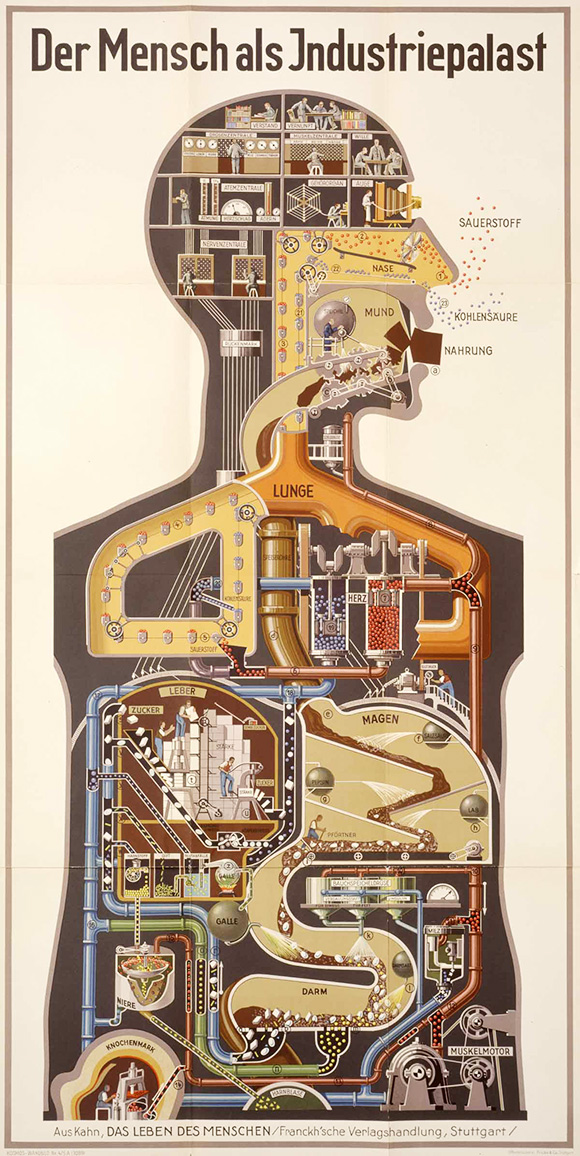

I am beginning to wonder whether the unavoidable and in many ways humbling recognition (unavoidable not least because of environmental catastrophe and global neoliberal precarization) that human agency emerges out of enormously complex and dynamic ensembles of interdependent/prostheticized actors gives rise to compensatory investments of some artifacts — especially digital networks, weapons of mass destruction, pandemic diseases, environmental forces — with the sovereign aspect of agency we no longer believe in for ourselves? It is strangely consoling to pretend our technologies in some fancied monolithic construal represent the rise of “alien intelligences,” even threatening ones, other than and apart from ourselves, not least because our own intelligence is an alienated one and prostheticized through and through. Consider the indispensability of pedagogical techniques of rote memorization, the metaphorization and narrativization of rhetoric in songs and stories and craft, the technique of the memory palace, the technologies of writing and reading, the articulation of metabolism and duration by timepieces, the shaping of both the body and its bearing by habit and by athletic training, the lifelong interplay of infrastructure and consciousness: all human intellect is already technique. All culture is prosthetic and all prostheses are culture.

Bratton wants to narrate as a kind of progressive enlightenment the mystification he recommends that would invest computation with alien intelligence and agency while at once divesting intelligent human actors, coders, funders, users of computation of responsibility for the violations and abuses of other humans enabled and mediated by that computation. This investment with intelligence and divestment of responsibility he likens to the Copernican Revolution in which humans sustained the momentary humiliation of realizing that they were not the center of the universe but received in exchange the eventual compensation of incredible powers of prediction and control. One might wonder whether the exchange of the faith that humanity was the apple of God’s eye for a new technoscientific faith in which we aspired toward godlike powers ourselves was really so much a humiliation as the exchange of one megalomania for another. But what I want to recall by way of conclusion instead is that the trope of a Copernican humiliation of the intelligent human subject is already quite a familiar one:

In his Introductory Lectures on Psychoanalysis Sigmund Freud notoriously proposed that

In the course of centuries the naive self-love of men has had to submit to two major blows at the hands of science. The first was when they learnt that our earth was not the center of the universe but only a tiny fragment of a cosmic system of scarcely imaginable vastness. This is associated in our minds with the name of Copernicus… The second blow fell when biological research destroyed man’s supposedly privileged place in creation and proved his descent from the animal kingdom and his ineradicable animal nature. This revaluation has been accomplished in our own days by Darwin… though not without the most violent contemporary opposition. But human megalomania will have suffered its third and most wounding blow from the psychological research of the present time which seeks to prove to the ego that it is not even master in its own house, but must content itself with scanty information of what is going on unconsciously in the mind.

However we may feel about psychoanalysis as a pseudo-scientific enterprise that did more therapeutic harm than good, Freud’s works considered instead as contributions to moral philosophy and cultural theory have few modern equals. The idea that human consciousness is split from the beginning as the very condition of its constitution, the creative if self-destructive result of an impulse of rational self-preservation beset by the overabundant irrationality of humanity and history, imposed a modesty incomparably more demanding than Bratton’s wan proposal in the same name. Indeed, to the extent that the irrational drives of the dynamic unconscious are often figured as a brute machinic automatism, one is tempted to suggest that Bratton’s modest proposal of alien artifactual intelligence is a fetishistic disavowal of the greater modesty demanded by the alienating recognition of the stratification of human intelligence by unconscious forces (and his moniker a symptomatic citation). What is striking about the language of psychoanalysis is the way it has been taken up to provide resources for imaginative empathy across the gulf of differences: whether in the extraordinary work of recent generations of feminist, queer, and postcolonial scholars re-orienting the project of the conspicuously sexist, heterosexist, cissexist, racist, imperialist, bourgeois thinker who was Freud to emancipatory ends, or in the stunning leaps in which Freud identified with neurotic others through psychoanalytic reading, going so far as to find in the paranoid system-building of the psychotic Dr. Schreber an exemplar of human science and civilization and a mirror in which he could see reflected both himself and psychoanalysis itself. Freud’s Copernican humiliation opened up new possibilities of responsiveness in difference out of which could be built urgently necessary responsibilities otherwise. 
I worry that Bratton’s Copernican modesty opens up new occasions for techno-fetishistic fables of history and disavowals of responsibility for its actual human protagonists.

_____

Dale Carrico is a member of the visiting faculty at the San Francisco Art Institute as well as a lecturer in the Department of Rhetoric at the University of California at Berkeley from which he received his PhD in 2005. His work focuses on the politics of science and technology, especially peer-to-peer formations and global development discourse and is informed by a commitment to democratic socialism (or social democracy, if that freaks you out less), environmental justice critique, and queer theory. He is a persistent critic of futurological discourses, especially on his Amor Mundi blog, on which an earlier version of this post first appeared.