I

Victor Frankenstein, the titular character and “Modern Prometheus” of Mary Shelley’s 1818 novel, drawing on his biochemical studies at the University of Ingolstadt, creates life by reanimating the dead. While the gothic elements of Shelley’s narrative ensure its place, or those of its twentieth-century film adaptations, in the pantheons of popular horror, it is also arguably the first instance of science fiction, used by its young author to interrogate the Prometheanism that animated the intellectual culture of her day.

Prometheus, the titan who steals fire from the Olympian gods for humankind and suffers imprisonment and torture at the hands of Zeus as a result, was an emblem for both socio-political emancipation and techno-scientific mastery during the European enlightenment. These two overlapping, yet distinct, models of progress are nonetheless confused, one with the other, then and now, with often disastrous results, as Shelley dramatizes over the course of her novel.

Frankenstein embarks on his experiment to demonstrate that “life and death” are merely “ideal bounds” that can be surpassed, to conquer death and “pour a torrent of light into our dark world.” Frankenstein’s motives are not entirely beneficent, as we can see in the lines that follow:

A new species would bless me as its creator and source; many happy and excellent natures would owe their being to me. No father could claim the gratitude of his child so completely as I should deserve their’s. Pursuing these reflections, I thought, that if I could bestow animation upon lifeless matter, I might in process of time (although I now found it impossible) renew life where death had apparently devoted the body to corruption. (Shelley 1818, 80-81)

The will to Promethean mastery, over nature, merges here with a will to power over other humanoid, if not entirely human, beings. Frankenstein abandons his creation, with disastrous results for the creature, his family, and himself. Over the course of the two centuries since its publication, “The Modern Prometheus” has been read, too simply, as a cautionary tale regarding the pitfalls of techno-scientific hubris, invoked in regard to the atomic bomb or genetic engineering, for example, which it is in part.

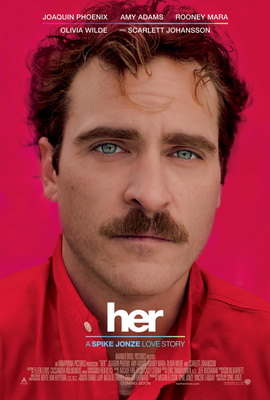

If we survey the history of the twentieth century, this caution is understandable. Even in the twenty-first century, a new Frankensteinism has taken hold among the digital overlords of Silicon Valley. Techno-capitalists from Elon Musk to Peter Thiel to Ray Kurzweil and their transhumanist fellow travelers now literally pursue immortality and divinity, striving to build indestructible bodies or merge with their supercomputers, preferably on their own high-tech floating island, or perhaps off-world, as the earth and its masses burn in a climate catastrophe entirely due to the depredations of industrial capitalism and its growth imperative.

This last point is significant, as it represents the most recent example of the way progress-as-emancipation—social and political freedom and equality for all, including non-human nature—is distinct from and often at odds with progress as technological development: a distinction that many of today’s techno-utopians embrace under the rubric of a “dark enlightenment,” in a seemingly deliberate echo of Victor Frankenstein. Mary Shelley’s great theme is the substantive distinction of these two models of progress and enlightenment, which are intertwined for historical and ideological reasons: a tragic marriage. It is no coincidence that she chose to explore this problem in a tale of tortured familial relationships, which includes the fantasy of male birth alongside immortality. It was both a personal and family matter for her, as the daughter of radical enlightenment intellectuals Mary Wollstonecraft and William Godwin. While her mother died a few days after Mary’s birth, she was raised according to strict radical enlightenment principles, by her father, who in his 1793 Enquiry Concerning Political Justice and its Influence on Morals and Happiness argues against the state, private property, and marriage; a text in which Godwin also predicts a future when human beings, perfected through the force of reason, would achieve a sexless, sleepless, god-like immortality, in what is a 1790s-era version of the technological Singularity. Godwin’s daughter drew on this vision in crafting her own Victor Frankenstein.

While Godwin would later modify these early proto-futurist views (in the wake of his wife’s death and a debate with the Reverend Thomas Malthus), even as he maintained his radical political commitments, his early work demonstrates the extent to which radical enlightenment thinking was entwined, from the very start, with what is, in today’s parlance, “dark enlightenment,” ranging from accelerationism to singularitarianism and ecomodernism.[1] His subsequent revision of his earlier views offers us an early example of how we might separate an emancipatory social and political program from those Promethean dreams of technological mastery used by capitalist and state socialist ideologues to justify development at any cost. In early Godwinism we find one prototype for today’s Promethean techno-utopianism. His subsequent debate with Thomas Malthus and concomitant retreat from his own earlier futurist Prometheanism illuminates how we might combine radical, or even utopian, political commitments with an awareness of biophysical limits in our own moment of ecological collapse.

Godwin defines the “justice” that animates his 1793 Enquiry Concerning Political Justice as that “which benefits the whole, because individuals are parts of the whole. Therefore to do it is just, and to forbear it is unjust. If justice have any meaning, it is just that I should contribute every thing in my power to the benefit of the whole” (Godwin 1793, 52). Godwin illustrates his definition with a hypothetical scenario that provoked accusations of heartlessness among both conservative detractors and radical allies at the time. Godwin asks us to imagine a fire striking the palace of François Fénelon, the progressive archbishop of Cambray, author of an influential attack on absolute monarchy:

In the same manner the illustrious archbishop of Cambray was of more worth than his chambermaid, and there are few of us that would hesitate to pronounce, if his palace were in flames, and the life of only one of them could be preserved, which of the two ought to be preferred. But there is another ground of preference, beside the private consideration of one of them being farther removed from the state of a mere animal. We are not connected with one or two percipient beings, but with a society, a nation, and in some sense with the whole family of mankind. Of consequence that life ought to be preferred which will be most conducive to the general good. In saving the life of Fénelon, suppose at the moment when he was conceiving the project of his immortal Telemachus, I should be promoting the benefit of thousands, who have been cured by the perusal of it of some error, vice and consequent unhappiness. Nay, my benefit would extend farther than this, for every individual thus cured has become a better member of society, and has contributed in his turn to the happiness, the information and improvement of others. (Godwin 1793, 55)

This passage illustrates the consequentialist perfectibilism that distinguished the philosopher’s theories from those of his better-known contemporaries, such as Thomas Paine, with his theory of natural right and social contract, or even utilitarian Jeremy Bentham, to whom Godwin is sometimes compared. In the words of Mark Philp, “only by improving people’s understanding can they become more fully virtuous, and only as they become more fully virtuous will the highest and greatest pleasures be realized in society” (Philp 1986, 84). In other words, the unfortunate chambermaid must be sacrificed if that is what it takes to save the philosophe whose written output will benefit multitudes by sharpening their rational capacities, congruent with the triumph of reason, virtue, and human emancipation.

Godwin goes on to make this line of reasoning clear:

Supposing I had been myself the chambermaid, I ought to have chosen to die, rather than that Fénelon should have died. The life of Fénelon was really preferable to that of the chambermaid. But understanding is the faculty that perceives the truth of this and similar propositions; and justice is the principle that regulates my conduct accordingly. It would have been just in the chambermaid to have preferred the archbishop to herself. To have done otherwise would have been a breach of justice. Supposing the chambermaid had been my wife, my mother or my benefactor. This would not alter the truth of the proposition. The life of Fénelon would still be more valuable than that of the chambermaid; and justice, pure, unadulterated justice, would still have preferred that which was most valuable. Justice would have taught me to save the life of Fénelon at the expence of the other. What magic is there in the pronoun “my,” to overturn the decisions of everlasting truth? (Godwin 1793, 55)

Godwin amends the puritan rigor of these positions in subsequent editions of his work, as he came to recognize the value of affective bonds and personal attachments. But here in the first edition of Political Justice we see a pristine expression of his rationalist radicalism, for which the good of the whole necessitates the sacrifice of a chambermaid, a mother, and one’s own self to Reason, which Godwin equates with the greatest good.

The early Godwin here exemplifies a central antinomy of the European enlightenment, as he strives to yoke an inadvertently inhuman plan for human perfection and mastery to an emancipatory vision of egalitarian social relations. Godwin pushes the Enlightenment-era deification of ratiocination to a visionary extreme in presenting very real inequities as so many cases of benighted judgment waiting for a personified, yet curiously disembodied, Reason’s correction in the fullness of time and entirely by way of debate. It was this aspect of Godwin’s project that inspired John Thelwall, the radical writer and public speaker, to declare that while Godwin recommends “the most extensive plan of freedom and innovation ever discussed by a writer in English,” he “reprobate[s] every measure from which even the most moderate reform can be rationally expected” (Thelwall 2008, 122). E.P. Thompson would later echo this verdict in his Poverty of Theory, when he compared the vogue for structuralist (or Althusserian) Marxism among certain segments of the 1970s-era New Left to Godwinism, which he described as another “moment of intellectual extremism, divorced from correlative action or actual social commitment” (Thompson 1978, 244).

Godwin blends a necessitarian theory of environmental influence, a belief in the perfectibility of the human race, a perfectionist version of the utilitarian calculus, and a quasi-idealist model of objective reason into an incongruous and extravagantly speculative rationalist metaphysics. The Godwinian system, in its first iteration at least, resembles Kantian and post-Kantian German idealism as much as it does the systems of Locke, Hume, and Helvetius, Godwin’s acknowledged sources. So, according to Godwin’s syllogistic precepts, it is only through the exercise of private judgment and a process of rational debate (“the clash of mind with mind”) that Truth will emerge, and with Truth, Political Justice; here is a model of enlightenment that resonates with Kant’s roughly contemporaneous ideal-type of progress and Jürgen Habermas’s twentieth-century reconstruction of that ideal in the form of a “liberal-bourgeois public sphere.” It is for this reason, and in spite of his conflicted sympathies with French revolutionaries and British radicals alike, that the philosopher rejects both violent revolution and the kind of mass political action exemplified by Thelwall and the London Corresponding Society, hence Thelwall’s and Thompson’s damning judgments. Rational persuasion is the only feasible way of effecting the wholesale revolutionary transformation of “things as they are” for Godwin.

But this precise reconstruction of Godwin’s philosophical and political system does not capture the striking novelty of Godwin’s project. In the example above, we find a supplementary argument of sorts running underneath the consequentialist perfectibilism. Although we can certainly read in Godwin’s disparagement and hypothetical sacrifice of both a chambermaid and his own mother a historically typical, if unconscious, example of the class prejudice and misogyny the radical philosopher otherwise attacks at length in this same treatise, I would instead call attention to the implicit metaphor of embodiment and natality that unites maid, mother, and Godwin’s own unperfected self. The chambermaid is one step closer to the “mere animal” from which Fénelon, or his significantly disembodied work, offers an escape. If the chambermaid were rational in the Godwinian sense, she would readily offer herself as sacrifice to Fénelon and the Reason that finds a fitting emblem in the flames that consume our hypothetical building. While Godwin underlines the disinterested character of this choice in next substituting himself for the chambermaid, his willingness to hypothetically sacrifice his mother points to his rigid rejection of personal attachments and emotional ties. Godwin would substantially modify this viewpoint a few years later in the wake of his relationship with pioneering feminist Mary Wollstonecraft.

The figure of the mother—whose embodied life Godwin would consign to the fire for the sake of Fénelon’s future intellectual output and its refining effects on humanity—is an overdetermined symbol that unites affective ties with the irrational fact of our bodily and sexual life: all of which must and will be mastered through a Promethean process of ratiocination indistinguishable from justice and reason. If one function of metaphor, according to Hans Blumenberg, is to provide the seedbed for conceptual thought, Godwin translates these subtexts into an explicit vision of a totally rational and rationalized future in the final, speculative, chapter of Political Justice.[2] It is in this chapter, as we shall see below, that Godwin responds to those critics who argued that population growth and material scarcity made perfectibilism impossible with a vision of humans made superhuman through reason.

Here is the characteristically Godwinian combination of “striking insight” and “complete wackiness,” which emerges from the “science fictional quality of his imagination” in the words of Jenny Davidson.[3] Godwin moves from a prescient critique of oppressive human institutional arrangements, motivated by the radical desire for a substantively just and free form of social organization under which all human beings can realize their capacities, to a rationalist metaphysics that enshrines Reason as a theological entity that realizes itself through a teleological human history. Reason reaches its apotheosis at that point when human beings become superhuman, transcending contingent and creaturely qualities, such as sexual desire, physical reproduction, and death, eviscerated like so many animal bodies thrown into a great fire.

We can see in Godwin’s early rationalist radicalism a significant antinomy. Godwin oscillates between a radical enlightenment critique that uses ratiocination to expose unjust institutional arrangements—from marriage to private property and the state—and a positive, even theological, version of Reason, for which creaturely limitations and human needs are not only secondary considerations, but primary obstacles to be surpassed on the way to a rationalist super-humanity that resembles nothing so much as a supercomputer, avant la lettre.

Many critics of the European Enlightenment, from an older Godwin and his younger romantic contemporaries through twentieth-century feminist, post-colonial, and ecological critics, have underlined the connection between these Promethean metaphysics, ostensibly in the service of human liberation, and various projects of domination. Western Marxists, like Max Horkheimer and Theodor Adorno (1947), overlap with later feminist critics of the scientific revolution, such as Carolyn Merchant (1980), in naming instrumental rationality as the problem. As opposed to an ends-oriented version of reason (the ends being emancipation or human flourishing), rationalism as technical means for dominating the natural world, or managing populations, or disciplining labor, became the dominant model of rationality during and after the European enlightenment in keeping with the ideological requirements of a nascent capitalism and colonialism. But in the case of the early Godwin and other Prometheans, we can see a substantive version of reason, reified as an end-in-itself, which overlaps with the critical philosophy of Hegel, the philosophical foundation of Marxism and the Frankfurt School variant on display in the work of Adorno and Horkheimer.[4] The problem with Prometheanism is that its proponents’ ideal-type of technological rationality is not instrumental enough: rather than a reason or technology subordinate to human flourishing and collective human agency, the proponents of Prometheus subordinate collective human (and creaturely) ends to a vision of reason indistinguishable from a fantasy of an autonomous technology with its own imperatives.

Langdon Winner, in analyzing autonomous technology as idea and ideology in twentieth-century industrial capitalist (and state socialist) societies, underlines this reversal of means and ends or what he calls “reverse adaptation”: “The adjustment of human ends to match the character of available means. We have already seen arguments to the effect that persons adapt themselves to the order, discipline, and pace of the organizations in which they work. But even more significant is the state of affairs in which people come to accept the norms and standards of technical processes as central to their lives as a whole” (Winner 1977, 229). Winner’s critique of “rationality in technological thinking” is made even more striking when we consider that the early Godwin’s Promethean force of reason, as evinced by the final chapter of the 1793 Political Justice (in contradistinction to the ethical and political rationalism that is also present in the text), anticipates twentieth- and twenty-first-century techno-utopianism. For Winner, “if one takes rationality to mean the accommodation of means to ends, then surely reverse-adapted systems represent the most flagrant violation of rationality” (Winner 1977, 229).

This version of rationality, still inchoate in the eighteenth-century speculations of Godwin, takes mega-technological systems as models, rather than tools, for human beings, as Günther Anders argues—against those who depict anti-Prometheans as bio-conservative defenders of things as they are just because they are that way. The problem with Prometheanism does not reside in its adherents’ endorsement of technological possibilities as such so much as their embrace of the “machine as measure” of individual and collective human development (Anders 2016). Anders converges with Adorno and Horkheimer, his Marxist contemporaries, for whom this “machine” is a mystified metonym for irrational capitalist imperatives.

Rationalist humanism becomes technological inhumanism under the sign of Prometheus, which, according to present-day “accelerationism” enthusiast Ray Brassier, must recognize “the disturbing consequences of our technological ingenuity” and extol “the fact that progress is savage and violent” (Brassier 2013). Brassier, operating from a radically different, avowedly nihilist, set of presuppositions than William Godwin, nonetheless recalls the 1793 Political Justice in once again defining rationalism as a reinvigorated Promethean “project of re-engineering ourselves and our world on a more rational basis.” Accelerationists strive to revive both rationalist radicalism (with the omniscient algorithm standing in for the perfectibilists’ reason) and the Promethean imperative to reengineer society and the natural world, because or in spite of the ongoing global climate change catastrophe. Rather than treating capitalism as the great driver of an ecologically catastrophic growth, self-described “left” accelerationists Alex Williams and Nick Srnicek argue that it must be dismantled because it “cannot be identified as the agent of true acceleration,” or #accelerate (Williams and Srnicek 2013, 486-7): a shorthand for their attempt to reboot a version of progress that arguably finds its first apotheosis in the 1790s. Brassier’s defense of Prometheanism takes the form of an extended reply to various critics, whose emphasis on limits and equilibrium, the given and the made, he rejects as in thrall to religious, and specifically Christian, notions. Brassier, who outlines his rationalism as systematic method or technique without presupposition or limits (along the lines of “God is dead, anything is possible”), seems unaware of actual material limitations and the theological, specifically Gnostic, origins of a very old human deification fantasy, the Enlightenment-era secularization of which was arguably first recognized by Godwin’s daughter in her Frankenstein (Shelley 1818).

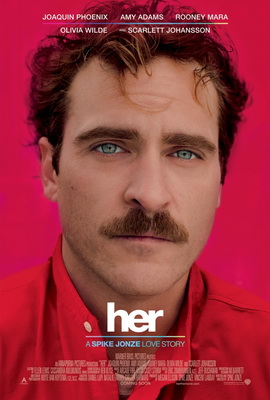

The Godwin of 1793 in this way also and more dramatically looks forward to our own transhumanist devotees of the coming technological singularity, who claim that human beings will soon merge with immensely powerful and intelligent supercomputers, becoming something else entirely in the process, hence “transhumanism.” According to prominent Silicon Valley “singularitarian” Ray Kurzweil, “The Singularity will allow us to transcend these limitations of our biological bodies and brains. We will gain power over our fates. Our mortality will be in our own hands. We will be able to live as long as we want (a subtly different statement from saying we will live forever)” (Kurzweil 2006, 25).

Kurzweil explicitly frames this transformation as the inevitable culmination of a mechanically teleological movement; and, for him as for many futurists and their eighteenth-century perfectibilist forerunners, human perfection necessitates the supersession of the human. Kurzweil illustrates the paradoxical character of a Promethean futurism that, in seeking both human perfection and mastery, seeks to dispense with the human altogether: “The Singularity will represent the culmination of the merger of our biological thinking and existence with our technology, resulting in a world that is still human but that transcends our biological roots. There will be no distinction, post-Singularity, between human and machine or between physical and virtual reality. If you wonder what will remain unequivocally human in such a world, it’s simply this quality: ours is the species that inherently seeks to extend its physical and mental reach beyond current limitations” (25).

Even more than the accelerationists, Kurzweil’s Singularitarianism illustrates the “hubristic humility” that defines twentieth- and twenty-first-century Prometheanism, according to Anders. Writing in the wake of the atomic bomb, the cybernetic revolution, and the mass-produced affluence exemplified by the post-war United States, Anders recognized how certain self-described rationalist techno-utopians combined a hubristic faith in technological achievement with a “Promethean shame” before these same technological creations. This shame arises from the perceived gap between human beings and their technological products; how, unlike our machines, we are “pre-given,” saddled with contingent bodies we neither choose nor design, bodies that are fragile, needy, and mortal. The mechanical reproducibility of the technological system or industrial artifact represents a virtual immortality that necessarily eludes unique and perishable human beings, according to Anders. Here Anders seemingly develops the earlier work of Walter Benjamin, his cousin, on aura and mechanical reproduction, but in a very different direction, as Anders writes: “in contrast to the light bulb or the vinyl record, none of us has the opportunity to outlive himself or herself in a new copy. In short: we must continue to live our lifetimes in obsolete singularity and uniqueness. For those who recognize the machine-world as exemplary, this is a flaw and as such a reason for shame.”[5]

Although we can situate the work of Godwin at the intersection of various eighteenth- and nineteenth-century discourses, including perfectibilist rationalism, civic republicanism, and Sandemanian Calvinism, on the one hand, or anarchism and romanticism, on the other, I will argue here that in juxtaposing Godwinism with present-day analogs like the transhumanism and accelerationism briefly described above, we can see the extent to which older (late eighteenth- and early nineteenth-century) utopian forms are returning, lending some credence to Alain Badiou’s claim that

We are much closer to the 19th century than to the last century. In the dialectical division of history we have, sometimes, to move ahead of time. Just like maybe around 1840, we are now confronted with an absolutely cynical capitalism, more and more inspired by the ideas that only work backwards: poor are justly poor, the Africans are underdeveloped, and that the future with no discernable limit belongs to the civilized bourgeoisie of the Western world. (Badiou 2008)

We can also see in recent conflicts between accelerationists and certain partisans of radical ecology the return of another seeming antinomy, one which pits cornucopian futurists against Malthusians (or at least those who emphasize the material limits to growth and how human beings might reconcile ourselves to those limits), that has its origin point in the Reverend Thomas Malthus’s anonymously published An Essay on the Principle of Population, as it affects the Future Improvement of Society with remarks on the Speculations of Mr. Godwin, M. Condorcet, and Other Writers (1798). Malthus’s demographic response to Godwinism led in turn to a long-running debate and the rise of an ostensibly empirical political economy that took material scarcity as its starting point. Yet, if we examine Malthus’s initial response, alongside the Political Justice of 1793, we can observe several shared assumptions and lines of continuity that unite these seemingly opposed perspectives, as each of these thinkers delineates a recognizably bio-political project, for human improvement and population management, in left and right variants. Each of these variants obscures the social determinants of revolutionary movements and technological progress. Finally, it was as much Godwin’s debate with Malthus as the philosopher’s tumultuous and tragic relationship with Mary Wollstonecraft that precipitated a shift in his perspective regarding the value of emotional bonds, personal connections, and material limits: seemingly disparate concerns linked in Godwin’s imagination through the sign of the body.
The body also functions as metonym for that same natural world, the limits of which Malthus brandished in order to discredit the utopian aspirations that drove the revolutionary upheavals of the 1790s; Godwin later sought to reconcile his utopianism with these limits.[6] This intellectual reconciliation (which was very much in line with the English romantics’ own version of a more benign natural world threatened by incipient industrialism, as opposed to Malthus’s brutally utilitarian nature) was a response to both Malthus and Godwin’s own early Prometheanism, best exemplified in the final section of the 1793 Political Justice, to which we will turn below.

Two generations of Romantics, from Wordsworth and Coleridge through De Quincey, Hazlitt, and Shelley, sought to counter Malthus’s version of the natural world as resource stock and constraint with a holistic and dynamic model of nature, under which natural limits and possibilities are not inconsistent with human aspirations and utopian hopes. Malthus offered the Romantics “a malign muse,” in the words of his biographer Robert Mayhew, who writes of two exemplary Romantic figures from this period: “if nature is made of antipathies, Blake and Hegel in their different ways suggest that such binaries can be productive of a dialectic advance in our reasoning” as “we look for ways to respect nature and to use it with a population of 7 billion” (Mayhew 2014, 86).

One irony of intellectual and political history is how often our new Prometheans (transhumanists, singularitarians, accelerationists, and others) lump both narrowly Malthusian and more expansive “Romantic” ecologies under the rubric of Malthusianism, which is nowadays more slur than accurate description of Malthus’s project. Malthus wielded the threat of natural scarcity or “the Principle of Population” as an ideological tool against reform, revolution, or “the Future Improvement of Society,” as evinced in the very title of his long essay. In the words of Kerryn Higgs, to follow Malthus involves “several key elements” beyond a concern with overpopulation, such as “a resistance to notions of social improvement and social welfare, punitive policies for the poor, a tendency to blame the poor for their own plight, and recourse to speculative theorizing in the service of an essentially political argument” (Higgs 2014, 43). It is among eugenicists and social Darwinists, but also among today’s cornucopian detractors of Malthusianism, that we find Malthus’s heirs, if we attend to his and now their instrumental view of the natural world as a factor in capitalist economic calculation (as exemplified by the rhetoric of “ecosystem services” and “decoupling”) in addition to a shared faith in material growth, to which Malthus was not opposed. Meanwhile, self-declared Malthusians, like Paul and Anne Ehrlich, in their misplaced focus on overpopulation, often in the developing world, conveniently avoid any discussion of consumption in the developed world, let alone the unsustainable growth imperative built into capitalism itself. In fact, for Malthus and his epigones, necessity (growth outstripping available resources) functions as a spur for technological innovation, mirroring, in negative form, the teleological trajectory of the early Godwin: a telling convergence I will explore at length in the latter part of this essay.

“Malthusianism” is a shorthand used by orthodox economists and apologists for capitalist growth to dismiss ecological concerns. Marxists and other radicals (heirs to Godwin’s project, in ways good and bad, despite their protestations of materialism) too often share this investment in growth and techno-science as an end in itself. While John Bellamy Foster and others have made a persuasive case for Marx’s ecology (to be found in his work on nineteenth-century soil exhaustion, inspired by Liebig, and the town/country rift under capitalism), we can also find a broadly Promethean rejection of anything resembling a discourse of natural limits within various orthodox Marxisms, beginning in the later nineteenth century. Yet to recognize both the possibilities and limits of our situation, which must include the biophysical conditions of possibility for capitalist accumulation and any program that aims to supplant it, is, for me, the foundation for any radical and materialist approach to the world and politics, against Malthus and the young, futurist Godwin, to whom we now turn.

II

Godwin translates this metaphorical substrate of his Fénelon thought experiment into an explicitly conceptual and argumentative form. He pushes the logic of eighteenth-century perfectibilism to a spectacular, speculative, and science-fictional extreme in Chapter 12 of Political Justice’s final volume on “property.” It is in this chapter that the philosopher outlines a future utopia on the far side of rational perfection. Beginning with the remark, attributed to Benjamin Franklin by Richard Price, that “mind will one day become omnipotent over matter,” Godwin offers us a series of methodical speculations as to how this might literally come to pass. He begins with the individual mind’s power to either exacerbate or alleviate illness or the effects of age, in order to illustrate his central contention: that we can overcome apparently hard and fast physical limits and subject ostensibly involuntary physiological processes to the dictates of our rational will. It is on this basis that Godwin concludes: “if we have in any respect a little power now, and if mind be essentially progressive…that power may…and inevitably will, extend beyond any bounds we are able to ascribe to it” (Godwin 1793, 455).

Godwin marries magical voluntarism on the ontogenetic level to the teleological arc of Reason on the phylogenetic level, all of which culminates in perhaps the first—Godwinian—articulation of the singularity: “The men who therefore exist when the earth shall refuse itself to a more extended population will cease to propagate, for they will no longer have any motive, either of error or duty, to induce them. In addition to this they will perhaps be immortal. The whole will be a people of men, and not of children. Generation will not succeed generation, nor truth have in a certain degree to recommence at the end of every thirty years. There will be no war, no crimes, no administration of justice as it is called, and no government” (Godwin 1793, 458).

James Preu (1959) long ago established Godwin’s peculiar intellectual debt to Jonathan Swift, and we can discern some resemblance between Godwin’s future race of hyper-rational, sexless immortals and the Houyhnhnms; as with Godwin’s other misprisions of Swift, the differences are as telling as the similarities. Godwin transforms Swift’s ambiguous, arguably dystopian and misanthropic, depiction of equine ultra-rationalists, and their animalistic Yahoo humanoid stock, into an unequivocally utopian sketch of future possibility. For Godwin, it is our Yahoo-like “animal nature” that must be subdued or even exterminated, as Gulliver’s Houyhnhnm master at one point suggests in a coolly calculating way that begs comparison to Swift’s “Modest Proposal,” even as both texts look forward to the utilitarian discourse of population control that finds its apotheosis in Malthus’s 1798 response to Godwin. Godwin would later embrace some version of heritable characteristics, or at least innate human inclinations, but despite his revisions of his views through subsequent editions of Political Justice and beyond, he is still very much the Helvetian environmentalist in the 1793 disquisition. He was therefore free of, or even at odds with, a proto-eugenic eighteenth-century discourse of breeding—after Jenny Davidson’s (2008) formulation—that overlapped with other variants of perfectibilism.

But as Davidson and others note, Godwin shares with his antagonist Malthus a Swiftian aversion to sex, which we can also see in the Godwinian critique of marriage and the family. This critique begins with a still-radical indictment of marriage as a proprietary relationship under which men exercise “the most odious of monopolies over women” (Godwin 1793, 447). Godwin predicts that marriage, and the patriarchal family it safeguards, will be abolished alongside other modes of unequal property. But, rather than inaugurating a regime of free love and license, as conservative critics of Godwinism contended at the time, the philosopher predicts that this “state of equal property would destroy the relish for luxury, would decrease our inordinate appetites of every kind, and lead us universally to prefer the pleasures of intelligence to the pleasures of the sense” (Godwin 1793, 447). Rather than simply ascribing this sentiment to a residual Calvinism on Godwin’s part, we should recognize that this programmatic elimination of sexual desire is of a piece with “killing the Yahoo,” and with consigning the maid-servant’s, his mother’s, and his own body to the fires for Fénelon and a perfectly rational future state, i.e., the biopolitical rationalization of human bodies for Promethean reason and Promethean shame. The early Godwin here again suggests our own transhumanist devotees, who embrace the sexual possibilities supposedly afforded by AI even as they manifest a “complete disgust with actual human bodies,” exemplifying Anders’s Promethean shame according to Michael Hauskeller (2014). From the messy body to virtual bodies, from the uncertainties and coercions of cooperation to self-sex, finally from sex to orgasmic cognition, transhumanists—in an echo of the young Godwin, who predicted sexual intercourse would give way to rational intercourse with the triumph of Reason—want to “make the pleasures of mind as intense and orgiastic as … certain bodily pleasures as they hope for a new and improved rational intercourse with a new and improved, virtual body, in the future” (Hauskeller 2014).

In this speculative coda to his visionary political treatise, Godwin’s predictive sketch of human rationalization as transformation, from Yahoo to Houyhnhnm and/or post-human, represents a disciplinary program in Michel Foucault’s sense: “a technique” that “centers on the body, produces individualizing effects, and manipulates the body as a source of forces that have to be rendered both useful and docile” (Foucault 2003, 249).[7] We can trace the intersection between perfectibilist, even transhumanist, dreams and disciplinary program in Godwin’s comments regarding the elimination of sleep, an apparent prerequisite for overcoming death, which he describes as “one of the most conspicuous infirmities of the human frame,” specifically “because it is…not a suspension of thought, but an irregular and distempered state of the faculty” (456).

Dreams, or the unregulated and irrational affective processes they embody, provoke a panicked response on the part of Godwin at this point in the text. Godwin’s response accords with the consistent rejection of sensibility and sentimental attachments of all kinds—seen throughout the 1793 PJ—from romantic love to familial bonds. We can find in Godwin’s account of sleep and his plan for its elimination through an exertion of rational will and attention—something like an internal monitor in the mold of a Benthamite watchman presiding over a 24/7 panoptic mind—the exertion of an internalized disciplinary power indistinguishable from our new, wholly rational and rationalized, subject’s private judgment operating in a system without external authority, government, or disciplinary power. And it is no coincidence that Godwin’s proposed subjugation of sleep immediately follows a curiously contemporary passage: “If we can have three hundred and twenty successive ideas in a second of time, why should it be supposed that we should not hereafter arrive at the skill of carrying on a great number of contemporaneous processes without disorder” (456).

It should be noted again here that Godwin’s futurist idyll, which includes sexless immortals engaged in purely rational intercourse, specifically responds to earlier eighteenth-century arguments regarding human population and the resource constraints that limit population growth and, by extension, the wide abundance promised in various perfectibilist plans for the future. This new focus on demography and the management of populations during the latter half of the eighteenth century in the Euro-American world is a second technology of power, for Foucault, that “centers not upon the body but upon life: a technology that brings together the mass effects characteristic of a population,” in order “to establish a sort of homeostasis, not by training individuals, but by achieving an overall equilibrium that protects the security of the whole from internal dangers” (Foucault 2003, 249). This is the biopolitical mode of governance—the regulation of the masses’ bio-social processes—that characterizes the modern epoch for Foucault and his followers.

Yet, while Foucault admits that both technologies—disciplinary and biopolitical—are “technologies of the body,” he nonetheless counterpoises the “individualizing” to the demographic technique. But, as we can see in the 1793 PJ, in which Godwin proffers a largely disciplinary program as solution to the original bio-political problem—a solution that would inspire Thomas Malthus’s classic formulation of the population problem a few years later, as we shall explore below—these two technologies were intertwined from the start. The subsequent history of futurism in the west marries various disciplinary programs, powered by Promethean shame and its fantasies of becoming “man-machine,” to narrowly bio-political campaigns. These campaigns range from the exterminationist eugenicism of the twentieth-century interwar period to more recent techno-survivalist responses to the ecological crisis on the part of Silicon Valley’s Singularitarian elites, some of whom look forward to immortality on Mars while the Earth and its masses burn.[8]

Godwin further highlights these futurist hopes in the second revision of Political Justice (1798), in which he underlines the central role of mechanical invention—in keeping with the general principle enshrined in the first volume of the treatise under the title, “Human Inventions Capable of Perpetual Improvement”—making the technological prostheses implicit in these early speculations explicit. In predicting an ever-accelerating multiplication of cognitive processes—assuming these processes are delinked from disorder, human or Yahoo—Godwin anticipates both the discourse of cybernetics and its more recent accelerationist successors, for whom the dream of perfectibility—and Godwin’s sexless, sleepless rationalist immortals—can only be achieved through AI and the machinic supersession of the human Yahoo.

In fact, our new futurists frequently invoke the methodologically dubious Moore’s Law in defense of their claims for acceleration and its inevitability. Moore’s Law—named after Intel co-founder Gordon Moore, who, in 1965, predicted that the number of transistors in an integrated circuit, and with it processing power, would double at regular intervals, every two years or so in the now-standard formulation—revives Godwin’s prophecy in a cybernetic register. It also suggests Thomas Malthus’s “iron” law of population. Malthus argued that “Population, when unchecked, increases in a geometrical ratio,” while “subsistence”—by which he denotes agricultural yield—increases only in arithmetical ratio. Malthus rendered this dubious “law” as a mathematical formula, thereby lending it an air of indisputability, although he makes his motivations clear when he writes, explicitly in response to Godwin’s speculations in the last chapter of the 1793 Political Justice, that his law “is decisive against the possible existence of a society, all of the members of which should live in ease, happiness, and comparative leisure; and feel no anxiety about providing the means of subsistence for themselves and families” (Malthus 1798, 16-17; see also Engels 1845).

Godwinism was for Malthus a synecdoche for both 1790s radicalism and radical egalitarianism generally, while the first Essay on Population is arguably the late, “proto-scientific,” entry in the paper war between English radicals and counterrevolutionary antijacobins—initiated by Edmund Burke’s Reflections on the Revolution in France (1790) and to which Godwin’s treatise was one among many responses—that defined literary and political debate in the wake of the French Revolution. Rather than simply arguing for biophysical limits, Malthus reveals his ideological hand in his discussion of the poor and the Poor Laws—the parish-based system of charity established in late medieval England to alleviate extreme distress among the poorest classes—against which he railed in the several editions of the Essay. Whereas in the past, population was maintained through “positive” checks, such as pestilence, famine, or warfare, for Malthus, the growth of civilization introduced “preventive” checks, including chastity, restraint within marriage, or even the conscious decision to delay or forego marriage and reproduction due to the “foresight of the difficulties attending the rearing of a family,” often prompted by “the actual distresses of some of the lower classes, by which they are disabled from giving the proper food and attention to their children” (35).

Parson Malthus largely ascribes this decidedly Christian and specifically Protestant capacity for “rational” self-restraint to his own industrious middle class; and, insofar as the peasantry possessed this preventive urge, alongside a “spirit of independence,” it was undermined by the eighteenth-century British Poor Laws. Malthus provides the template for what are now standard-issue conservative attacks on social provision in his successful attacks on the Poor Laws, which in providing a safety net eradicated restraint among the poor, leading them to marry, reproduce, and “increase reproduction without increasing the food for its support.” Malthus invokes the same absolute limit in his naturalistic rejection of Godwin’s (and others’) egalitarian radicalism, foreclosing any examination of the production and distribution of surplus and scarcity in a class society. Even more than this, Malthus uses his natural laws to rationalize all of those institutional arrangements under threat during the French Revolutionary period, from the existing division of property to traditional marriage arrangements, but in an ostensibly objective manner that distinguished his approach from Burke’s earlier encomia to a dying age of chivalry. It is arguably for this reason that the idea of natural limits, in general, is a suspect one among subsequent left-wing formations, for good and ill.

III

Malthus, who anonymously published his Essay on Population in 1798, proclaims at the outset that his argument is “conclusive against the perfectibility of the mass of mankind” (Malthus 1798, 95). And if there were any doubt as to the identity of Malthus’s target we need only look to the work’s subtitle; Malthus also explains in the book’s first sentence that “the following essay owes its origin to a conversation with a friend on the subject of Mr. Godwin’s essay on avarice and profusion, in his Enquirer.” The friend was Thomas’s father, Daniel Malthus, an admiring acquaintance of Godwin’s who nonetheless encouraged (and subsidized) his son’s writing on this topic. The parson dedicates six chapters (chapters 10-15) to a refutation of Godwinism, often larded with mockery of Godwin, against whose speculative rationalism Malthus counterpoises his own supposedly empirical method; the same method that allowed him to discover that “lower classes of people” should never be “sufficiently free from want and labour, to attain any high degree of intellectual improvement” (95). And although Malthus explicitly names The Enquirer—the 1797 collection of essays in which Godwin admits to changing his mind on a variety of positions, as Malthus acknowledges at one point—as the impetus for his Essay, the work primarily responds to the earlier Political Justice and its final chapter in particular, because Godwin’s futurist speculations (and their more ominous biopolitical subtexts) respond to an “Objection to This System From the Principle of Population,” in the words of the chapter subheading. Godwin replies to this hypothetical objection several years prior to Malthus’s critique, the originality of which was said at the time to consist in his break with eighteenth-century doxa regarding population. Despite their differences, Montesquieu, Hume, Franklin, and Price all agreed that a growing population is the indisputable marker of progress, and the primary sign of a successful nation, since “the more men in the state, the more it flourishes.” And while Johann Süssmilch, an early pioneer of statistical demography, argued for a fixed limit to the planetary carrying capacity in regard to human population, he also inferred that the planet could hold up to six times as many people as his own estimate of the total global population at the time.

Only Robert Wallace, in his 1761 Various Prospects of Mankind, Nature, and Providence—which Marx and Engels would accuse Malthus of plagiarizing—argued that excessive population is an obstacle to human improvement. Wallace offers a prototype for the Godwin/Malthus debate in constructing an elaborate argument for a proto-communist utopian social arrangement, only to undermine his own argument via recourse to the limits of population growth. Godwin invokes Wallace by name, before adverting to Süssmilch and a far-flung future when human beings will have transcended the limits of finitude. Immortals won’t have to reproduce, a point Godwin makes even clearer in both the final edition of Political Justice (1798) and in his first response to the Essay on Population—in an 1801 pamphlet entitled Thoughts Occasioned By The Perusal of Dr. Parr’s Spital Sermon—in which he opts for a minimal population of perfected human beings living in a utopian society, rather than an ever-expanding human population.

While contemporary scholars still read the Godwin/Malthus debate as a simple conflict between progressive optimism and conservative pessimism, we can still discern some peculiar commonalities between the early Godwin of the 1793 Political Justice and Malthus. Godwin’s speculations on human perfectibility represent a bio-perfectionist solution to the problems of population, sex, and embodiment generally—a Promethean program for overcoming Promethean shame—as I sketch above. Malthus rejects perfectibility along with the feasibility of physical immortality and pure rationality, adverting to humanity’s “compound nature,” a variation on original sin. In this vein, he also rejects Godwin’s prediction regarding “the extinction of the passion between the sexes,” which has not “taken place in the five or six thousand years that the world has existed” (92). Yet Malthus—in proffering disciplinary self-restraint in the service of a biopolitical equilibrium between population and food supply—offers another such solution, motivated by antithetical political principles, while operating from a common set of Enlightenment-era assumptions regarding the need to regulate bodies and populations (Foucault 2003). The overlap between these ostensible antagonists should not surprise us, since, as Fredrik Albritton Jonsson notes in his critical genealogy of cornucopianism, “cornucopianism and environmental anxieties have been closely intertwined in theory and practice from the eighteenth century onward” (2014). Albritton Jonsson connects the alternation between cornucopian fantasy and environmental anxiety to the booms and busts of environmental appropriation and capitalist accumulation, while he locates the roots of cornucopia “in the realm of alchemy and natural theology. To overcome the effects of the Fall, Francis Bacon hoped to remake the natural order into a second, artificial world. Such theological and alchemical aspirations were intertwined with imperial ideology” (Albritton Jonsson 2014, 167). This strange convergence is most evident in Malthus’s own vision of progress and growth—driven exactly by the population pressure and scarcity that serve as analogue for the early Godwin’s reason—which Malthus, a pioneering apologist for industrial capitalism, did not reject, despite later misrepresentations.

IV

Both Marx and Engels would later discern in Malthus’s ostensibly scientific outline of nature’s positive checks on the poor—aimed at both the eighteenth-century British Poor Laws and various Enlightenment-era visions of social improvement—the primacy of surplus population and a reserve army of the unemployed for a nascent industrial capitalism, as Engels notably “summarizes” Malthus’s argument in his Condition of the Working Class in England (1845):

If, then, the problem is not to make the ‘surplus population’ useful, … but merely to let it starve to death in the least objectionable way, … this, of course, is simple enough, provided the surplus population perceives its own superfluousness and takes kindly to starvation. There is, however, in spite of the strenuous exertions of the humane bourgeoisie, no immediate prospect of its succeeding in bringing about such a disposition among the workers. The workers have taken it into their heads that they, with their busy hands, are the necessary, and the rich capitalists, who do nothing, the surplus population.

Despite the transparently political impetus behind Malthus’s Essay, his work was taken up by a certain segment of the environmental movement in the twentieth century. These same environmentalists often read and reject both Marx’s and Engels’s critiques of Malthusian political economy, with the disastrous environmental record of orthodox communist and specifically Soviet Prometheanism in mind. John Bellamy Foster notes that many “ecological socialists” have gone so far as to argue that Marx and Engels were guilty of “a Utopian overreaction to Malthusian epistemic conservatism” which led them to downplay (or deny) “any ultimate natural limits to population” and indeed natural limits in general. Faced with Malthusian natural limits, we are told, Marx and Engels responded with “‘Prometheanism’—a blind faith in the capacity of technology to overcome all ecological barriers” (Foster 1998).

While Marx rejected a fixed and universal law of population growth or food production, stressing instead how population increases and agricultural yields vary from one socio-material context to another, he accepted ecological limits—to soil fertility, for example—in his theory of metabolic rift, as both Foster (2000) and Kohei Saito (2017) demonstrate in their respective projects on Marx’s ecology.

This perspective was arguably anticipated by the later Godwin himself, in the long and now forgotten Enquiry Concerning Population (1820), written at the urging of his son-in-law Percy Shelley, in order to salvage his reputation from Malthus’s attacks; Malthus was awarded the first chair in political economy at the East India Company College in Hertfordshire, while Godwin’s utopian philosophy was fading from the public consciousness, when it was not an explicit object of ridicule. Godwin returned to the absurdity of Malthus’s theological fixation on the human inability to resist the sexual urge, with a special emphasis on the poor, which we can see in his first response to Malthus’s Essay in the 1790s, although in a more openly vitriolic fashion, perhaps at the urging of Shelley, for whom the Malthusian emphasis on abstinence and chastity among the poor was “seeking to deny them even the comfort of sexual love” in addition to “keeping them ignorant, miserable, and subservient” (St. Clair 1989, 464). Shelley, unlike the young Godwin of the 1793 Political Justice that influenced the poet’s radical political development, saw in unrestrained sexual intercourse a vehicle of communion with nature.

The older Godwin offers, in his Of Population, 600 pages of historical accounts and reports regarding population and agriculture—an empiricist overcorrection to Malthus’s accusations of visionary rationalism—in order to show us the variability of different social metabolisms, the efficacy of birth control, and, most importantly, how utopian social organization can and must be built with biophysical limits in mind against “the occult and mystical state of population” in Malthus’s thinking (Godwin 1820, 476). More than a response to Malthus, this later work also represents a rejoinder to the young proto-accelerationist Godwin, one that nevertheless retains most of his radical social and political commitments. Of Population troubles the earlier Malthusian-Godwinian binary that arguably still underwrites our present-day Anthropocene narrative and the standard historiography of the English Industrial Revolution.

In 1798, Malthus argued in favor of population and resource constraints, for largely ideological reasons, at the exact moment that the steam engine and the widespread adoption of fossil energy, in the form of coal, enabled what seemed like self-sustaining growth, seemingly rendering that paradigm obsolete. But Malthus also argues, toward the end of the Essay, that just as the “first great awakeners of the mind seem to be the wants of the body,” so necessity is “the mother of invention” (Malthus 1798, 95) and progress. Malthus’s myth of capitalist modernity, the negative image of perfectibilism, underwrites the political economy of industrialization. Malthus stressed the power of natural necessity—scarcity and struggle—to compel human accomplishment, against the universal luxury proffered by the perfectibilists.

Like the good bourgeois moralist he was, Malthus saw in the individual and collective struggle against scarcity—laws of population that function as secularized analogues for original sin—the drivers of technological development and material growth. This is a familiar story of progress and one that, no less than the perfectibilists’ teleological arc of history, elides conflict and contingency in rendering the rise of industrial capitalism and Euro-American capitalism as both natural and inevitable. For example, E. A. Wrigley argues, in a substantively Malthusian vein, that it was overpopulation, land exhaustion, and food scarcity in eighteenth-century England that necessitated the use of coal as an engine for growth, the invention of the steam engine in 1784, and widespread adoption of fossil power over the next century. Prometheans left and right nonetheless use the term “Malthusian” as synonym for (equally imprecise) “primitivist” or Luddite. But, as Andreas Malm persuasively contends, our dominant narratives of technological progress proceed from assumptions inherited from Malthus (and his disciple Ricardo): “Coal resolved a crisis of overpopulation. Like all innovations that composed the Industrial Revolution, the outcome was a valiant struggle of ‘a society with its back to ecological wall’” (Malm 2016, 23).

Malthus’s force of necessity is here indistinguishable from Godwinian Progress, spurring on the inevitable march of innovation, without any mention of the extent to which technological development, in England and the capitalist west, was and is shaped by capitalist imperatives, such as the quest for profit or labor discipline. We can see this same dynamic at play in much present-day Anthropocene discourse, some of whose exponents trace a direct line from the discovery of fire to the human transformation of the biosphere. These “Anthropocenesters” oscillate between a Godwinian-accelerationist pole—best exemplified by would-be accelerationists and ecomodernists like Mark Lynas (2011), who wholeheartedly embraces the role of Godwin avatar Victor Frankenstein in arguing how we must assume our position as the God species and completely reengineer the planet we have remade in our own image—and a Malthusian-pessimist pole, according to which all we can do now is learn how to die with the planet we have undone, to paraphrase the title of Roy Scranton’s popular Learning How to Die in the Anthropocene (2015).[9]

Rather than the enforced austerity conjured up by cornucopians and neo-Prometheans across the ideological spectrum when confronted with the biophysical limits now made palpable by our global ecological catastrophe, we must pursue a radical social and political project under these limits and conditions. Indeed, a decelerationist socialism might be the only way to salvage human civilization and creaturely life while repairing the biosphere of which both are parts: utopia among the ruins. While all the grand radical programs of the modern era, including Godwin’s own early perfectibilism, have been oriented toward the future, this project must contend with the very real burden of the past, as Malm notes: “every conjuncture now combines relics and arrows, loops and postponements that stretch from the deepest past to the most distant future, via a now that is non-contemporaneous with itself” (Malm 2016, 8).

The warming effects of coal or oil burnt in the past misshape our collective present and future, due to the cumulative effects of CO2 in the atmosphere, even if—for example—all carbon emissions were to stop tomorrow. Global warming in this way represents the weight of those dead generations and a specific tradition—fossil capitalism and its self-sustaining growth—as a literal gothic nightmare, one that will shape any viable post-carbon and post-capitalist future.

Perhaps the post-accelerationist Godwin of the later 1790s and afterward is instructive in this regard. Although chastened by the death of his wife, the collapse of the French Revolution, and the campaign of vilification aimed at him and fellow radicals—in addition to the debate with Malthus outlined here—Godwin nonetheless retained the most important of his emancipatory commitments, as outlined in the 1793 Political Justice, even as he recognized physical constraints, the value of the past, and the primacy of affective bonds in building communal life. In a long piece, published in 1809, entitled Essay On Sepulchres, Or, A Proposal For Erecting Some Memorial of the Illustrious Dead in All Ages on the Spot, for example, Godwin reveals his new intellectual orientation in arguing for the physical commemoration of the dead; against a purely rationalist or moral remembrance of the deceased’s accomplishments and qualities, and against the younger Godwin’s horror of the body and its imperfections, the older man underlines the importance of our physical remains and the visceral attachments they engender: “It is impossible therefore that I should not follow by sense the last remains of my friend; and finding him no where above the surface of the earth, should not feel an attachment to the spot where his body has been deposited in the earth” (Godwin 1809, 4).

These ruminations follow a preface in which Godwin reaffirms his commitment to the utopian anarchism of Political Justice, with the caveat that any radical future must both recognize the past and remember the dead. He draws a tacitly anti-Promethean line between our embodied mortality and utopian political aspiration, severing the two often antithetical modes of progress that constitute a dialectic of European enlightenment. While first-generation Romantics, such as Wordsworth and Coleridge, abandoned the futurist Godwinism of their youth, alongside their “Jacobin” political sympathies, for an ambivalent conservatism, the second generation of Romantics, including the extended Godwin-Shelley circle, combined the emancipatory social and political commitments of Political Justice with an appreciation of the natural world and its limits. One need look no further than Frankenstein and Prometheus Unbound—the Shelleys’ revisionist interrogations of the Prometheus myth and modern Prometheanism, which should be read together—to see how this radical romantic constellation represents a bridge between 1790s-era utopianism and later radicalisms, including Marxism and ecosocialism.[10] And if we group the later Godwin with these second-generation Romantics, then Michael Löwy and Robert Sayre’s reading of radical Romanticism as critical supplement to enlightenment makes perfect sense (see Löwy and Sayre 2001).

Instead of the science fictional fantasies of total automation and decoupling, largely derived from the pre-Marxist socialist utopianisms that drive today’s various accelerationisms, this Romanticism provides one historical resource for thinking through a decelerationist radicalism that dispenses with the grand progressive narrative: the linear, self-sustaining, and teleological model of improvement, understood in the quantitative terms of more, shared by capitalist and state socialist models of development. Against Prometheanism both old and new, let us reject the false binaries and shared assumptions inaugurated by the Godwin/Malthus debate, and instead join hands with the Walter Benjamin of the “Theses on the Philosophy of History” (1940) in order to better pull the emergency brake on a runaway capitalist modernity rushing headlong into the precipice.

_____

Anthony Galluzzo earned his PhD in English Literature at UCLA. He specializes in radical transatlantic English-language literary cultures of the late eighteenth and nineteenth centuries. He has taught at the United States Military Academy at West Point, Colby College, and NYU.

_____

Notes

[1] The “Dark Enlightenment” is a term coined by accelerationist and “neo-reactionary” Nick Land to describe his own orientation as well as that of authoritarian futurist Curtis Yarvin. The term is often used to describe a range of technofuturist discourses that blend libertarian, authoritarian, and, in the case of “left” accelerationism, post-Marxist elements with a belief in technological transcendence. For a good overview, see Haider (2017).

[2] This is a simplification of Blumenberg’s point in his Paradigms for a Metaphorology:

Metaphors can first of all be leftover elements, rudiments on the path from mythos to logos; as such, they indicate the Cartesian provisionality of the historical situation in which philosophy finds itself at any given time, measured against the regulative ideality of the pure logos. Metaphorology would here be a critical reflection charged with unmasking and counteracting the inauthenticity of figurative speech. But metaphors can also—hypothetically, for the time being—be foundational elements of philosophical language, ‘translations’ that resist being converted back into authenticity and logicality. If it could be shown that such translations, which would have to be called ‘absolute metaphors’, exist, then one of the essential tasks of conceptual history (in the thus expanded sense) would be to ascertain and analyze their conceptually irredeemable expressive function. Furthermore, the evidence of absolute metaphors would make the rudimentary metaphors mentioned above appear in a different light, since the Cartesian teleology of logicization in the context of which they were identified as ‘leftover elements’ in the first place would already have foundered on the existence of absolute translations. Here the presumed equivalence of figurative and ‘inauthentic’ speech proves questionable; Vico had already declared metaphorical language to be no less ‘proper’ than the language commonly held to be such, only lapsing into the Cartesian schema in reserving the language of fantasy for an earlier historical epoch. Evidence of absolute metaphors would force us to reconsider the relationship between logos and the imagination.
The realm of the imagination could no longer be regarded solely as the substrate for transformations into conceptuality—on the assumption that each element could be processed and converted in turn, so to speak, until the supply of images was used up—but as a catalytic sphere from which the universe of concepts continually renews itself, without thereby converting and exhausting this founding reserve. (Blumenberg 2010, 3-4)

[3] Davidson situates Godwin, and his ensuing debate with Thomas Malthus on the limits to human population growth and improvement, within a longer eighteenth-century argument regarding perfectibility, the nature of human nature, and the extent to which we are constrained by our biological inheritance. Davidson contends that later models of eugenics and recognizably modern schemes for human enhancement or perfection emerge in the eighteenth, rather than the nineteenth, century, preceding Darwin and Mendel by more than a century. See Davidson (2009), 165.

[4] Horkheimer and Adorno’s Dialectic of Enlightenment (1947), with its critique of enlightenment as domination and instrumental rationality, is the classic text here.

[5] Benjamin famously argues for the emancipatory potential of mechanical reproducibility—of the image—in new visual media, such as film, against the unique “aura” of the original artwork. Benjamin sees in artistic aura a secularized version of the sacred object at the center of religious ritual. I am, of course, simplifying a complex argument that Benjamin himself would later qualify, especially as regards modern industrial technology, new media, and revolution. Anders—Benjamin’s cousin and first husband of Hannah Arendt, who introduced Benjamin’s work to the English-speaking world—pushes this line of argument in a radically different direction, as human beings in the developed world increasingly feel “obsolete” on account of their perishable irreplaceability—a variation and inversion of artistic and religious aura, since “singularity” here is bound up with transience and imperfection—as compared to the assembly line proliferation of copies, all of which embody an immaterial model in the service of “industrial-Platonism” in Anders’s coinage. See Anders (2016), 53. See also Benjamin, “The Work of Art in The Age of Mechanical Reproduction” (1939).

[6] This shift, which includes a critique of what I am calling the Promethean or proto-futurist dimension of the early Godwin, is best exemplified in Godwin’s St. Leon, his second novel, which recounts the story of an alchemist who sacrifices his family and his sanity for the sake of immortality and supernatural power: a model for his daughter’s Frankenstein (Shelley 1818).

[7] I use Foucault’s descriptive models here with the caveat that, contrary to Foucault’s own account, these techniques—of the sovereign, disciplinary, or biopolitical sort—should be anchored in specific socio-economic modes of organization as opposed to his diffuse “power.” Nor is this list of techniques exhaustive.

[8] One recent example of this is the vogue for a reanimated Russian Cosmism among Silicon Valley technologists and the accelerationists of the art and para-academic worlds alike. The original cosmists of the early Soviet period managed to recreate heterodox Christian and Gnostic theologies in secular and ostensibly materialist and/or Marxist-Leninist forms, i.e., God doesn’t exist, but we will become Him; with our liberated forces of production, we will make the universal resurrection of the dead a reality. The latter is now an obsession of various tech entrepreneurs such as Peter Thiel, who have invested money in “parabiosis” start-ups, for instance. One contribution to a recent e-flux collection on (neo)cosmism and resurrection admits that cosmism is “biopolitics because it is concerned with the administration of life, rejuvenation, and even resurrection. Furthermore, it is radicalized biopolitics because its goals are ahead of the current normative expectations and extend even to the deceased” (Steyerl and Vidokle 2018, 33). Frankensteinism is real, apparently. But Frankensteinism in the service of what? For a good overview of the newest futurism and its relationship to social and ecological catastrophe, see Pein (2015).

[9] Lynas and Scranton arguably exemplify these antithetical poles, although the latter has recently expressed some sympathy for something like revolutionary pessimism, very much in line with the decelerationist perspective that animates this essay. In a 2018 New York Times editorial called “Raising My Child in a Doomed World,” he writes: “there is some narrow hope that revolutionary socio-economic transformation today might save billions of human lives and preserve global civilization as we know it in more or less recognizable form, or at least stave off human extinction” (Scranton 2018). Also see Scranton, Learning to Die in the Anthropocene (2015) and Lynas (2011).

The eco-modernists, who include Ted Nordhaus, Michael Shellenberger, and Stewart Brand, are affiliated with the Breakthrough Institute, a California-based environmental think tank. They are, according to their mission statement, “progressives who believe in the potential of human development, technology, and evolution to improve human lives and create a beautiful world.” The development of this potential is, in turn, predicated on “new ways of thinking about energy and the environment.” Luckily, these ecomoderns have published their own manifesto in which we learn that these new ways include embracing “the Anthropocene” as a good thing.

This “good Anthropocene” provides human beings a unique opportunity to improve human welfare, and protect the natural world in the bargain, through a further “decoupling” from nature, at least according to the ecomodernist manifesto. The ecomodernists extol the “role that technology plays” in making humans “less reliant upon the many ecosystems that once provided their only sustenance, even as those same ecosystems have been deeply damaged.” The ecomodernists reject natural limits of any sort. They recommend our complete divorce from the natural world, like soul from body, although, as they constantly reiterate, this time it is for nature’s own good. How can human beings completely “decouple” from a natural world that is, in the words of Marx, our “inorganic body” outside of species-wide self-extinction, which is current policy? The eco-modernists’ policy proposals run the gamut from a completely nuclear energy economy and more intensified industrial agriculture to insufficient or purely theoretical (non-existent) solutions to our environmental catastrophe, such as geoengineering or cold fusion reactors (terraforming Mars, I hope, will appear in the sequel). This rebooted Promethean vision is still ideologically useful, while the absence of any analysis of modernization as a specifically capitalist process is telling. In the words of Chris Smaje (2015),

Ecomodernists offer no solutions to contemporary problems other than technical innovation and further integration into private markets which are structured systematically by centralized state power in favour of the wealthy, in the vain if undoubtedly often sincere belief that this will somehow help alleviate global poverty. They profess to love humanity, and perhaps they do, but the love seems to curdle towards those who don’t fit with its narratives of economic, technological and urban progress. And, more than humanity, what they seem to love most of all is certain favoured technologies, such as nuclear power.

[10] Terrence Hoagwood (1988), for example, argues for Shelley’s philosophical significance as a bridge between 1790s radicalism and dialectical materialism.

_____

Works Cited

- Anders, Günther. 2016. Prometheanism: Technology, Digital Culture, and Human Obsolescence, ed. Christopher John Müller. London: Rowman and Littlefield.

- Badiou, Alain. 2008. “Is the Word Communism Forever Doomed?” Lacanian Ink lecture (Nov).

- Benjamin, Walter. (1939) 1969. “The Work of Art in The Age of Mechanical Reproduction.” In Illuminations. New York: Schocken Books. 217-241.

- Benjamin, Walter. (1940) 1969. “Theses on the Philosophy of History.” In Illuminations. New York: Schocken Books. 253-265.

- Blumenberg, Hans. 2010. Paradigms for a Metaphorology, trans. Robert Savage. Ithaca: Cornell UP.

- Brassier, Ray. 2014. “Prometheanism and Its Critics.” In Mackay and Avanessian (2014). 467-488.

- Davidson, Jenny. 2008. Breeding: A Partial History of the Eighteenth Century. New York: Columbia UP.

- Engels, Friedrich. (1845) 2009. The Condition of the Working Class in England. Ed. David McLellan. New York: Penguin.

- Foster, John Bellamy. 1998. “Malthus’ Essay on Population at Age 200.” Monthly Review (Dec 1).

- Foster, John Bellamy. 2000. Marx’s Ecology: Materialism and Nature. New York: Monthly Review Press.

- Foucault, Michel. 2003. “Society Must Be Defended”: Lectures At The College de France 1975-1976. Trans. David Macey. New York: Picador.

- Haider, Shuja. 2017. “The Darkness at the End of the Tunnel: Artificial Intelligence and Neoreaction.” Viewpoint (Mar 28).

- Hauskeller, Michael. 2014. Sex and the Posthuman Condition. Hampshire: Palgrave.

- Higgs, Kerryn. 2014. Collision Course: Endless Growth on a Finite Planet. Cambridge: The MIT Press.

- Hoagwood, Terrence Allan. 1988. Skepticism and Ideology: Shelley’s Political Prose and Its Philosophical Context From Bacon to Marx. Iowa City: University of Iowa Press.

- Horkheimer, Max, and Theodor Adorno. (1947) 2007. Dialectic of Enlightenment. Stanford: Stanford University Press.

- Jonsson, Fredrik Albritton. 2014. “The Origins of Cornucopianism: A Preliminary Genealogy.” Critical Historical Studies (Spring).

- Kurzweil, Ray. 2006. The Singularity Is Near: When Humans Transcend Biology. New York: Penguin Books.

- Löwy, Michael, and Robert Sayre. 2001. Romanticism Against the Tide of Modernity. Durham: Duke University Press.

- Lynas, Mark. 2011. The God Species: Saving the Planet in the Age of Humans. Washington DC: National Geographic Press.

- Mackay, Robin and Armen Avanessian, eds. 2014. #Accelerate: The Accelerationist Reader. Falmouth, UK: Urbanomic.

- Malm, Andreas. 2016. Fossil Capital. London: Verso.

- Malthus, Thomas. (1798) 2015. An Essay on the Principle of Population. In An Essay on the Principle of Population and Other Writings, ed. Robert Mayhew. New York: Penguin.

- Mayhew, Robert. 2014. Malthus: The Life and Legacies of an Untimely Prophet. Cambridge: Harvard University Press.

- Merchant, Carolyn. 1980. The Death of Nature: Women, Ecology, and the Scientific Revolution. Reprint edition, New York: HarperOne, 1990.

- Pein, Corey. 2015. “Cyborg Soothsayers of the High-Tech Hogwash Emporia: In Amsterdam with the Singularity.” The Baffler 28 (July).

- Philp, Mark. 1986. Godwin’s Political Justice. Ithaca: Cornell UP.

- Preu, James. 1959. The Dean and the Anarchist. Tallahassee: Florida State University.

- Saito, Kohei. 2017. Karl Marx’s Ecosocialism: Capital, Nature, and the Unfinished Critique of Political Economy. New York: Monthly Review Press.

- Scranton, Roy. 2018. “Raising My Child in a Doomed World.” The New York Times (Jul 16).

- Scranton, Roy. 2015. Learning to Die in the Anthropocene. San Francisco: City Lights.

- Shelley, Mary. (1818) 2012. Frankenstein: The Original 1818 Edition, eds. D. L. Macdonald and Kathleen Scherf. Ontario: Broadview Press.

- Smaje, Chris. 2015. “Ecomodernism: A Response to My Critics.” Resilience (Sep 10).

- St. Clair, William. 1989. The Godwins and The Shelleys: A Biography of a Family. Baltimore: Johns Hopkins UP.

- Steyerl, Hito and Anton Vidokle. 2018. “Cosmic Catwalk and the Production of Time.” In Art Without Death: Conversations on Cosmism. New York: Sternberg Press/e-Flux.

- Thelwall, John. 2008. Selected Political Writings of John Thelwall, Volume Two. London: Pickering & Chatto.

- Thompson, E. P. 1978. The Poverty of Theory: or An Orrery of Errors. London: Merlin Press.

- Williams, Alex and Nick Srnicek. 2013. “#Accelerate: A Manifesto for Accelerationist Politics.” Also in Mackay and Avanessian (2014). 347-362.

- Winner, Langdon. 1977. Autonomous Technology: Technics-out-of-Control as a Theme in Political Thought. Cambridge, MA: The MIT Press.

works by William Godwin