Zachary Loeb

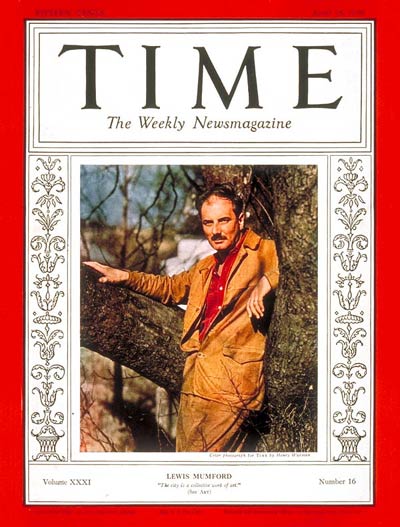

Without even needing to look at the copyright page, an aware reader may be able to date the work of a technology critic simply by considering the technological systems, or forms of media, being critiqued. Unfortunately, in discovering the date of a given critique one may be tempted to conclude that the critique itself must surely be dated. Past critiques of technology may be read as outdated curios, considered prescient warnings that have gone unheeded, or blithely disregarded as the pessimistic braying of inveterate doomsayers. Yet, in the case of Lewis Mumford, even though his activity peaked by the mid-1970s, it would be a mistake to deduce from this that his insights are of no value to the world of today. Indeed, when it comes to the “digital turn,” it is a “turn” in the road which Mumford saw coming.

It would be reductive to simply treat Mumford as a critic of technology. His body of work includes literary analysis, architectural reviews, treatises on city planning, iconoclastic works of history, impassioned calls to arms, and works of moral philosophy (Mumford 1982; Miller 1989; Blake 1990; Luccarelli 1995; Wojtowicz 1996). Leo Marx described Mumford as “a generalist with strong philosophic convictions,” one whose body of work represents the steady unfolding of “a single view of reality, a comprehensive historical, moral, and metaphysical—one might say cosmological—doctrine” (L. Marx 1990: 167). In the opinion of the literary scholar Charles Molesworth, Mumford is an “axiologist with a clear social purpose: he wants to make available to society a better and fuller set of harmoniously integrated values” (Molesworth 1990: 241), while Christopher Lehmann-Haupt caricatured Mumford as “perhaps our most distinguished flagellator,” and Lewis Croser denounced him as a “prophet of doom” who “hates almost all modern ideas and modern accomplishments without discrimination” (Mendelsohn 1994: 151-152). Perhaps Mumford is captured best by Rosalind Williams, who identified him alternately as an “accidental historian” (Williams 1994: 228) and as a “cultural critic” (Williams 1990: 44), or by Don Ihde, who referred to him as an “intellectual historian” (Ihde 1993: 96). As for Mumford’s own views, he saw himself in the mold of the prophet Jonah, “that terrible fellow who keeps on uttering the very words you don’t want to hear, reporting the bad news and warning you that it will get even worse unless you yourself change your mind and alter your behavior” (Mumford 1979: 528).

Therefore, in the spirit of this Jonah let us go see what is happening in Nineveh after the digital turn. Drawing upon Mumford’s oeuvre, particularly the two-volume The Myth of the Machine, this paper investigates similarities between Mumford’s concept of “the megamachine” and the post digital-turn technological world. In drawing out these resonances, I pay particular attention to the ways in which computers featured in Mumford’s theorizing of the “megamachine” and informed his darkening perception. In addition, I expand upon Mumford’s concept of “the megatechnic bribe” to argue that, after the digital turn, what takes place is a move from “the megatechnic bribe” towards what I term “megatechnic blackmail.”

In a piece provocatively titled “Prologue for Our Times,” which originally appeared in The New Yorker in 1975, Mumford drolly observed: “Even now, perhaps a majority of our countrymen still believe that science and technics can solve all human problems. They have no suspicion that our runaway science and technics themselves have come to constitute the main problem the human race has to overcome” (Mumford 1975: 374). The “bad news” is that more than forty years later a majority may still believe that.

Towards “The Megamachine”

The two-volume Myth of the Machine was not Mumford’s first attempt to put forth an overarching explanation of the state of the world mixing cultural criticism, historical analysis, and free-form philosophizing; he had previously attempted a similar feat with his Renewal of Life series.

Mumford originally planned the work as a single volume, but soon came to realize that this project was too ambitious to fit within a single book jacket (Miller 1989, 299). The Renewal of Life ultimately consisted of four volumes: Technics and Civilization (1934), The Culture of Cities (1938), The Condition of Man (1944), and The Conduct of Life (1951)—of which Technics and Civilization remains the text that has received the greatest continued attention. A glance at the nearly twenty-year period encompassed in the writing of these four books should make it obvious that they were written during a period of immense change and upheaval in the world and this certainly impacted the shape and argument of these books. These books fall evenly on opposite sides of two events that were to have a profound influence on Mumford’s worldview: the 1944 death of his son Geddes on the Italian front during World War II, and the dropping of atomic bombs on Hiroshima and Nagasaki in 1945.

The four books fit oddly together and reflect Mumford’s steadily darkening view of the world—a pendulous swing from hopefulness to despair (Blake 1990, 286-287). With the Renewal of Life, Mumford sought to construct a picture of the sort of “whole” which could develop such marvelous potential, but which was so morally weak that it wound up using that strength for destructive purposes. Unwelcome though Mumford’s moralizing may have been, it was an attempt, albeit from a tragic perspective (Fox 1990), to explain why things were the way that they were, and what steps needed to be taken for positive change to occur. That the changes that were taking place were those which, in Mumford’s estimation, were for the worse propelled him to develop concepts like “the megamachine” and the “megatechnic bribe” to explain the societal regression he was witnessing.

By the time Mumford began work on The Renewal of Life he had already established himself as a prominent architectural critic and public intellectual. Yet he remained outside of any distinct tradition, school, or political ideology. Mumford was an iconoclastic thinker whose ethically couched regionalist radicalism, influenced by the likes of Ebenezer Howard, Thorstein Veblen, Peter Kropotkin and especially Patrick Geddes, placed him at odds with liberals and socialists alike in the early decades of the twentieth century (Blake 1990, 198-199). For Mumford the prevailing progressive and radical philosophies had been buried amongst the rubble of World War I and he felt that a fresh philosophy was needed, one that would find in history the seeds for social and cultural renewal, and Mumford thought himself well-equipped to develop such a philosophy (Miller 1989, 298-299). Mumford was hardly the first in his era to attempt such a synthesis (Lasch 1991): by the time Mumford began work on The Renewal of Life, Oswald Spengler had already published a grim version of such a new philosophy (300). Indeed, there is something of a perhaps not-accidental parallel between Spengler’s title The Decline of the West and Mumford’s choice of The Renewal of Life as the title for his own series.

In Mumford’s estimation, Spengler’s work was “more than a philosophy of history”; it was “a work of religious consolation” (Mumford 1938, 218). The two volumes of The Decline of the West are monuments to Prussian pessimism in which Spengler argues that cultures pass “from the organic to the inorganic, from spring to winter, from the living to the mechanical, from the subjectively conditioned to the objectively conditioned” (220). Spengler argued that this is the fate of all societies, and he believed that “the West” had entered into its winter. It is easy to read Spengler’s tracts as woebegone anti-technology dirges (Farrenkopf 2001, 110-112), or as a call for “Faustian man” (Western man) to assert dominance over the machine and wield it lest it be wielded against him (Herf 1984, 49-69); but Mumford observed that Spengler had “predicted, better than more hopeful philosophers, the disastrous downward course that modern civilization is now following” (Mumford 1938, 235). Spengler had been an early booster of the Nazi regime, if a later critic of it, and though Mumford criticized Spengler for the politics he helped unleash, Mumford still saw him as one with “much to teach the historian and the sociologist” (Mumford 1938, 227). Mumford was particularly drawn to, and influenced by, Spengler’s method of writing moral philosophy in the guise of history (Miller 1989, 301). And it may well be that Spengler’s woebegone example prompted Mumford to distance himself from being a more “hopeful” philosopher in his later writings. Nevertheless, where Spengler had gazed longingly towards the coming fall, Mumford, even in the grip of the megamachine, still believed that the fall could be avoided.

Mumford concludes the final section of The Renewal of Life, called The Conduct of Life, with measured optimism, noting: “The way we must follow is untried and heavy with difficulty; it will test to the utmost our faith and our powers. But it is the way toward life, and those who follow it will prevail” (Mumford 1951, 292). Alas, as the following sections will demonstrate, Mumford grew steadily less confident in the prospects of “the way toward life,” and the rise of the computer only served to make the path more “heavy with difficulty.”

The Megamachine

The volumes of The Renewal of Life hardly had enough time to begin gathering dust before Mumford was writing another work that sought to explain why the prophesied renewal had not come. In the two volumes of The Myth of the Machine Mumford revisits the themes from The Renewal of Life while advancing an even harsher critique and developing his concept of the “megamachine.” The idea of the megamachine has been taken up for its explanatory potential by many others beyond Mumford in a range of fields: it was drawn upon by some of his contemporary critics of technology (Fromm 1968; Illich 1973; Ellul 1980), has been commented on by historians and philosophers of technology (Hughes 2004; Jacoby 2005; Mitcham 1994; Segal 1994), has been explored in post-colonial thinking (Alvares 1988), and has sparked cantankerous disagreements amongst those seeking to deploy the term to advance political arguments (Bookchin 1995; Watson 1997). It is a term that shares certain similarities with other concepts that aim to capture the essence of totalitarian technological control, such as Jacques Ellul’s “technique” (Ellul 1967) and Neil Postman’s “technopoly” (Postman 1993). It is an idea that, as I will demonstrate, is still useful for describing, critiquing, and understanding contemporary society.

Mumford first gestured in the direction of the megamachine in his 1964 essay “Authoritarian and Democratic Technics” (Mumford 1964). There Mumford argued that small scale technologies which require the active engagement of the human, that promote autonomy, and that are not environmentally destructive are inherently “democratic” (2-3); while large scale systems that reduce humans to mere cogs, that rely on centralized control and are destructive of planet and people, are essentially “authoritarian” (3-4). For Mumford, the rise of “authoritarian technics” was a relatively recent occurrence; however, by “recent” he had in mind “the fourth millennium B.C.” (3). Though Mumford considered “nuclear bombs, space rockets, and computers” all to be examples of contemporary “authoritarian technics” (5), he considered the first examples of such systems to have appeared under the aegis of absolute rulers who exploited their power and scientific knowledge for immense construction feats such as the building of the pyramids, as those endeavors had created “complex human machines composed of specialized, standardized, replaceable, interdependent parts—the work army, the military army, the bureaucracy” (3). In drawing out these two tendencies, Mumford was clearly arguing in favor of “democratic technics,” but he moved away from these terms once he coined the neologism “megamachine.”

Like the Renewal of Life before it, The Myth of the Machine was originally envisioned as a single book (Mumford 1970, xi). The first volume of the two represents something of a rewriting of Technics and Civilization, but gone from Technics and Human Development is the optimism that had animated the earlier work. By 1959 Mumford had dismissed Technics and Civilization as “something of a museum piece” wherein he had “assumed, quite mistakenly, that there was evidence for a weakening of faith in the religion of the machine” (Mumford 1934, 534). As Mumford wrote The Myth of the Machine he found himself looking at decades of so-called technological progress and seeking an explanation as to why this progress seemed to primarily consist of mountains of corpses and rubble.

With the rise of kingship, in Mumford’s estimation, so too came the ability to assemble and command people on a scale that had been previously unknown (Mumford 1967, 188). This “machine” functioned by fully integrating all of its components to complete a particular goal and “when all the components, political and economic, military, bureaucratic and royal, must be included” what emerges is “the megamachine” and along with it “megatechnics” (188-189). It was a structure in which, originally, the parts were not made of steel, glass, stone or copper but flesh and blood—though each human component was assigned and slotted into a position as though they were a cog. While the fortunes of the megamachine ebbed and flowed for a period, Mumford saw the megamachine as becoming resurgent in the 1500s as faith in the “sun god” came to be replaced by the “divine king” exploiting new technical and scientific knowledge (Mumford 1970: 28-50). Indeed, in assessing the thought of Hobbes, Mumford goes so far as to state “the ultimate product of Leviathan was the megamachine, on a new and enlarged model, one that would completely neutralize or eliminate its once human parts” (100).

Unwilling to mince words, Mumford had started The Myth of the Machine by warning that with the “new ‘megatechnics’ the dominant minority will create a uniform, all-enveloping, super-planetary structure, designed for automatic operation” in which “man will become a passive, purposeless, machine-conditioned animal” (Mumford 1967, 3). Writing at the close of the 1960s, Mumford observed that the impossible fantasies of the controllers of the original megamachines were now actual possibilities (Mumford 1970, 238). The rise of the modern megamachine was the result of a series of historic occurrences: the French Revolution, which replaced the power of the absolute monarch with the power of the nation state; World War I, wherein scientists and scholars were brought into service of the state whilst moderate social welfare programs were introduced to placate the masses (245); and finally the emergence of tools of absolute control and destructive power such as the atom bomb (253). Figures like Stalin and Hitler were not exceptions to the rule of the megamachine but only instances that laid bare “the most sinister defects of the ancient megamachine”: its violent, hateful and repressive tendencies (247).

Even though the power of the megamachine may make it seem that resistance is futile, Mumford was no defeatist. Indeed, The Pentagon of Power ends with a gesture towards renewal that is reminiscent of his argument in The Conduct of Life—albeit with a recognition that the state of the world had grown steadily more perilous. A core element of Mumford’s arguments is that the megamachine’s power was reliant on the belief invested in it (the “myth”), but if such belief in the megamachine could be challenged, so too could the megamachine itself (Miller 1989, 156). The Pentagon of Power met with a decidedly mixed reaction: it was chosen as a main selection by the Book-of-the-Month Club and The New Yorker serialized much of the argument about the megamachine (157). Yet, many of the reviewers of the book denounced Mumford for his pessimism; it was in a review of the book in the New York Times that Mumford was dubbed “our most distinguished flagellator” (Mendelsohn 1994, 151-154). And though Mumford chafed at being dubbed a “prophet of doom” (Segal 1994, 149), it is worth recalling that he liked to see himself in the mode of that “prophet of doom” Jonah (Mumford 1979).

After all, even though Mumford held out hope that the megamachine could be challenged—that the Renewal of Life could still beat back The Myth of the Machine—he glumly acknowledged that the belief that the megamachine was “absolutely irresistible” and “ultimately beneficent…still enthralls both the controllers and the mass victims of the megamachine today” (Mumford 1967, 224). Mumford described this myth as operating like a “magical spell,” but as the discussion of the megatechnic bribe will demonstrate, it is not so much that the audience is transfixed as that they are bought off. Nevertheless, before turning to the topic of the bribe and blackmail, it is necessary to consider how the computer fit into Mumford’s theorizing of the megamachine.

The Computer and the Megamachine

Five years after the publication of The Pentagon of Power, Mumford was still claiming that “the Myth of the Machine” was “the ultimate religion of our seemingly rational age” (Mumford 1975, 375). While it is certainly fair to note that Mumford’s “today” is not our today, it would be foolhardy to merely dismiss the idea of the megamachine as anachronistic moralizing. And to credit the megamachine for its full prescience and continued utility, it is worth closely reading the text to consider the ways in which Mumford was writing about the computer—before the digital turn.

Writing to his friend, the British garden city advocate Frederic J. Osborn, Mumford noted: “As to the megamachine, the threat that it now offers turns out to be even more frightening, thanks to the computer, than even I in my most pessimistic moments had ever suspected. Once fully installed our whole lives would be in the hands of those who control the system…no decision from birth to death would be left to the individual” (M. Hughes 1971, 443). It may be that Mumford was merely engaging in a bit of hyperbolic flourish in referring to his view of the computer as trumping his “most pessimistic moments,” but Mumford was no stranger to (or enemy of) pessimistic moments. Mumford was always searching for fresh evidence of “renewal”; his deepening pessimism points to the types of evidence he was actually finding. In constructing a narrative that traced the origins of the megamachine across history Mumford had been hoping to show “that human nature is biased toward autonomy and against submission to technology” (Miller 1990, 157), but in the computer Mumford saw evidence pointing in the opposite direction.

In assessing the computer, Mumford drew a contrast between the basic capabilities of the computers of his day and the direction in which he feared that “computerdom” was moving (Mumford 1970, plate 6). Computers to him were not simply about controlling “the mechanical process” but also “the human being who once directed it” (189). Moving away from historical antecedents like Charles Babbage, Mumford emphasized Norbert Wiener’s attempt to highlight human autonomy and he praised Wiener’s concern for the tendency on the part of some technicians to begin to view the world only in terms of the sorts of data that computers could process (189). Mumford saw some of the enthusiasm for the computer’s capability as being rather “over-rated” and he cited instances—such as the computer failure in the case of the Apollo 11 moon landing—as evidence that computers were not quite as all-powerful as some claimed (190). In the midst of a growing ideological adoration for computers, Mumford argued that their “life-efficiency and adaptability…must be questioned” (190). Mumford’s critiquing of computers can be read as an attempt on his part to undermine the faith in computers when such a belief was still in its nascent cult state—before it could become a genuine world religion.

Mumford does not assume a wholly dismissive position towards the computer. Instead he takes a stance toward it that is similar to his position towards most forms of technology: its productive use “depends upon the ability of its human employers quite literally to keep their own heads, not merely to scrutinize the programming but to reserve the right for ultimate decision” (190). To Mumford, the computer “is a big brain in its most elementary state: a gigantic octopus, fed with symbols instead of crabs,” but just because it could mimic some functions of the human mind did not mean that the human mind should be discarded (Mumford 1967: 29). The human brain was for Mumford infinitely more complex than a computer could be, and even where computers might catch up in terms of quantitative comparison, Mumford argued that the human brain would always remain superior in qualitative terms (39). Mumford had few doubts about the capability of computers to perform the functions for which they had been programmed, but he saw computers as fundamentally “closed” systems whereas the human mind was an “open” one; computers could follow their programs but he did not think they could invent new ones from scratch (Mumford 1970: 191). For Mumford the rise in the power of computers was linked largely to the shift away from the “old-fashioned” machines such as Babbage’s Calculating Engine—and towards the new digital and electric machines which were becoming smaller and more commonplace (188). And though Mumford clearly respected the ingenuity of scientists like Wiener, he amusingly suggested that “the exorbitant hopes for a computer dominated society” were really the result of “the ‘pecuniary-pleasure’ center” (191). While Mumford’s measured consideration of the computer’s basic functioning is important, what is of greater significance is his thinking regarding the computer’s place in the megamachine.

Whereas much of Technics and Human Development focuses upon the development of the first megamachine, in The Pentagon of Power Mumford turns his focus to the fresh incarnation of the megamachine. This “new megamachine” was distinguished by the way in which it steadily did away with the need for the human altogether—now that there were plenty of actual cogs (and computers) human components were superfluous (258). To Mumford, scientists and scholars had become a “new priesthood” who had abdicated their freedom and responsibility as they came to serve the “megamachine” (268). But if they were the “priesthood,” then whom did they serve? As Mumford explained, in the command position of this new megamachine was to be found a new “ultimate ‘decision-maker’ and Divine King,” and this figure had emerged in “a transcendent, electronic form”: it was “the Central Computer” (273).

Writing in 1970, before the rise of the personal computer or the smartphone, Mumford’s warnings about computers may have seemed somewhat excessive. Yet, in imagining the future of “a computer dominated society” Mumford was forecasting that the growth of the computer’s power meant the consolidation of control by those already in power. Whereas the rulers of yore had dreamt of being all-seeing, with the rise of the computer such power ceased being merely a fantasy as “the computer turns out to be the Eye of the reinstated Sun God” capable of exacting “absolute conformity to his demands, because no secret can be hidden from him, and no disobedience can go unpunished” (274). And this “eye” saw a great deal: “In the end, no action, no conversation, and possibly in time no dream or thought would escape the wakeful and relentless eye of this deity: every manifestation of life would be processed into the computer and brought under its all-pervading system of control. This would mean, not just the invasion of privacy, but the total destruction of autonomy: indeed the dissolution of the human soul” (274-275). The mention of “the human soul” may be evocative of a standard bit of Mumfordian moralizing, but the rest of this quote has more to say about companies like Google and Facebook, as well as about the mass surveillance of the NSA, than many things written since. Indeed, there is something almost quaint about Mumford writing of “no action” decades before social media made it so that an action not documented on social media is of questionable veracity. Meanwhile, the comment regarding “no conversation” seems uncomfortably apt in an age where people are cautioned not to disclose private details in front of their smart TVs and in which the Internet of Things populates people’s homes with devices that are always listening.

Mumford may have written these words in the age of large mainframe computers but his comments on “the total destruction of autonomy” and the push towards “computer dominated society” demonstrate that he did not believe that the power of such machines could be safely locked away. Indeed, that Mumford saw the computer as an example of an “authoritarian technic” makes it highly questionable that he would have been swayed by the idea that personal computers could grant individuals more autonomy. Rather, as I discuss below, it is far more likely that he would have seen the personal computer as precisely the sort of democratic-seeming gadget used to “bribe” people into accepting the larger “authoritarian” system. It is precisely through the placing of personal computers in people’s homes, and eventually on their persons, that the megamachine is able to advance towards its goal of total control.

The earlier incarnations of the megamachine had dreamt of the sort of power that became actually available in the aftermath of World War II thanks to “nuclear energy, electric communication, and the computer” (274). And finally the megamachine’s true goal became clear: “to furnish and process an endless quantity of data, in order to expand the role and ensure the domination of the power system” (275). In short, the ultimate purpose of the megamachine was to further the power and enhance the control of the megamachine itself. It is easy to see in this a warning about the dangers of “big data” many decades before that term had entered into common use. Aware of how odd these predictions may have sounded to his contemporaries, Mumford recognized that only a few decades earlier such ideas could have been dismissed as just so much “satire,” but he emphasized that such alarming potentialities were now either already in existence or nearly within reach (275).

In the twenty-first century, after the digital turn, it is easy to find examples of entities that fit the bill of the megamachine. It may, in fact, be easier to do this today than it was during Mumford’s lifetime. For one no longer needs to engage in speculative thinking to find examples of technologies that ensure that “no action” goes unnoticed. The handful of massive tech conglomerates that dominate the digital world today—companies like Google, Facebook, and Amazon—seem almost scarily apt manifestations of the megamachine. Under these platforms “every manifestation of life” gets “processed into the computer and brought under its all-pervading system of control,” whether it be what a person searches for, what they consider buying, how they interact with friends, how they express their likes, what they actually purchase, and so forth. And as these companies compete for data they work to ensure that nothing is missed by their “relentless eye[s].” Furthermore, though these companies may be technology firms they are like the classic megamachines insofar as they bring together the “political and economic, military, bureaucratic and royal.” Granted, today’s “royal” are not those who have inherited their thrones but those who owe their thrones to the tech empires at the heads of which they sit. The status of these platforms’ users, reduced as they are to cogs supplying an endless stream of data, further demonstrates the totalizing effects of the megamachine as it coordinates all actions to serve its purposes. And yet, Google, Facebook, and Amazon are not the megamachine, but rather examples of megatechnics; the megamachine is the broader system of which all of those companies are merely parts.

Though the chilling portrait created by Mumford seems to suggest a definite direction, and a grim final destination, Mumford tried to highlight that such a future “though possible, is not determined, still less an ideal condition of human development” (276). Nevertheless, it is clear that Mumford saw the culmination of “the megamachine” in the rise of the computer and the growth of “computer dominated society.” Thus, “the megamachine” is a forecast of the world after “the digital turn.” Yet, the continuing strength of Mumford’s concept is based not only on the prescience of the idea itself, but also on the way in which Mumford sought to explain how it is that the megamachine secures obedience to its strictures. It is to this matter that our attention, at last, turns.

From the Megatechnic Bribe to Megatechnic Blackmail

To explain how the megamachine had maintained its power, Mumford provided two answers, both of which avoid treating the megamachine as a merely “autonomous” force (Winner 1989, 108-109). The first explanation that Mumford gives is an explanation of the titular idea itself: “the ultimate religion of our seemingly rational age” which he dubbed “the myth of the machine” (Mumford 1975, 375). The key component of this “myth” is “the notion that this machine was, by its very nature, absolutely irresistible—and yet, provided that one did not oppose it, ultimately beneficial” (Mumford 1967, 224)—once assembled and set into action the megamachine appears inevitable, and those living in megatechnic societies are conditioned from birth to think of the megamachine in such terms (Mumford 1970, 331).

Yet, the second part of the myth is equally, if not more, important: it is not merely that the megamachine appears “absolutely irresistible” but that many are convinced that it is “ultimately beneficial.” This feeds into what Mumford described as “the megatechnic bribe,” a concept which he first sketched briefly in “Authoritarian and Democratic Technics” (Mumford 1964, 6) but which he fully developed in The Pentagon of Power (Mumford 1970, 330-334). The “bribe” functions by offering those who go along with it a share in the “perquisites, privileges, seductions, and pleasures of the affluent society” so long, that is, as they do not question or ask for anything different from that which is offered (330). And this, Mumford recognizes, is a truly tempting offer, as it allows its recipients to believe they are personally partaking in “progress” (331). After all, a “bribe” only really works if what is offered is actually desirable. But Mumford warns, once a people opt for the megamachine, once they become acclimated to the air-conditioned pleasure palace of the megatechnic bribe “no other choices will remain” (332).

By means of this “bribe,” the megamachine is able to effect an elaborate bait and switch: one through which people are convinced that an authoritarian technic is actually a democratic one. For the bribe accepts “the basic principle of democracy, that every member of society should have a share in its goods,” (Mumford 1964, 6). Mumford did not deny the impressive things with which people were being bribed, but to see them as only beneficial required, in his estimation, a one-sided assessment which ignored “long-term human purposes and a meaningful pattern of life” (Mumford 1970, 333). It entailed confusing the interests of the megamachine with the interests of actual people. Thus, the problem was not the gadgets as such, but the system in which these things were created, produced, and the purposes for which they were disseminated: the problem was that the true purpose of these things was to incorporate people into the megamachine (334). The megamachine created a strange and hostile new world, but offered its denizens bribes to convince them that life in this world was actually a treat. Ruminating on the matter of the persuasive power of the bribe, Mumford wondered if democracy could survive after “our authoritarian technics consolidates its powers, with the aid of its new forms of mass control, its panoply of tranquilizers and sedatives and aphrodisiacs” (Mumford 1964, 7). And in typically Jonah-like fashion, Mumford balked at the very question, noting that in such a situation “life itself will not survive, except what is funneled through the mechanical collective” (7).

If one chooses to take the framework of the “megatechnic bribe” seriously then it is easy to see it at work in the 21st century. It is the bribe that stands astride the dais at every gaudy tech launch, it is the bribe which beams down from billboards touting the slightly sleeker design of the new smartphone, it is the bribe which promises connection or health or beauty or information or love or even technological protection from the forces that technology has unleashed. The bribe is the offer of the enticing positives that distracts from the legion of downsides. And in all of these cases that which is offered is that which ultimately enhances the power of the megamachine. As Mumford feared, the values that wind up being transmitted across these “bribes,” though they may attempt a patina of concern for moral or democratic values, are mainly concerned with reifying (and deifying) the values of the system offering up these forms of bribery.

Yet this reading should not be taken as a curmudgeonly rejection of technology as such. In keeping with Mumford’s stance, one can recognize that the things put on offer after the digital turn provide people with an impressive array of devices and platforms, but such niceties also seem like the pleasant distraction that masks and normalizes rampant surveillance, environmental destruction, labor exploitation, and the continuing concentration of wealth in a few hands. It is not that there is a total lack of awareness about the downsides of the things that are offered as “bribes,” but that the offer is too good to refuse. And especially if one has come to believe that the technological status quo is “absolutely irresistible,” it makes sense that one would want to conclude that this situation is “ultimately beneficial.” As Langdon Winner put it several decades ago, “the prevailing consensus seems to be that people love a life of high consumption, tremble at the thought that it might end, and are displeased about having to clean up the messes that technologies sometimes bring” (Winner 1986, 51). Such a sentiment is the essence of the bribe.

Nevertheless, it seems that more thought needs to be given to the bribe after the digital turn, the point after which the bribe has already become successful. The background of the Cold War may have provided a cultural space for Mumford’s skepticism, but, as Wendy Hui Kyong Chun has argued, with the technological advances around the Internet in the last decade of the twentieth century, “technology became once again the solution to political problems” (Chun 2006, 25). Therefore, in the twenty-first century the matter is no longer merely one of deploying bribery as a means of securing loyalty to a system of control towards which there is substantial skepticism. Or, to put it slightly differently, at this point there are not many people who still really need to be convinced that they should use a computer. We no longer need to hypothesize about “computer dominated society,” for we already live there. After all, the technological value systems about which Mumford was concerned have now gained significant footholds not only in the corridors of power, but in every pocket that contains a smartphone. It would be easy to walk through the library brimming with e-books touting the wonders of all that is digital and persuasively disseminating the ideology of the bribe, but such “sugar-coated soma pills,” to borrow a turn of phrase from Howard Segal (1994, 188), serve more as examples of the continued existence of the bribe than as explanations of how it has changed.

At the end of her critical history of social media, José Van Dijck (Van Dijck 2013, 174) offers what can be read as an important example of how the bribe has changed, when she notes that “opting out of connective media is hardly an option. The norm is stronger than the law.” On a similar note, Laura Portwood-Stacer in her study of Facebook abstention portrays the very act of not being on that social media platform as “a privilege in itself,” an option that is not available to all (Portwood-Stacer 2012, 14). In interviews with young people, Sherry Turkle has found many “describing how smartphones and social media have infused friendship with the Fear of Missing Out” (Turkle 2015, 145). Though smartphones and social media platforms certainly make up the megamachine’s ecosystem of bribes, what Van Dijck, Portwood-Stacer, and Turkle point to is an important shift in the functioning of the bribe. Namely, that today we have moved from the megatechnic bribe towards what can be called “megatechnic blackmail.”

Whereas the megatechnic bribe was concerned with assimilating people into the “new megamachine,” megatechnic blackmail is what occurs once the bribe has already been largely successful. This is not to claim that the bribe does not still function (for it surely does, through the mountain of new devices and platforms that are constantly being rolled out) but, rather, that it does not work by itself. The bribe is what is at work when something new is being introduced; it is what convinces people that the benefits outweigh any negative aspects, and it matches the sense of “irresistibility” with a sense of “beneficence.” Blackmail, in this sense, works differently: it is what is at work once people become all too aware of the negative side of smartphones, social media, and the like. Megatechnic blackmail is what occurs once, as Van Dijck put it, “the norm” becomes “stronger than the law,” for here it is not the promise of something good that draws someone in but the fear of something bad that keeps people from walking away.

This puts the real “fear” in the “fear of missing out,” which no longer needs to promise “use this platform because it’s great” but can instead now threaten “you know there are problems with this platform, but use it or you will not know what is going on in the world around you.” The shift from bribe to blackmail can further be seen in the consolidation of control in the hands of fewer companies behind the bribes: the inability of an upstart social network (a fresh bribe) to challenge the social network is largely attributable to the latter having moved into a blackmail position. It is no longer the case that a person, in a Facebook-saturated society, has a lot to gain by joining the site, but that (if they have already accepted its bribe) they have a lot to lose by leaving it. The bribe secures the adoration of the early adopters, and it convinces the next wave of users to jump on board, but blackmail is what ensures their fealty once the shiny veneer of the initial bribe begins to wear thin.

Mumford had noted that in a society wherein the bribe was functioning smoothly, “the two unforgivable sins, or rather punishable vices, would be continence and selectivity” (Mumford 1970, 332), and blackmail is what keeps those who would practice “continence and selectivity” in check. As Portwood-Stacer noted, abstention itself may come to be a marker of performative privilege: to opt out becomes a “vice” available only to those who can afford to engage in it. To not have a smartphone, to not have a Facebook account, to not buy things on Amazon, or use Google, becomes either a signifier of one’s privilege or marks one as an outsider.

Furthermore, choosing to renounce a particular platform (or to use it less) rarely entails swearing off the ecosystem of megatechnics entirely. As far as the megamachine is concerned, insofar as options are available and one can exercise a degree of “selectivity,” what matters is that one is still selecting within that which is offered by the megamachine. The choice between competing systems of particular megatechnics is still a choice that takes place within the framework of the megamachine. Thus, Douglas Rushkoff’s call “program or be programmed” (Rushkoff 2010) appears less as a rallying cry of resistance than as a quiet acquiescence: one can program, or one can be programmed, but what is unacceptable is to try to pursue a life outside of programs. Here the turn that seeks to rediscover the Internet’s once emancipatory promise in wikis, crowd-funding, digital currency, and the like speaks to a subtle hope that the problems of the digital day can be defeated by doubling down on the digital. From this technologically optimistic view the problem with companies like Google and Facebook is that they have warped the anarchic promise, violated the independence, of cyberspace (Barlow 1996; Turner 2006); or that capitalism has undermined the radical potential of these technologies (Fuchs 2014; Srnicek and Williams 2015). Yet, from Mumford’s perspective such hopes and optimism are unwarranted. Indeed, they are the sort of democratic fantasies that serve to cover up the fact that the computer, at least for Mumford, was ultimately still an authoritarian technology. For the megamachine it does not matter if the smartphone with a Twitter app is used by the President or by an activist: either use is wholly acceptable insofar as both serve to deepen immersion in the “computer dominated society” of the megamachine. And thus, as to the hope that megatechnics can be used to destroy the megamachine, it is worth recalling Mumford’s quip, “Let no one imagine that there is a mechanical cure for this mechanical disease” (Mumford 1954, 50).

In this situation the only thing worse than falling behind or missing out is to actually challenge the system itself: to practice “continence and selectivity,” or to argue that others should, leads to one being denounced as a “technophobe” or “Luddite.” That kind of derision fits well with Mumford’s observation that the attempt to live “detached from the megatechnic complex,” to be “cockily independent of it, or recalcitrant to its demands, is regarded as nothing less than a form of sabotage” (Mumford 1970, 330). Minor criticisms can be permitted if they are of the type that can be assimilated and used to improve the overall functioning of the megamachine, but the unforgivable heresy is to challenge the megamachine itself. It is acceptable to claim that a given company should be attempting to be more mindful of a given social concern, but it is unacceptable to claim that the world would actually be a better place if this company were no more. One sees further signs of the threat of this sort of blackmail at work in the opening pages of the critical books about technology aimed at the popular market, wherein the authors dutifully declare that though they have some criticisms they are not anti-technology. Such moves are not the signs of people merrily cooperating with the bribe, but of people recognizing that they can contribute to a kinder, gentler bribe (to a greater or lesser extent) or risk being banished to the margins as fuddy-duddies, kooks, environmentalist weirdos, or as people who really want everyone to go back to living in caves. The “myth of the machine” thrives on the belief that there is no alternative. One is permitted (in some circumstances) to say “don’t use Facebook” but one cannot say “don’t use the Internet.” Blackmail is what helps to bolster the structure that unfailingly frames the megamachine as “ultimately beneficial.”

The megatechnic bribe dazzles people by muddling the distinction between, to use a comparison Mumford was fond of, “the goods life” and “the good life.” But megatechnic blackmail warns those who grow skeptical of this patina of “the good life” that they can either settle for “the goods life” or they can look forward to an invisible life on the margins. Those who can’t be bribed are blackmailed. Thus it is no longer just that the myth of the machine is based on the idea that the megamachine is “absolutely irresistible” and “ultimately beneficial” but that it now includes the idea that to push back is “unforgivably detrimental.”

Conclusion

Of the various biblical characters from whom one can draw inspiration, Jonah is something of an odd choice for a public intellectual. After all, Jonah first flees from his prophetic task, sleeps in the midst of a perilous storm, and upon delivering the prophecy retreats to a hillside to glumly wait to see if the prophesied destruction will come. There is a certain degree to which Jonah almost seems disappointed that the people of Nineveh mend their ways and are forgiven by God. Yet some of Jonah’s frustrated disappointment flows from his sense that the whole ordeal was pointless: he had always known that God would forgive the people of Nineveh and not destroy the city. Given that, why did Jonah have to leave the comfort of his home in the first place? (JPS 1999, 1333-1337). Mumford always hoped to be proven wrong. As he put it in the very talk in which he introduced himself as Jonah, “I would die happy if I knew that on my tombstone could be written these words, ‘This man was an absolute fool. None of the disastrous things that he reluctantly predicted ever came to pass!’ Yes: then I could die happy” (Mumford 1979, 528). But those words do not appear on Mumford’s tombstone.

Assessing whether Mumford was “an absolute fool” and whether any “of the disastrous things that he reluctantly predicted ever came to pass” is a tricky mire to traverse. For the way that one responds to that probably has as much to do with whether or not one shares Mumford’s outlook as with anything particular he wrote. During his lifetime Mumford had no shortage of critics who viewed him as a stodgy pessimist. But what is one to expect if one is trying to follow the example of Jonah? If you see yourself as “that terrible fellow who keeps on uttering the very words you don’t want to hear, reporting the bad news and warning you that it will get even worse unless you yourself change your mind and alter your behavior” (528) then you can hardly be surprised when many choose to dismiss you as a way of dismissing the bad news you bring.

Yet it has been the contention of this paper that Mumford should not be ignored, and that his thought provides a good tool to think with after the digital turn. In his introduction to the 2010 edition of Mumford’s Technics and Civilization, Langdon Winner notes that it “openly challenged scholarly conventions of the early twentieth century and set the stage for decades of lively debate about the prospects for our technology-centered ways of living” (Mumford 2010, ix). Even if the concepts from The Myth of the Machine have not “set the stage” for debate in the twenty-first century, the ideas that Mumford develops there can pose useful challenges for present discussions around “our technology-centered ways of living.” True, “the megamachine” is somewhat clunky as a neologism, but as a term that encompasses the technical, political, economic, and social arrangements of a powerful system it seems to provide a better shorthand to capture the essence of Google or the NSA than many other terms. Mumford clearly saw the rise of the computer as the invention through which the megamachine would be able to fully secure its throne. At the same time, the idea of the “megatechnic bribe” is a thoroughly discomforting explanation for how people can grumble about Apple’s labor policies or Facebook’s uses of user data while eagerly lining up to upgrade to the latest model of iPhone or clicking “like” on a friend’s vacation photos. But in the present day the bribe has matured beyond a purely pleasant offer into a sort of threat that compels consent. Indeed, the idea of the bribe may be among Mumford’s grandest moves in the direction of telling people what they “don’t want to hear.” It is discomforting to think of your smartphone as something being used to “bribe” you, but that it is unsettling may be a result of the way in which that claim resonates.

Lewis Mumford never performed a Google search, never made a Facebook account, never Tweeted or owned a smartphone or a tablet, and his home was not a repository for the doodads of the Internet of Things. But it is doubtful that he would have been overly surprised by any of them. Though he may have appreciated them for their technical capabilities he would have likely scoffed at the utopian hopes that are hung upon them. In 1975 Mumford wrote: “Behold the ultimate religion of our seemingly rational age—the Myth of the Machine! Bigger and bigger, more and more, farther and farther, faster and faster became ends in themselves, as expressions of godlike power; and empires, nations, trusts, corporations, institutions, and power-hungry individuals were all directed to the same blank destination” (Mumford 1975, 375).

Is this assessment really so outdated today? If so, perhaps the stumbling block is merely the term “machine,” which had more purchase in the “our” of Mumford’s age than in our own. Today, that first line would need to be rewritten to read “the Myth of the Digital,” but other than that, little else would need to be changed.

_____

Zachary Loeb is a graduate student in the History and Sociology of Science department at the University of Pennsylvania. His research focuses on technological disasters, computer history, and the history of critiques of technology (particularly the work of Lewis Mumford). He is a frequent contributor to The b2 Review Digital Studies section.

_____

Works Cited

- Alvares, Claude. 1988. “Science, Colonialism, and Violence: A Luddite View.” In Science, Hegemony and Violence: A Requiem for Modernity, edited by Ashis Nandy. Delhi: Oxford University Press.

- Barlow, John Perry. 1996. “A Declaration of the Independence of Cyberspace” (Feb 8).

- Blake, Casey Nelson. 1990. Beloved Community: The Cultural Criticism of Randolph Bourne, Van Wyck Brooks, Waldo Frank, and Lewis Mumford. Chapel Hill: The University of North Carolina Press.

- Bookchin, Murray. 1995. Social Anarchism or Lifestyle Anarchism: An Unbridgeable Chasm. Oakland: AK Press.

- Cowley, Malcolm and Bernard Smith, eds. 1938. Books That Changed Our Minds. New York: The Kelmscott Editions.

- Ellul, Jacques. 1967. The Technological Society. New York: Vintage Books.

- Ellul, Jacques. 1980. The Technological System. New York: Continuum.

- Ezrahi, Yaron, Everett Mendelsohn, and Howard P. Segal, eds. 1994. Technology, Pessimism, and Postmodernism. Amherst: University of Massachusetts Press.

- Farrenkopf, John. 2001. Prophet of Decline: Spengler on World History and Politics. Baton Rouge: LSU Press.

- Fox, Richard Wightman. 1990. “Tragedy, Responsibility, and the American Intellectual, 1925-1950.” In Lewis Mumford: Public Intellectual, edited by Thomas P. Hughes and Agatha C. Hughes. New York: Oxford University Press.

- Fromm, Erich. 1968. The Revolution of Hope: Toward a Humanized Technology. New York: Harper & Row, Publishers.

- Fuchs, Christian. 2014. Social Media: A Critical Introduction. Los Angeles: Sage.

- Herf, Jeffrey. 1984. Reactionary Modernism: Technology, Culture, and Politics in Weimar and the Third Reich. Cambridge: Cambridge University Press.

- Hughes, Michael, ed. 1971. The Letters of Lewis Mumford and Frederic J. Osborn: A Transatlantic Dialogue, 1938-1970. New York: Praeger Publishers.

- Hughes, Thomas P. and Agatha C. Hughes. 1990. Lewis Mumford: Public Intellectual. New York: Oxford University Press.

- Hughes, Thomas P. 2004. Human-Built World: How to Think About Technology and Culture. Chicago: University of Chicago Press.

- Chun, Wendy Hui Kyong. 2006. Control and Freedom. Cambridge: The MIT Press.

- Ihde, Don. 1993. Philosophy of Technology: An Introduction. New York: Paragon House.

- Jacoby, Russell. 2005. Picture Imperfect: Utopian Thought for an Anti-Utopian Age. New York: Columbia University Press.

- JPS Hebrew-English Tanakh. 1999. Philadelphia: The Jewish Publication Society.

- Lasch, Christopher. 1991. The True and Only Heaven: Progress and Its Critics. New York: W. W. Norton and Company.

- Luccarelli, Mark. 1995. Lewis Mumford and the Ecological Region: The Politics of Planning. New York: The Guilford Press.

- Marx, Leo. 1988. The Pilot and the Passenger: Essays on Literature, Technology, and Culture in the United States. New York: Oxford University Press.

- Marx, Leo. 1990. “Lewis Mumford: Prophet of Organicism.” In Lewis Mumford: Public Intellectual, edited by Thomas P. Hughes and Agatha C. Hughes. New York: Oxford University Press.

- Marx, Leo. 1994. “The Idea of ‘Technology’ and Postmodern Pessimism.” In Does Technology Drive History? The Dilemma of Technological Determinism, edited by Merritt Roe Smith and Leo Marx. Cambridge: MIT Press.

- Mendelsohn, Everett. 1994. “The Politics of Pessimism: Science and Technology, Circa 1968.” In Technology, Pessimism, and Postmodernism, edited by Yaron Ezrahi, Everett Mendelsohn, and Howard P. Segal. Amherst: University of Massachusetts Press.

- Miller, Donald L. 1989. Lewis Mumford: A Life. New York: Weidenfeld and Nicolson.

- Mitcham, Carl. 1994. Thinking Through Technology: The Path between Engineering and Philosophy. Chicago: University of Chicago Press.

- Molesworth, Charles. 1990. “Inner and Outer: The Axiology of Lewis Mumford.” In Lewis Mumford: Public Intellectual, edited by Thomas P. Hughes and Agatha C. Hughes. New York: Oxford University Press.

- Mumford, Lewis. 1926. “Radicalism Can’t Die.” The Jewish Daily Forward (English section, Jun 20).

- Mumford, Lewis. 1934. Technics and Civilization. New York: Harcourt, Brace and Company.

- Mumford, Lewis. 1938. The Culture of Cities. New York: Harcourt, Brace and Company.

- Mumford, Lewis. 1944. The Condition of Man. New York: Harcourt, Brace and Company.

- Mumford, Lewis. 1951. The Conduct of Life. New York: Harcourt, Brace and Company.

- Mumford, Lewis. 1954. In the Name of Sanity. New York: Harcourt, Brace and Company.

- Mumford, Lewis. 1959. “An Appraisal of Lewis Mumford’s Technics and Civilization (1934).” Daedalus 88:3 (Summer). 527-536.

- Mumford, Lewis. 1962. The Story of Utopias. New York: Compass Books, Viking Press.

- Mumford, Lewis. 1964. “Authoritarian and Democratic Technics.” Technology and Culture 5:1 (Winter). 1-8.

- Mumford, Lewis. 1967. Technics and Human Development. Vol. 1 of The Myth of the Machine. New York: Harvest/Harcourt Brace Jovanovich.

- Mumford, Lewis. 1970. The Pentagon of Power. Vol. 2 of The Myth of the Machine. New York: Harvest/Harcourt Brace Jovanovich.

- Mumford, Lewis. 1975. Findings and Keepings: Analects for an Autobiography. New York: Harcourt Brace Jovanovich.

- Mumford, Lewis. 1979. My Works and Days: A Personal Chronicle. New York: Harcourt Brace Jovanovich.

- Mumford, Lewis. 1982. Sketches from Life: The Autobiography of Lewis Mumford. New York: The Dial Press.

- Mumford, Lewis. 2010. Technics and Civilization. Chicago: The University of Chicago Press.

- Portwood-Stacer, Laura. 2012. “Media Refusal and Conspicuous Non-consumption: The Performative and Political Dimensions of Facebook Abstention.” New Media and Society (Dec 5).

- Postman, Neil. 1993. Technopoly: The Surrender of Culture to Technology. New York: Vintage Books.

- Rushkoff, Douglas. 2010. Program or Be Programmed. Berkeley: Soft Skull Books.

- Segal, Howard P. 1994a. “The Cultural Contradictions of High Tech: or the Many Ironies of Contemporary Technological Optimism.” In Technology, Pessimism, and Postmodernism, edited by Yaron Ezrahi, Everett Mendelsohn, and Howard P. Segal. Amherst: University of Massachusetts Press.

- Segal, Howard P. 1994b. Future Imperfect: The Mixed Blessings of Technology in America. Amherst: University of Massachusetts Press.

- Spengler, Oswald. 1932a. Form and Actuality. Vol. 1 of The Decline of the West. New York: Alfred A. Knopf.

- Spengler, Oswald. 1932b. Perspectives of World-History. Vol. 2 of The Decline of the West. New York: Alfred A. Knopf.

- Spengler, Oswald. 2002. Man and Technics: A Contribution to a Philosophy of Life. Honolulu: University Press of the Pacific.

- Srnicek, Nick and Alex Williams. 2015. Inventing the Future: Postcapitalism and a World Without Work. New York: Verso Books.

- Turkle, Sherry. 2015. Reclaiming Conversation: The Power of Talk in a Digital Age. New York: Penguin Press.

- Turner, Fred. 2006. From Counterculture to Cyberculture: Stewart Brand, The Whole Earth Network and the Rise of Digital Utopianism. Chicago: The University of Chicago Press.

- Van Dijck, José. 2013. The Culture of Connectivity. Oxford: Oxford University Press.

- Watson, David. 1997. Against the Megamachine: Essays on Empire and Its Enemies. Brooklyn: Autonomedia.

- Williams, Rosalind. 1990. “Lewis Mumford as a Historian of Technology in Technics and Civilization.” In Lewis Mumford: Public Intellectual, edited by Thomas P. Hughes and Agatha C. Hughes. New York: Oxford University Press.

- Williams, Rosalind. 1994. “The Political and Feminist Dimensions of Technological Determinism.” In Does Technology Drive History? The Dilemma of Technological Determinism, edited by Merritt Roe Smith and Leo Marx. Cambridge: MIT Press.

- Winner, Langdon. 1986. The Whale and the Reactor. Chicago: University of Chicago Press.

- Winner, Langdon. 1989. Autonomous Technology: Technics-out-of-Control as a Theme in Political Thought. Cambridge: MIT Press.

- Wojtowicz, Robert. 1996. Lewis Mumford and American Modernism: Eutopian Themes for Architecture and Urban Planning. Cambridge: Cambridge University Press.
